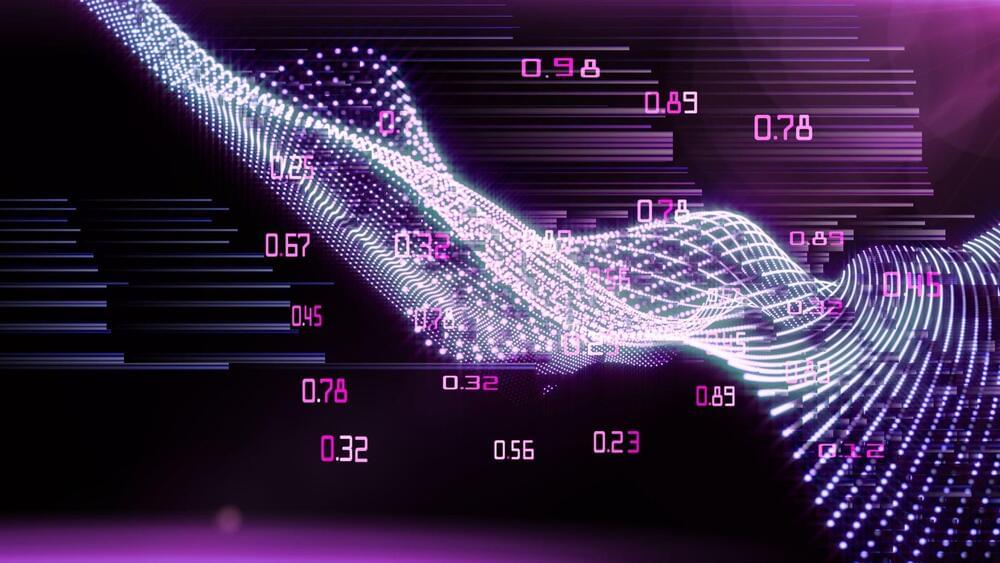

The use of machine learning has grown considerably in several fields in recent years, driven by its strong performance. Thanks to the computing capacity of modern computers and graphics cards, deep learning can now achieve results that sometimes exceed those of human experts. However, its use in sensitive areas such as medicine or finance raises confidentiality concerns. Differential privacy (DP) is a formal privacy guarantee that limits what adversaries with access to a machine learning model can learn about specific training points. The most common training approach for differential privacy in image recognition is differentially private stochastic gradient descent (DPSGD). However, the deployment of differential privacy is limited by the performance degradation that current DPSGD systems cause.

Existing methods for differentially private deep learning still leave room for improvement because, during stochastic gradient descent, they accept every model update regardless of whether the corresponding objective function value improves. In some updates, the noise added to the gradients can worsen the objective function value, especially when convergence is imminent. As a result, the optimization target degrades, the privacy budget is wasted, and the resulting models get worse. To address this problem, a research team from Shanghai University in China proposes a simulated annealing-based differentially private stochastic gradient descent (SA-DPSGD) approach that accepts a candidate update with a probability that depends on the quality of the update and the number of iterations.
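For context, the noisy update that standard DPSGD applies at every step can be sketched as follows. This is a minimal illustration of the commonly described recipe (clip each example's gradient, sum, add Gaussian noise, average), not the code of any particular system. The function names `clip` and `dp_sgd_step` and the parameters `max_norm` and `noise_multiplier` are assumptions chosen for this example.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient vector so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(weights, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One noisy update: clip per-example gradients, sum, add noise, average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    batch_size = len(clipped)
    noisy_mean = []
    for i in range(len(weights)):
        total = sum(g[i] for g in clipped)
        # Gaussian noise scaled to the clipping bound (hypothetical parameter names).
        noise = rng.gauss(0.0, noise_multiplier * max_norm)
        noisy_mean.append((total + noise) / batch_size)
    # The update is always applied, whether or not the objective improves.
    return [w - lr * g for w, g in zip(weights, noisy_mean)]


rng = random.Random(0)
new_weights = dp_sgd_step(
    weights=[1.0, 1.0],
    per_example_grads=[[3.0, 4.0], [0.1, -0.2]],
    lr=0.1,
    max_norm=1.0,
    noise_multiplier=1.1,
    rng=rng,
)
print(new_weights)
```

Because the step is applied unconditionally, a draw of noise that points away from the descent direction still changes the weights, which is the behavior SA-DPSGD is designed to filter.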

Concretely, a model update is accepted if it yields a better objective function value. Otherwise, the update is rejected with a certain probability. To avoid settling into a local optimum, the authors use probabilistic rejections rather than deterministic ones and limit the number of consecutive rejections. In this way, the simulated annealing algorithm selects model updates probabilistically during the stochastic gradient descent process.
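The acceptance rule described above can be sketched roughly as follows. The article does not give the exact probability formula, so the exponential form `exp(-delta * iteration / temperature)` is an assumption borrowed from classic simulated annealing, and the names `accept_update`, `temperature`, and `max_rejections` are hypothetical.

```python
import math
import random


def accept_update(delta, iteration, rejections, max_rejections, temperature, rng):
    """Decide whether to keep a candidate model update.

    delta: candidate objective value minus current objective value
           (negative or zero means the candidate is not worse).
    rejections: number of consecutive rejections so far.
    """
    if delta <= 0:
        return True  # a better (or equal) objective value is always accepted
    if rejections >= max_rejections:
        return True  # cap on consecutive rejections, to avoid getting stuck
    # Assumed form: worse updates and later iterations are accepted less often.
    accept_prob = math.exp(-delta * iteration / temperature)
    return rng.random() < accept_prob


rng = random.Random(0)
print(accept_update(-0.05, iteration=10, rejections=0, max_rejections=3,
                    temperature=1.0, rng=rng))
print(accept_update(0.02, iteration=10, rejections=0, max_rejections=3,
                    temperature=1.0, rng=rng))
```

In a training loop, a rejected candidate would simply be discarded and the previous weights kept, with the rejection counter reset whenever an update is accepted.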