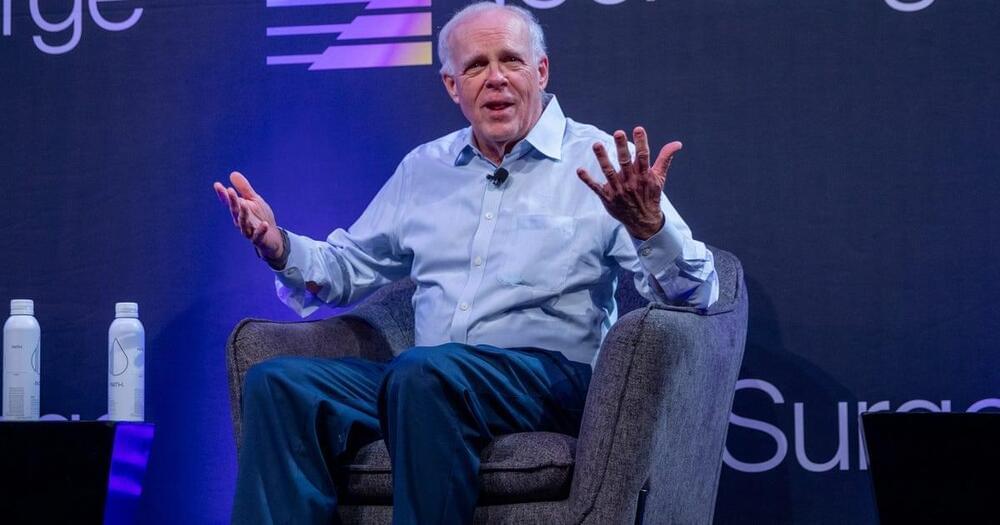

ChatGPT and other AI systems are propelling us faster toward the long-held technology dream of artificial general intelligence and the radical transformation called the “singularity,” Silicon Valley chip luminary and former Stanford University professor John Hennessy believes.

Hennessy won computing’s highest prize, the Turing Award, with colleague Dave Patterson for developing the computing architecture that made energy-efficient smartphone chips possible and that is now the foundation for virtually all major processors. He’s also chairman of Google parent company Alphabet.