Some possible economic and geopolitical implications.

Welcome to the FUTURE of IT jobs! In today’s video, “Exciting New IT Jobs That Will Come Out of AI and the Ones Destined for Automation”, we take a deep dive into the ever-evolving landscape of technology and employment. We’ll explore some new jobs that have come out of AI and also some jobs that are being automated because of AI.

Whether you’re a tech enthusiast, a professional looking to future-proof your career, or someone just curious about the tech industry, this video is your one-stop guide!

Timestamps: ⏰

00:00 — Intro.

00:57 — Prompt Engineer.

01:40 — Subject Matter Expert.

03:37 — Data Protection Officer (DPO)

05:20 — Jobs becoming more automated.

08:10 — QA Engineer.

09:20 — Outro.

Github: https://github.com/TiffinTech.

LinkedIn: https://www.linkedin.com/in/tiffany-janzen/

Instagram: https://www.instagram.com/tiffintech.

Tiktok: https://www.tiktok.com/@tiffintech?lang=en.

In this video, we’ll dive deep into the cutting-edge research on how Artificial Intelligence (AI) and Artificial General Intelligence (AGI) are helping us to better understand the aging process and unlock the secrets to living forever.

We’ll discuss the latest breakthroughs in AI and AGI and how they are enabling researchers to analyze vast amounts of data, identify patterns, and make predictions that were once impossible. We’ll also explore how AI and AGI are being used to develop new treatments and therapies to prevent or reverse aging-related diseases within the Longevity Industry.

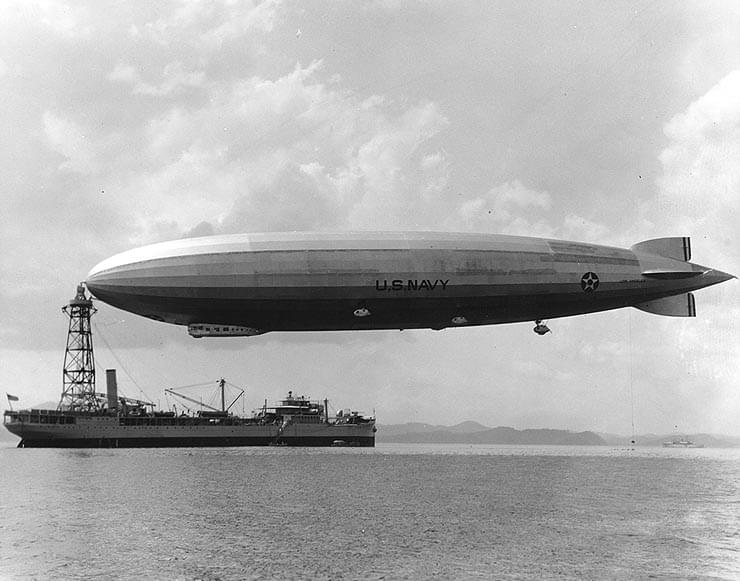

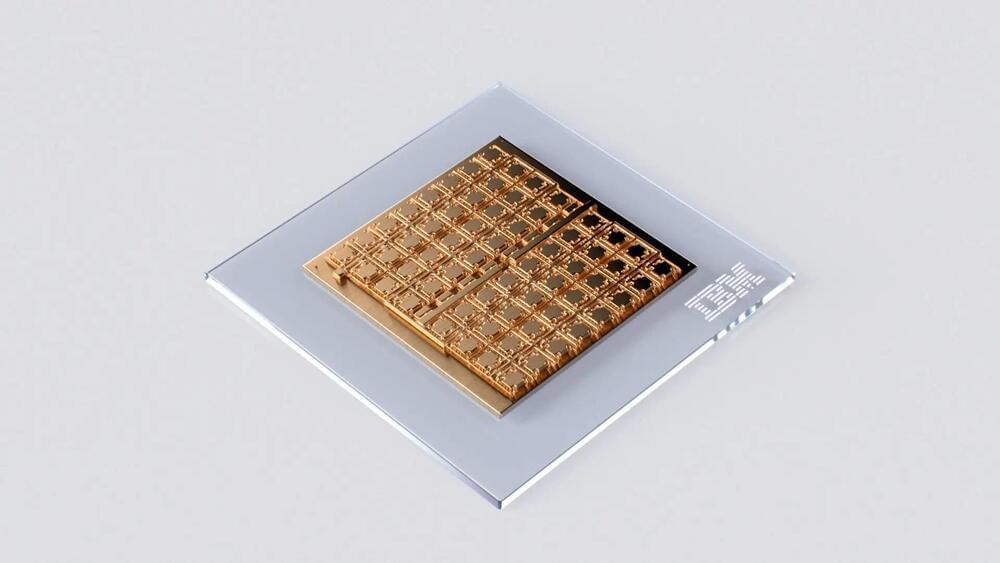

For decades, electronics engineers have been trying to develop increasingly advanced devices that can perform complex computations faster while consuming less energy. This has become even more salient after the advent of artificial intelligence (AI) and deep learning algorithms, which typically have substantial requirements both in terms of data storage and computational load.

A promising approach for running these algorithms is known as analog in-memory computing (AIMC). As its name suggests, this approach consists of developing electronics that can perform computations and store data on a single chip. To realistically improve both speed and energy consumption, the approach should ideally also support on-chip digital operations and communications.

Researchers at IBM Research Europe recently developed a new 64-core mixed-signal in-memory computing chip based on phase-change memory devices that could better support the computations of deep neural networks. Their 64-core chip, presented in a paper in Nature Electronics, has so far attained highly promising results, retaining the accuracy of deep learning algorithms, while reducing computation times and energy consumption.
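To make the idea of computing where the data is stored more concrete, here is a minimal, idealized sketch of an analog matrix-vector multiplication. It is not the IBM chip's design: the function names, the differential conductance-pair encoding, and the Gaussian noise model are all illustrative assumptions. Each weight is stored as a pair of conductances, the inputs are applied as voltages, and the output of each row is the sum of the resulting currents.

```python
import random

def program_conductances(weights, g_max=1.0):
    """Map each signed weight to a hypothetical (g_plus, g_minus) conductance pair.

    Positive weights go on g_plus, negative weights on g_minus, scaled so the
    largest magnitude uses the full conductance range g_max (an assumption).
    """
    scale = max(abs(w) for row in weights for w in row) or 1.0
    pairs = [
        [(g_max * max(w, 0.0) / scale, g_max * max(-w, 0.0) / scale) for w in row]
        for row in weights
    ]
    # The second value converts summed currents back into weight units.
    return pairs, scale / g_max

def analog_matvec(pairs, rescale, voltages, noise_std=0.0, rng=None):
    """Idealized in-memory multiply: currents (conductance * voltage) add up per row.

    noise_std models device variability as additive Gaussian noise on each
    effective conductance; this noise model is an illustrative assumption.
    """
    rng = rng or random.Random(0)
    outputs = []
    for row in pairs:
        current = 0.0
        for (g_plus, g_minus), v in zip(row, voltages):
            g = (g_plus - g_minus) + rng.gauss(0.0, noise_std)
            current += g * v
        outputs.append(current * rescale)
    return outputs

weights = [[1.0, -2.0], [0.5, 0.0]]
inputs = [3.0, 1.0]
pairs, rescale = program_conductances(weights)
ideal = analog_matvec(pairs, rescale, inputs)
noisy = analog_matvec(pairs, rescale, inputs, noise_std=0.01)
print(ideal, noisy)
```

With `noise_std=0.0` the result matches an ordinary matrix-vector product. Raising `noise_std` gives a rough feel for why retaining accuracy on analog hardware is a notable result.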

The machine will make power grid evaluations safer and more reliable.

A new grid analytics company in Waterloo, Canada, is aiming to use robotics to predict power outages before they occur, according to a report by CTV News.

A process called smoothing helps robots quickly determine an efficient manipulation strategy for moving things.

The field of robotics has been witnessing rapid advancements in recent times, with systems becoming capable of carrying out complex tasks. One thing robots still struggle with is whole-body manipulation, a skill that humans typically excel at.

Consider carrying a big, weighty package up a set of stairs. A human being may spread their fingers and lift the box with both hands, then support it against their chest by balancing it on top of their forearms while utilizing the entire body to move the box.

A new AI technique enables a robot to develop complex plans for manipulating an object using its entire hand, not just fingertips. This model can generate effective plans in about a minute using a standard laptop.

With AI models like DALL-E 2, Midjourney, Stable Diffusion, and Adobe’s Firefly, over 15 billion images have been created, each raising the ceiling of creativity.

In the blink of an eye, the world of digital art has undergone a transformative revolution catalyzed by the surge in AI-generated masterpieces. A recent report by the Artificial Intelligence-centric blog, Everypixel Journal.

Communities dedicated to AI art have sprung up across the digital landscape, acting as vibrant hubs for artists to hone their skills, exchange techniques, and create mesmerizing imagery. From the likes of Reddit to Twitter and Discord, a dynamic ecosystem of innovation has emerged, culminating in an astonishing volume of content.

Many users who want more from their smartphones are glad to use a plethora of advanced features, mainly for health and entertainment. It turns out that these features create a security risk when making or receiving calls.

Researchers from Texas A&M University and four other institutions created malware called EarSpy, which uses machine learning algorithms to filter caller information from ear speaker vibration data recorded by an Android smartphone’s own motion sensors, without overcoming any safeguards or needing user permissions.

In a talk from the cutting edge of technology, OpenAI cofounder Greg Brockman explores the underlying design principles of ChatGPT and demos some mind-blowing, unreleased plug-ins for the chatbot that sent shockwaves across the world. After the talk, head of TED Chris Anderson joins Brockman to dig into the timeline of ChatGPT’s development and get Brockman’s take on the risks, raised by many in the tech industry and beyond, of releasing such a powerful tool into the world.

If you love watching TED Talks like this one, become a TED Member to support our mission of spreading ideas: https://ted.com/membership.

Follow TED!

Twitter: https://twitter.com/TEDTalks.

Instagram: https://www.instagram.com/ted.

Facebook: https://facebook.com/TED

LinkedIn: https://www.linkedin.com/company/ted-conferences.

TikTok: https://www.tiktok.com/@tedtoks.

The TED Talks channel features talks, performances and original series from the world’s leading thinkers and doers. Subscribe to our channel for videos on Technology, Entertainment and Design — plus science, business, global issues, the arts and more. Visit https://TED.com to get our entire library of TED Talks, transcripts, translations, personalized talk recommendations and more.

Watch more: go.ted.com/gregbrockman.