Multimaterial 3D-printing approach produces functional devices in a single shot.

The onslaught of press, research and perceived urgency has done little to prepare business and information technology leaders to deploy artificial intelligence-powered technologies.

That’s according to the first Cisco AI Readiness Index, issued Tuesday. The company used a double-blind survey of over 8,000 business and IT leaders worldwide.

The findings are alarming. Although 97% of the organizations say that the urgency around deploying AI tech has risen in the last six months, only 14% feel they’re prepared to deploy and utilize it.


A new hallucination index developed by the research arm of San Francisco-based Galileo, which helps enterprises build, fine-tune and monitor production-grade large language model (LLM) apps, shows that OpenAI’s GPT-4 model works best and hallucinates the least when challenged with multiple tasks.

Published today, the index looked at nearly a dozen open and closed-source LLMs, including Meta’s Llama series, and assessed each model’s performance across different tasks to see which LLM hallucinates the least.

Amazon on Wednesday announced Astro for Business, a version of its household robot that it’s framing as a crime prevention tool for retailers, manufacturers and a range of other industries, in spaces of up to 5,000 square feet. Astro for Business is launching only in the U.S. to start, and it comes at a steep price point of $2,349.99.

Amazon unveiled Astro, its first home robot, in September 2021. The squat, three-wheeled device can roll around the house to answer Alexa voice commands, and it has a 42-inch periscope camera that allows it to see over countertops or other obstacles to check if a stove has been left on, among other tasks.

Two years on from its debut, the original Astro, which costs $1,599, is available in limited quantities and on an invite-only basis.

An artificial sensory system that is able to recognize fine textures—such as twill, corduroy and wool—with a high resolution, similar to a human finger, is reported in a Nature Communications paper. The findings may help improve the subtle tactile sensation abilities of robots and human limb prosthetics and could be applied to virtual reality in the future, the authors suggest.

Humans can gently slide a finger on the surface of an object and identify it by capturing both static pressure and high-frequency vibrations. Previous approaches to creating artificial tactile sensors for sensing physical stimuli, such as pressure, have been limited in their ability to identify real-world objects upon touch, or have relied on multiple sensors. Creating a real-time artificial sensory system with high spatiotemporal resolution and sensitivity has been challenging.

Chuan Fei Guo and colleagues present a flexible slip sensor that mimics the features of a human fingerprint to enable the system to recognize small features on surface textures when touching or sliding the sensor across the surface. The authors integrated the sensor onto a prosthetic human hand and added machine learning to the system.

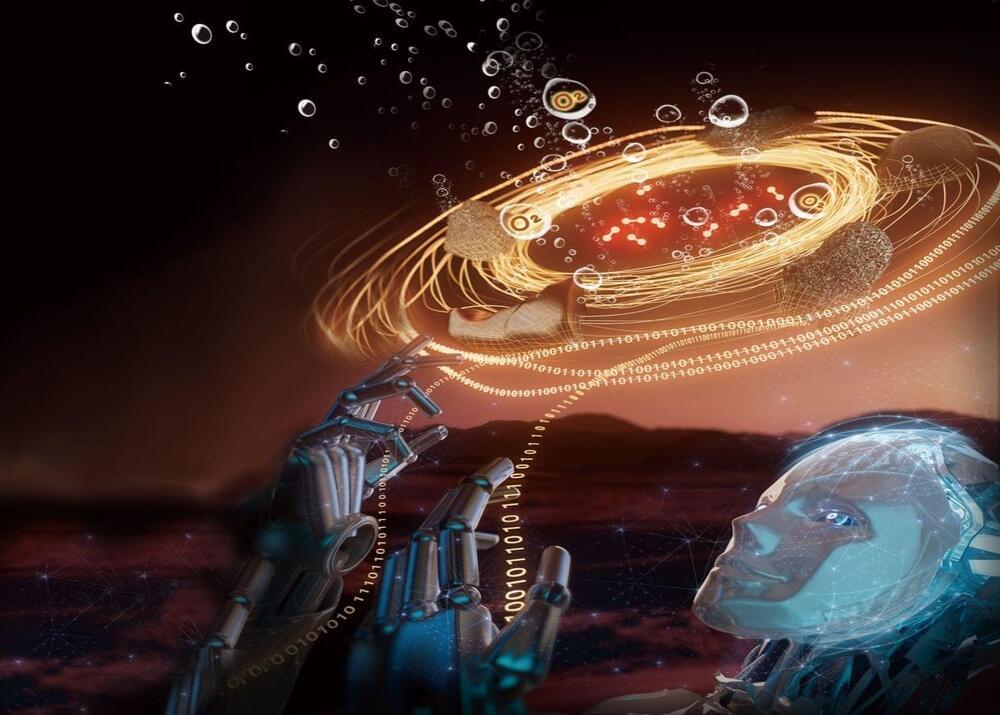

The robotic AI-Chemist@USTC makes a useful oxygen-generation catalyst from Martian meteorites. (Image by AI-Chemist Group at USTC)

Migrating to and living on Mars have long been depicted in science fiction. But before the dream turns into reality, there is a hurdle humans must overcome: the lack of essential chemicals such as oxygen for long-term survival on the planet. The recent discovery of water activity on Mars, however, offers hope. Scientists are now exploring the possibility of decomposing water to produce oxygen through solar-powered electrochemical water oxidation with the help of oxygen evolution reaction (OER) catalysts. The challenge is to find a way to synthesize these catalysts in situ using materials on Mars, instead of transporting them from Earth, which is costly.

To tackle this problem, a team led by Prof. LUO Yi, Prof. JIANG Jun, and Prof. SHANG Weiwei from the University of Science and Technology of China (USTC) of the Chinese Academy of Sciences (CAS) recently made it possible to synthesize and optimize OER catalysts automatically from Martian meteorites using their robotic artificial intelligence (AI)-chemist.

3D printing is advancing rapidly, and the range of materials that can be used has expanded considerably. While the technology was previously limited to fast-curing plastics, it has now been made suitable for slow-curing plastics as well. These have decisive advantages as they have enhanced elastic properties and are more durable and robust.

The use of such polymers is made possible by a new technology developed by researchers at ETH Zurich and a US start-up. As a result, researchers can now 3D print complex, more durable robots from a variety of high-quality materials in one go. This new technology also makes it easy to combine soft, elastic, and rigid materials. The researchers can also use it to create delicate structures and parts with cavities as desired.