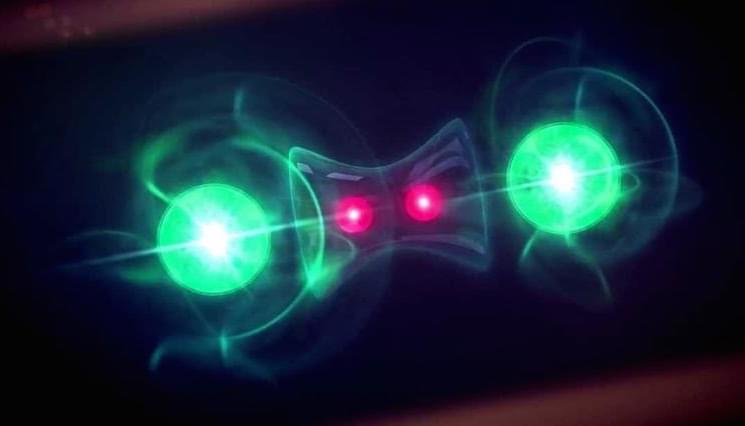

Quantum mechanics is the science behind nuclear energy, smartphones, and particle collisions. Yet almost a century after its discovery, there is still controversy over what the theory actually means. The problem is that its key element, the quantum-mechanical wave function describing atoms and subatomic particles, isn’t observable. Because physics is an experimental science, physicists continue to argue over whether the wave function can be taken as real, or whether it is just a tool for making predictions about what can be measured: typically large, “classical” everyday objects.

The view of the antirealists, advocated by Niels Bohr, Werner Heisenberg, and an overwhelming majority of physicists, has become the orthodox interpretation. For Bohr especially, reality was like a movie shown without a film or projector creating it. “There is no quantum world,” Bohr reportedly declared, suggesting an imaginary border between the realm of microscopic, “unreal” quantum physics and that of “real,” macroscopic objects, a boundary that experiments have dealt serious blows ever since. Albert Einstein was a fierce critic of this airy philosophy, although he never came up with an alternative theory himself.

For many years only a small number of outcasts, including Erwin Schrödinger and Hugh Everett, populated the camp of the realists. This renegade view, however, is becoming increasingly popular, and it naturally raises the question of what this quantum reality really is. This question occupied me for many years, until I arrived at the conclusion that quantum reality, deep down at the most fundamental level, is an all-encompassing, unified whole: “The One.”