In the six months since FLI published its open letter calling for a pause on giant AI experiments, we have seen overwhelming expert and public concern about the out-of-control AI arms race — but no slowdown. In this video, we call for U.S. lawmakers to step in, and explore the policy solutions necessary to steer this powerful technology to benefit humanity.

Category: military – Page 56

Blowback: How Israel Went From Helping Create Hamas to Bombing It

“This isn’t a conspiracy theory. Listen to former Israeli officials such as Brig. Gen. Yitzhak Segev, who was the Israeli military governor in Gaza in the early 1980s. Segev later told a New York Times reporter that he had helped finance the Palestinian Islamist movement as a “counterweight” to the secularists and leftists of the Palestine Liberation Organization and the Fatah party, led by Yasser Arafat (who himself referred to Hamas as “a creature of Israel”).

“The Israeli government gave me a budget,” the retired brigadier general confessed, “and the military government gives to the mosques.”

“Hamas, to my great regret, is Israel’s creation,” Avner Cohen, a former Israeli religious…

What do you know about Hamas?

That it’s sworn to destroy Israel? That it’s a terrorist group, proscribed both by the United States and the European Union? That it rules Gaza with an iron fist? That it’s killed hundreds of innocent Israelis with rocket, mortar, and suicide attacks?

World’s top supercomputer to simulate nuclear reactors

The supercomputer, which is under construction, is 50 times more powerful than the existing supercomputer at the facility.

The world’s most powerful supercomputer, Aurora, is being set up in the US to help scientists at the Argonne National Laboratory (ANL) simulate new nuclear reactors that are more efficient and safer than their predecessors, a press release said.

The US is already home to some of the world’s fastest supercomputers, as measured by TOP500. These supercomputers can be tasked with a variety of computational roles. Last month, Interesting Engineering reported how the Los Alamos National Laboratory (LANL) planned to use a supercomputer to check nuclear stockpiles for the US military.

Why the AH-64 Apache is the World’s Best Attack Helicopter

Nearly three decades later, the Apache’s status as the world’s premier attack helicopter remains largely unchallenged.

Here’s What You Need to Remember: The latest AH-64E Guardian model boasts uprated engines, remote drone-control capabilities, and sensors designed to highlight muzzle flashes on the battlefield below. The Army has also experimentally deployed Apaches on U.S. Navy ships and had them practice anti-ship missions, and even tested a laser-armed Apache.

Early in the morning of January 17, 1991, eight sleek helicopters bristling with missiles swooped low over the sands of the An Nafud desert as they soared towards the border separating Saudi Arabia from Iraq.

Coming Soon: Portable Poop-Powered Nuclear Reactors?

O.0!!!!

DARPA is our favorite source for off-the-wall yet compelling technology. We’ve seen proposals for flying cars, handheld nuclear fusion devices, and now poop-powered nuclear reactors. It’s not as crazy as it seems.

Wired explains that there would be multiple benefits to sticking portable, poop-powered reactors at military bases. The reactors could eliminate human waste (and the need to dispose of it) at the same time as they reduce the need to scrounge up pricey fuel sources. And since people never stop pooping, potential fuel is virtually limitless.

DARPA’s portable nuclear device doesn’t exist yet–the agency is currently soliciting design concepts. Want to apply? DARPA asks that generator designs produce 15,000 gallons per day of fuel and support an electrical load of 5 to 10 MW. The design should also be inherently safe. That means human waste shouldn’t go flying everywhere if something goes wrong with the reactor. If DARPA finds what it is looking for, perhaps the agency should consider tipping off Bill Gates and Toshiba–they’re also working on hot tub-sized nuclear reactors.

New 4th Dimension Metamaterial Discovery Suggests How UAP Might Defy Physics in Our Airspace

If you’ve been watching the recent UAP reporting or the US Congressional Committee Hearing on UAP, you already know that we have military and civilian pilot eyewitness accounts in volume, as well as footage of incidents like the “tic-tac” live sighting in 2004. There are many more incidents whose video recordings are still classified and not yet available to the public. There are reports and testimony from career Navy and Air Force officials who’ve reported similar sightings. Comments such as the one Cmdr. David Fravor (Ret.) made after the 2004 incident are common among experienced military pilots.

We don’t have the kind of physics understanding, now or back then, that would allow us the ability to do what we’re seeing these UAP do. — US Navy Cmdr. David Fravor (Ret)

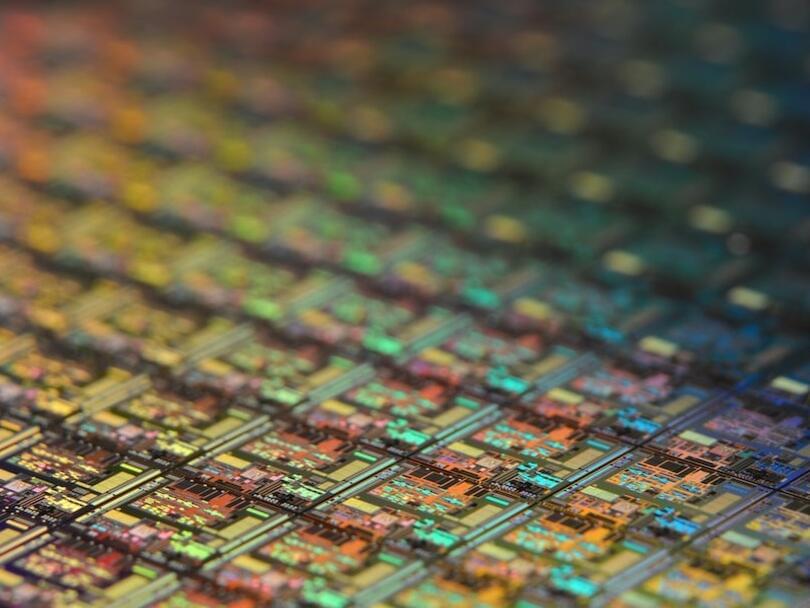

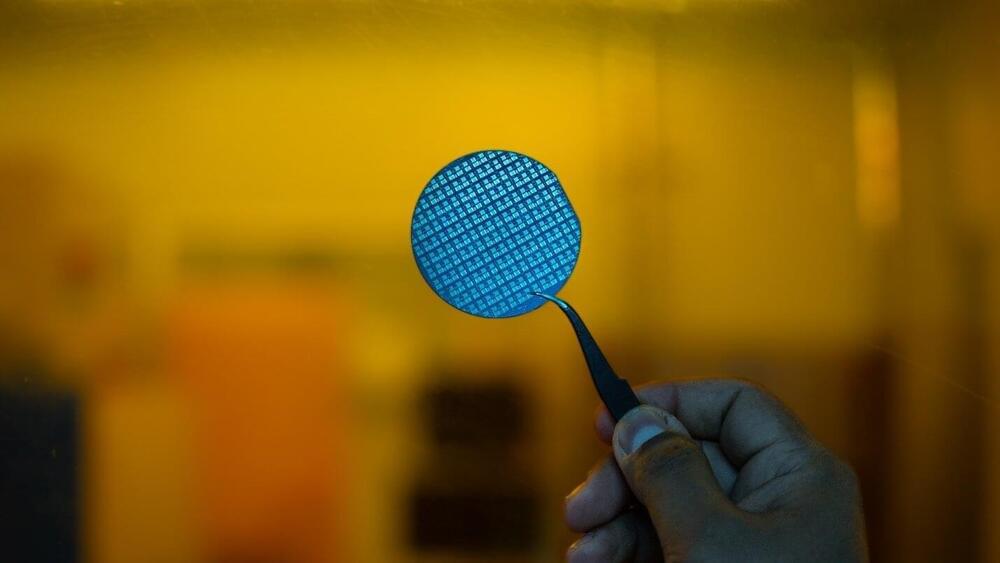

Indian research team develops fully indigenous gallium nitride power switch

Researchers at the Indian Institute of Science (IISc) have developed a fully indigenous gallium nitride (GaN) power switch with potential applications in systems like power converters for electric vehicles and laptops, as well as in wireless communications. The entire process of building the switch, from material growth to device fabrication to packaging, was developed in-house at the Center for Nano Science and Engineering (CeNSE), IISc.

Due to their high performance and efficiency, GaN transistors are poised to replace traditional silicon-based transistors as the building blocks in many electronic devices, such as ultrafast chargers for electric vehicles, phones and laptops, as well as space and military applications such as radar.

“It is a very promising and disruptive technology,” says Digbijoy Nath, Associate Professor at CeNSE and corresponding author of the study published in Microelectronic Engineering. “But the material and devices are heavily import-restricted … We don’t have gallium nitride wafer production capability at commercial scale in India yet.” The know-how of manufacturing these devices is also a heavily-guarded secret with few studies published on the details of the processes involved, he adds.

China tested the first ever drone equipped with a rotary detonation engine — the propulsion system will open the way to the creation of hypersonic aircraft and missiles

A private Chinese company, Thrust-to-Weight Ratio Engine, was able to test a rotary detonation engine on a drone. This is the first such test. Previously, only bench tests were conducted.

Here’s What We Know.

The rotary detonation engine will open the way to the development of hypersonic transport systems, including aircraft and missiles. Another feature of the propulsion system is reduced fuel consumption.

The company, Thrust-to-Weight Ratio Engine, developed the engine jointly with the Industrial Technology Research Institute of Chongqing University. It was named the FB-1 Rotating Detonation Engine.

Autonomous drone could help end high-speed car chases in NY

The drone can travel at 45 miles an hour and read license plates from 800 feet away. It can be equipped with a speaker and a spotlight. Who needs a police car for a chase now?

A new high-tech autonomous drone, unveiled by California-based company Skydio, could help New York police end high-speed car chases. The company, which has supplied drones for both military and utility purposes, is working to use drones as first responders (DFR) for police in the US.

A decade ago, Skydio began its journey as a company that provides athletes with a ‘follow-me-everywhere’ drone that could help shoot videos from the air while on the move. Three years ago, the company made a significant pivot as it looked…

Elon Musk Wins US Space Force Contract for Starshield

Elon Musk’s SpaceX has received its first contract from the US Space Force to provide customized satellite communications for the military under the company’s new Starshield program, extending the provocative billionaire’s role as a defense contractor.

Space Exploration Technologies Corp. is competing with 15 companies, including Viasat Inc., for $900 million in work orders through 2028 under the Space Force’s new “Proliferated Low Earth Orbit” contracts program, which is tapping into communications services of satellites orbiting from 100 miles to 1,000 miles (160 kilometers to 1,600 kilometers) above Earth.

The Starshield service will be provided over SpaceX’s existing constellation of Starlink communications satellites.