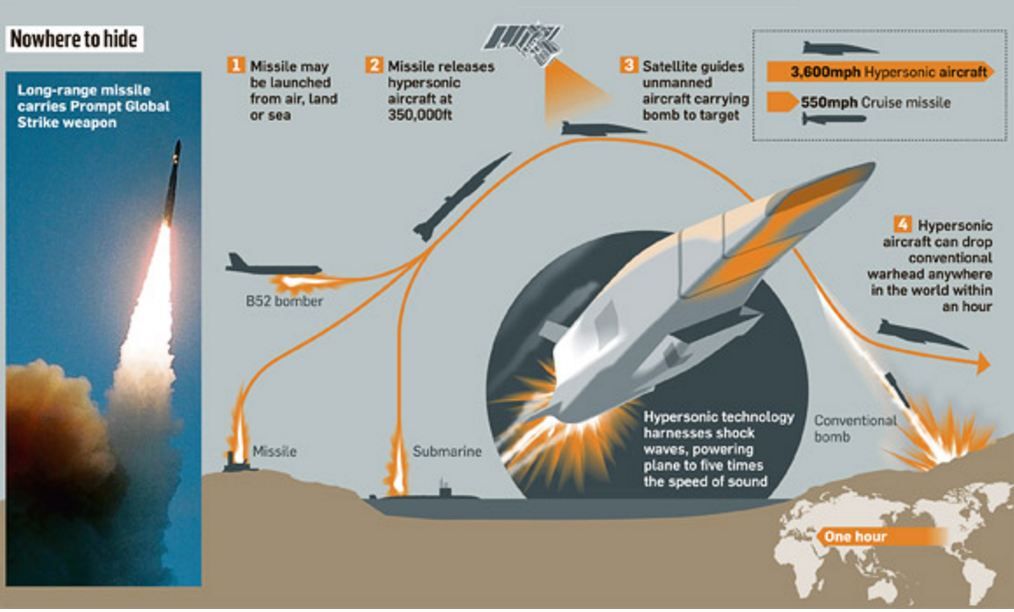

Russia is working to deploy a revolutionary hypersonic maneuvering strike missile by 2020, according to a Russian defense industry leader.

Boris Obnosov, director of the state-run Tactical Missiles Corp., told a Russian news agency the new hypersonic missile will be capable of penetrating advanced missile defenses and represents a revolutionary advance in military technology.

“It’s obvious that with such speeds—when missiles will be capable of flying through the atmosphere at speeds of seven to 12 times the speed of sound, all [air] defense systems will be weakened considerably,” Obnosov told the Rambler News Service this week.