Enjoy the videos and music you love, upload original content, and share it all with friends, family, and the world on YouTube.

Category: media & arts

First Look Inside Blue Origin’s New Glenn Factory w/ Jeff Bezos!

Join Jeff Bezos for a tour inside Blue Origin’s New Glenn Production Facility at Cape Canaveral, Florida. This video was shot on May 30th, 2024.

00:00 — Intro.

00:40 — Interview Starts [Lobby]

05:20 — Recovering Saturn V Engines.

08:35 — Tank Production.

16:40 — Second Stage.

23:50 — Aft Section.

33:15 — Forward Section.

42:08 — Machine Shop.

51:35 — Payload Adapter and Fairings.

1:00:00 — Engines.

1:11:20 — Outro.

Want to support what I do? Consider becoming a Patreon supporter for access to exclusive livestreams and our Discord channel: / everydayastronaut.

Or become a YouTube member for some bonus perks as well! — / @everydayastronaut.

The best place for all your space merch needs!

https://everydayastronaut.com/shop/

All music is original! Check out my album \.

The Soliton Model of Elementary Particles (Dennis Braun)

2 MINUTES AGO: Scientists Warn: LLMs Are NOW Developing Their Own Understanding of Reality!

2 MINUTES AGO: Meta’s AI Training Sparks Worldwide Privacy Concerns

The Next Technological Revolution

If you love card games, definitely check out Doomlings. Click here and use code ISAAC20 to get 20% off of your copy of Doomlings! https://bit.ly/IsaacDoomlings.

Technology has shaped our civilization as it grew down the centuries, and since the industrial revolution, each new generation seems defined by some new technological revolution… So what will the next revolution be?

Visit our Website: http://www.isaacarthur.net.

Join Nebula: https://go.nebula.tv/isaacarthur.

Support us on Patreon: / isaacarthur.

Support us on Subscribestar: https://www.subscribestar.com/isaac-a…

Facebook Group: / 1583992725237264

Reddit: / isaacarthur.

Twitter: / isaac_a_arthur on Twitter and RT our future content.

SFIA Discord Server: / discord.

Credits:

The Next Technological Revolution.

Episode 460; August 15, 2024

Produced, Written & Narrated by: Isaac Arthur.

Editor: Lukas Konecny.

Select imagery/video supplied by Getty Images.

Music Courtesy of Epidemic Sound http://epidemicsound.com/creator.

Stellardrone, \

Were Primitive Humans Uplifted By Aliens?

Many believe humanity’s climb upward may have been assisted by outsiders. Is this possible, and if so, what does that tell us about our own past… and future?

Watch my exclusive video Jupiter Brains & Mega Minds: https://nebula.tv/videos/isaacarthur–…

Get Nebula using my link for 40% off an annual subscription: https://go.nebula.tv/isaacarthur.

Get a Lifetime Membership to Nebula for only $300: https://go.nebula.tv/lifetime?ref=isa…

Use the link gift.nebula.tv/isaacarthur to give a year of Nebula to a friend for just $30.

Join this channel to get access to perks:

/ @isaacarthursfia.

Credits:

Were Primitive Humans Uplifted?

Episode 459a; August 11, 2024

Produced, Narrated & Written: Isaac Arthur.

Editor: Evan Schultheis.

Graphics: Jeremy Jozwik & Ken York YD Visual.

Select imagery/video supplied by Getty Images.

Music Courtesy of Epidemic Sound http://epidemicsound.com/creator.

Sergey Cheremisinov, \

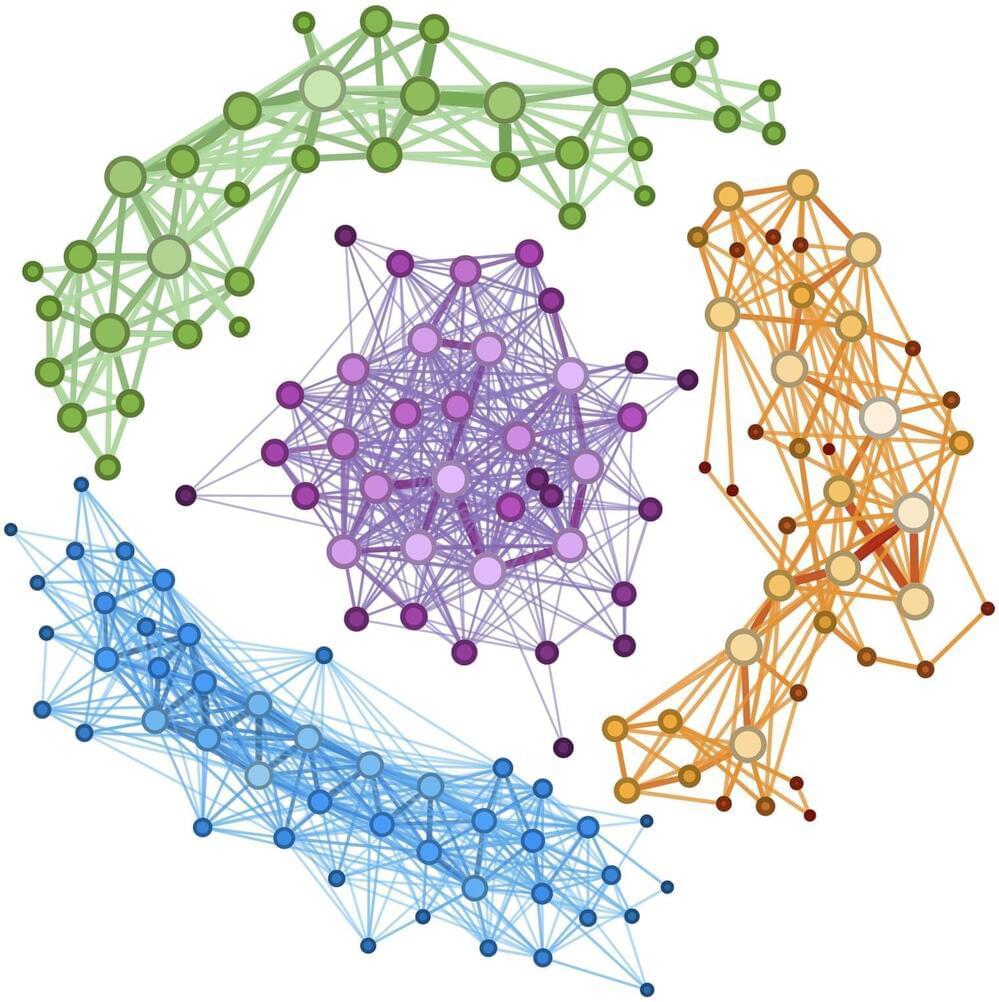

The structure of sound: Network insights into Bach’s music

Even today, centuries after he lived, Johann Sebastian Bach remains one of the world’s most popular composers. On Spotify, close to seven million people stream his music per month, and his listener count is higher than that of Mozart and even Beethoven. The Prélude to his Cello Suite No. 1 in G Major has been listened to hundreds of millions of times.

The Fermi Paradox: Migration

Go to https://brilliant.org/IsaacArthur/ to get a 30-day free trial and 20% off their annual subscription.

We often wonder where all the vast and ancient alien civilizations are, but could it be that they’ve migrated far away in space or time, or even journeyed beyond our cosmos?

Credits:

The Fermi Paradox: Migration.

Episode 459; August 8, 2024

Produced, Written & Narrated by: Isaac Arthur.

Graphics:

Jeremy Jozwik.

Ken York.

LegionTech Studios.

Sergio Botero.

Select imagery/video supplied by Getty Images.

Music Courtesy of Epidemic Sound http://epidemicsound.com/creator.

Lombus, \