Expand your scientific horizon with Brilliant! First 200 to use our link https://brilliant.org/sabine will get 20% off the annual premium subscription.

Today I have an update on the reproduction efforts for the supposed room-temperature superconductor LK99, the first images from the Euclid mission, more trouble with Starlink satellites, first results from a new simulation of cosmological structure formation, how to steer drops with ultrasound, bacteria that make plastic, an improvement for wireless power transfer, better earthquake warnings, an attempt to predict war, and of course the telephone will ring.

Here is the link to the overview on the LK99 reproduction experiments that I mentioned: https://urlis.net/vesb75fq.

💌 Support us on Donorbox ➜ https://donorbox.org/swtg.

🤓 Transcripts and written news on Substack ➜ https://sciencewtg.substack.com/

👉 Transcript with links to references on Patreon ➜ https://www.patreon.com/Sabine.

📩 Sign up for my weekly science newsletter. It’s free! ➜ https://sabinehossenfelder.com/newsletter/

👂 Now also on Spotify ➜ https://open.spotify.com/show/0MkNfXlKnMPEUMEeKQYmYC

🔗 Join this channel to get access to perks ➜ https://www.youtube.com/channel/UC1yNl2E66ZzKApQdRuTQ4tw/join.

🖼️ On Instagram ➜ https://www.instagram.com/sciencewtg/

00:00 Intro.

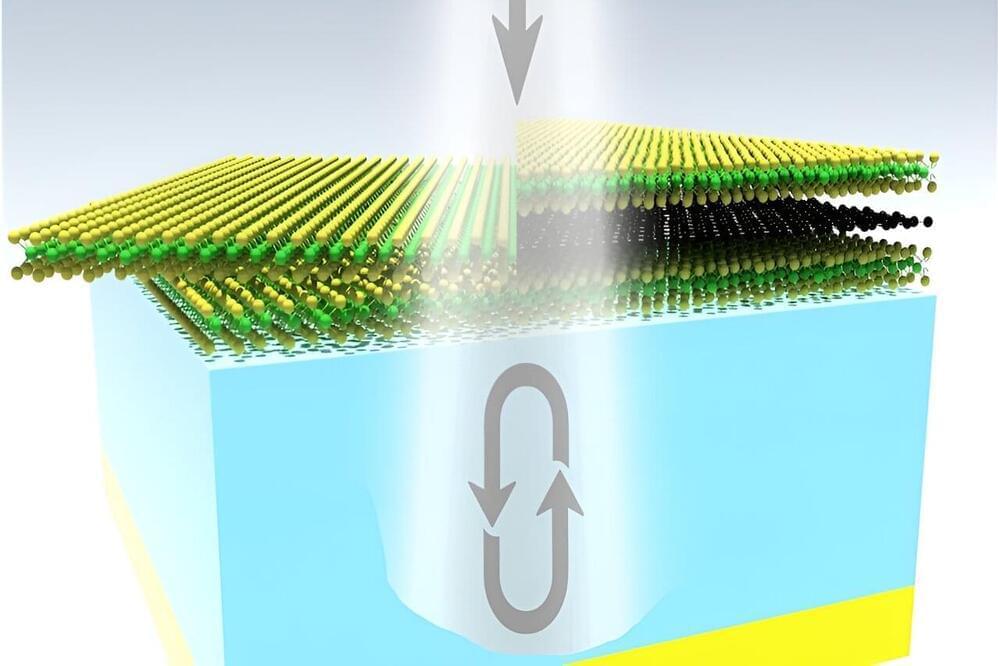

00:39 LK99 Superconductor Update.

03:03 First Images from Euclid Mission.

04:19 Starlink Satellites Leak Radio Waves.

06:13 New Cosmological Structure Formation Simulation.

08:27 An Ultrasound Platform to Levitate and Steer Drops.

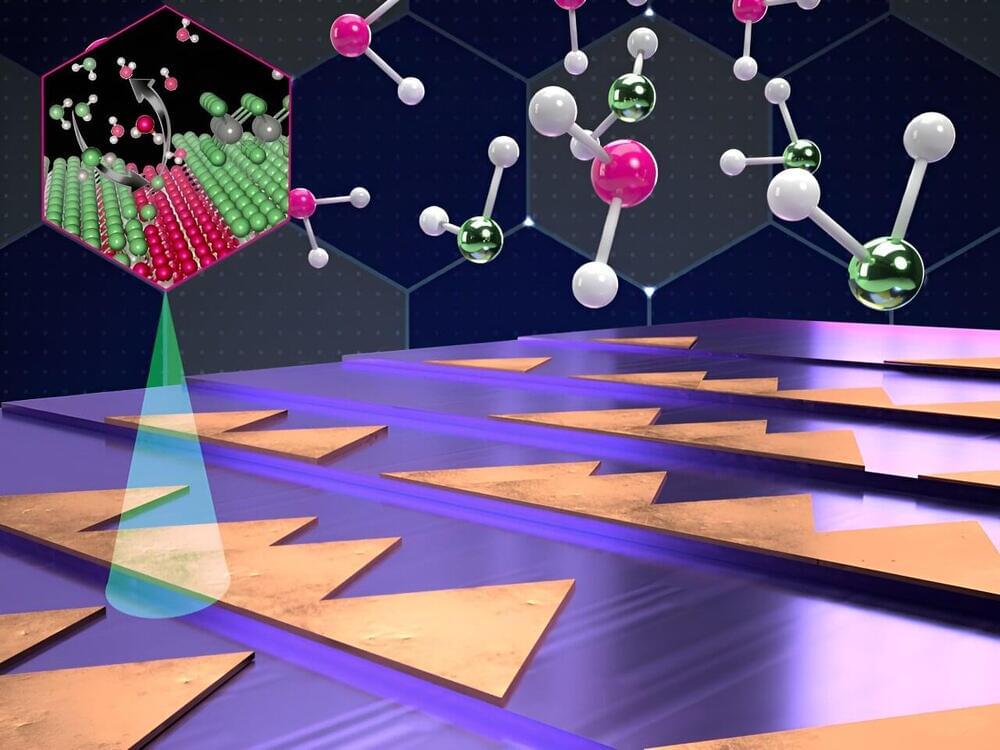

09:43 Bacteria That Make Recyclable Plastic.

11:02 Better Wireless Power Transfer.

13:02 A New Earthquake Precursor.

14:34 War Predictions.

16:00 Learn Science With Brilliant.

#science #sciencenews