Dr. Somrita Banerjee: “This is the first time AI has been used to help control a robot on the ISS. It shows that robots can move faster and more efficiently without sacrificing safety, which is essential for future missions where humans won’t always be able to guide them.”

How can AI-assisted robots help advance human space exploration? A recent study presented at the 2025 International Conference on Space Robotics set out to address this question. In it, a team of researchers investigated new ways to make robots in space more autonomous. The work could help scientists strengthen collaboration between humans and robots, especially as humanity begins settling on the Moon and, eventually, Mars.

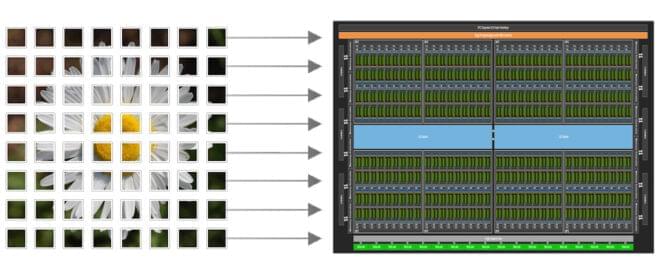

For the study, the researchers examined how a technique called machine learning-based warm starts could improve robot autonomy. In this approach, a machine learning model supplies a starting guess for the robot's trajectory planner, which then refines that guess into a safe path instead of starting from scratch. To test it, the researchers used Astrobee, a free-flying robot aboard the International Space Station (ISS), and ran the algorithm as the robot floated through the station in microgravity. The goal was to determine whether Astrobee could navigate the ISS without human intervention, relying solely on its algorithm to plan safe routes through the station. The researchers found that Astrobee successfully navigated the station's cramped interior with limited need for human intervention.