Most computers run on microchips, but what if we’ve been overlooking a simpler, more elegant computational tool all this time? In fact, what if we were the computational tool?

As crazy as it sounds, a future in which humans are the ones doing the computing may be closer than we think. In an article published in IEEE Access, Yo Kobayashi from the Graduate School of Engineering Science at the University of Osaka demonstrates that living tissue can be used to process information and solve complex equations, much as a conventional computer does.

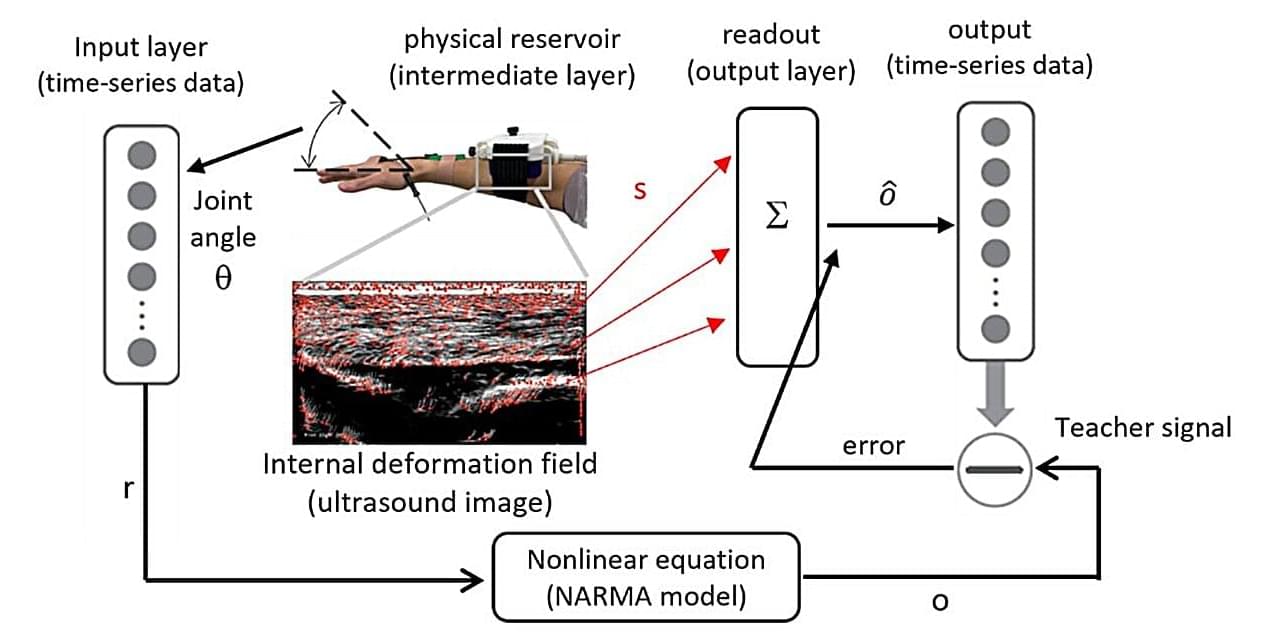

This achievement shows the power of a computational framework known as reservoir computing. In this approach, data are fed into a complex "reservoir" whose internal dynamics encode rich patterns. A readout model, typically a simple trained output layer, then learns to convert these patterns into meaningful outputs. The reservoir itself is never trained.
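To make this division of labour concrete, here is a minimal software sketch. It assumes that an echo state network (a fixed, random recurrent network of tanh units) stands in for the physical reservoir, so it is not the living-tissue setup from the study. The reservoir weights are drawn once and then frozen, and only a linear readout is fitted, using ridge regression. The reservoir size, scaling constants, random seeds, and the toy task of recalling the previous input are all illustrative assumptions.

```python
# Hypothetical toy example: a software echo state network standing in for a
# physical reservoir. All sizes, constants, and the task are assumptions.
import math
import random


def make_reservoir(n, input_scale, row_norm, seed):
    """Build a fixed, random reservoir; these weights are never trained."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-input_scale, input_scale) for _ in range(n)]
    w = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    # Rescale so the largest absolute row sum equals row_norm (< 1). Because
    # tanh is 1-Lipschitz, the state update becomes a contraction, so the
    # influence of old inputs fades over time (the "echo state" property).
    max_row = max(sum(abs(v) for v in row) for row in w)
    w = [[v * row_norm / max_row for v in row] for row in w]
    return w_in, w


def run_reservoir(w_in, w, inputs):
    """Drive the reservoir with a scalar input sequence and record its states."""
    n = len(w_in)
    x = [0.0] * n
    states = []
    for u in inputs:
        # x(t) = tanh(W x(t-1) + w_in * u(t)); the right side uses the old x.
        x = [math.tanh(sum(w[i][j] * x[j] for j in range(n)) + w_in[i] * u)
             for i in range(n)]
        states.append(x + [1.0])  # constant 1.0 acts as a bias feature
    return states


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def train_readout(states, targets, ridge):
    """Ridge regression: the readout is the only trained part of the system."""
    d = len(states[0])
    a = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(d)]
    return solve(a, b)


def delay_task_nmse(n=20, seed=0):
    """Toy task: recall the previous input, y(t) = u(t-1). Returns test NMSE."""
    rng = random.Random(seed)
    inputs = [rng.uniform(-0.5, 0.5) for _ in range(1200)]
    targets = [0.0] + inputs[:-1]
    w_in, w = make_reservoir(n, input_scale=1.0, row_norm=0.95, seed=seed + 1)
    states = run_reservoir(w_in, w, inputs)
    # Discard the first 100 steps (washout), train on the next 700, test on 400.
    train, test = range(100, 800), range(800, 1200)
    weights = train_readout([states[t] for t in train],
                            [targets[t] for t in train], ridge=1e-5)
    preds = [sum(wi * si for wi, si in zip(weights, states[t])) for t in test]
    truth = [targets[t] for t in test]
    mean = sum(truth) / len(truth)
    err = sum((p - y) ** 2 for p, y in zip(preds, truth))
    var = sum((y - mean) ** 2 for y in truth)
    return err / var


if __name__ == "__main__":
    print(f"test NMSE: {delay_task_nmse():.4f}")
```

Running the script prints the normalized mean squared error on held-out data. A value well below 1 (which is what always predicting the mean would score) indicates that the frozen reservoir's states retain enough information about past inputs for a trained linear readout to recover them. This is the same idea that lets a physical system, such as tissue, act as the reservoir: only the readout ever needs to be fitted.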