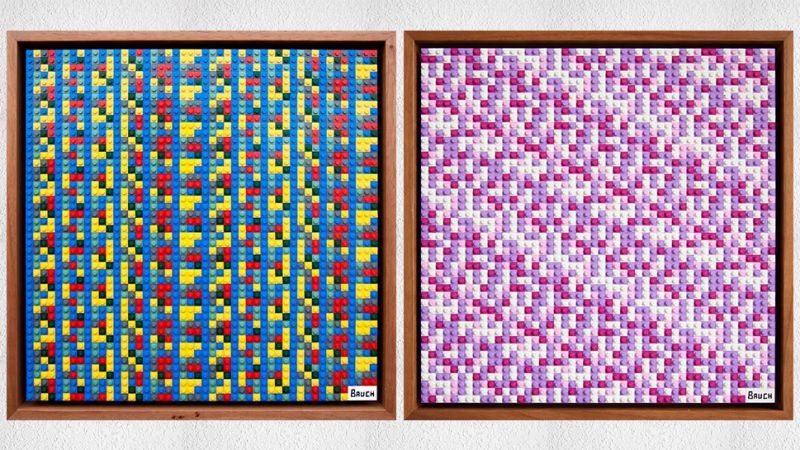

It has no inherent value and leaves observers swinging between fascination and anger. We’re talking about cryptocurrency, but also art. In a new series, artist Andy Bauch is bringing the two subjects together in works built from abstract patterns of Lego bricks. Each piece visually encodes the private key to a cryptocurrency wallet, and anyone who can decode one can steal the digital cash it holds.

Bauch started playing around with cryptocurrencies in 2013 and told us in an interview that he considers himself an enthusiast but not a “rabid promoter” of the technology. “I wasn’t smart enough to buy enough to have fuck-you money,” he said. In 2016, he began folding his interest in Bitcoin into his art practice.

His latest series, New Money, opens at LA’s Castelli Art Space on Friday. Bauch says that each piece in the series “is a secret key to various types of cryptocurrency.” In 2016 he bought different amounts of Bitcoin, Litecoin, and other altcoins and stored them in separate digital wallets. Each wallet is controlled by a private key, a string of letters and numbers. Bauch first fed that key into an algorithm that generated a pattern, then tweaked the algorithm here and there until it produced an image that appealed to him. After finalizing the works, he rigorously tested them in reverse to make sure that each one, run back through his formula, yields the correct private key.
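Bauch hasn’t published his formula, but the core idea (a key goes in, a pattern comes out, and the pattern can be run backward to recover the key) can be illustrated with a toy scheme. The sketch below is purely hypothetical: it assumes the key is written as hexadecimal, maps each of the 16 hex digits to one invented brick color, and lays the bricks out row by row. The names `PALETTE`, `key_to_grid`, and `grid_to_key` are made up for illustration and are not Bauch’s method.

```python
# Hypothetical 16-color palette: one brick color per hexadecimal digit.
# These color names are illustrative assumptions, not Bauch's actual palette.
PALETTE = [
    "black", "white", "red", "blue", "yellow", "green", "orange", "purple",
    "tan", "brown", "pink", "lime", "dark gray", "light gray", "dark blue", "dark red",
]
HEX_DIGITS = "0123456789abcdef"


def key_to_grid(hex_key: str, width: int = 8) -> list[list[str]]:
    """Encode: map each hex digit of a key to a brick color, laid out row by row."""
    digits = hex_key.lower()
    if any(d not in HEX_DIGITS for d in digits):
        raise ValueError("key must be hexadecimal")
    if len(digits) % width != 0:
        raise ValueError("key length must be a multiple of the grid width")
    colors = [PALETTE[HEX_DIGITS.index(d)] for d in digits]
    # Slice the flat list of colors into rows of `width` bricks each.
    return [colors[i:i + width] for i in range(0, len(colors), width)]


def grid_to_key(grid: list[list[str]]) -> str:
    """Decode ("testing in reverse"): read bricks row by row and recover the hex key."""
    return "".join(HEX_DIGITS[PALETTE.index(color)] for row in grid for color in row)


if __name__ == "__main__":
    # A dummy 64-digit key with no funds behind it, used only to show the round trip.
    example_key = "0123456789abcdef" * 4
    grid = key_to_grid(example_key)
    for row in grid:
        print(" | ".join(row))
    print("round trip ok:", grid_to_key(grid) == example_key)
```

Because every digit maps to exactly one color and back, aesthetic tweaks such as swapping the palette or changing the grid width still decode correctly, which is the property Bauch’s reverse testing checks for in his own, unpublished scheme.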