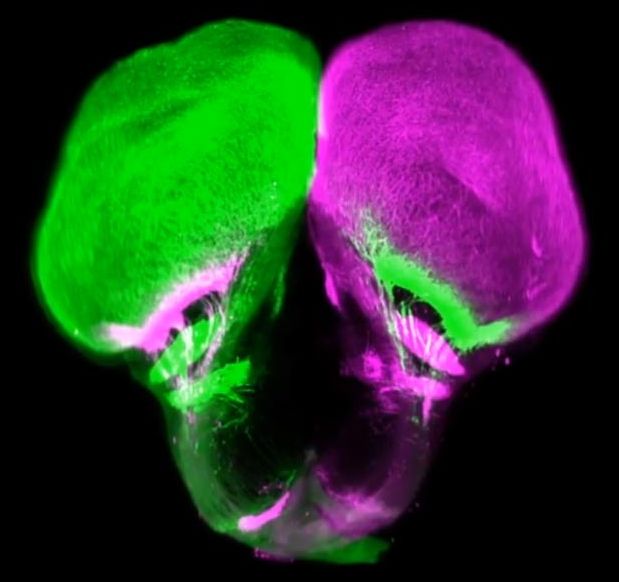

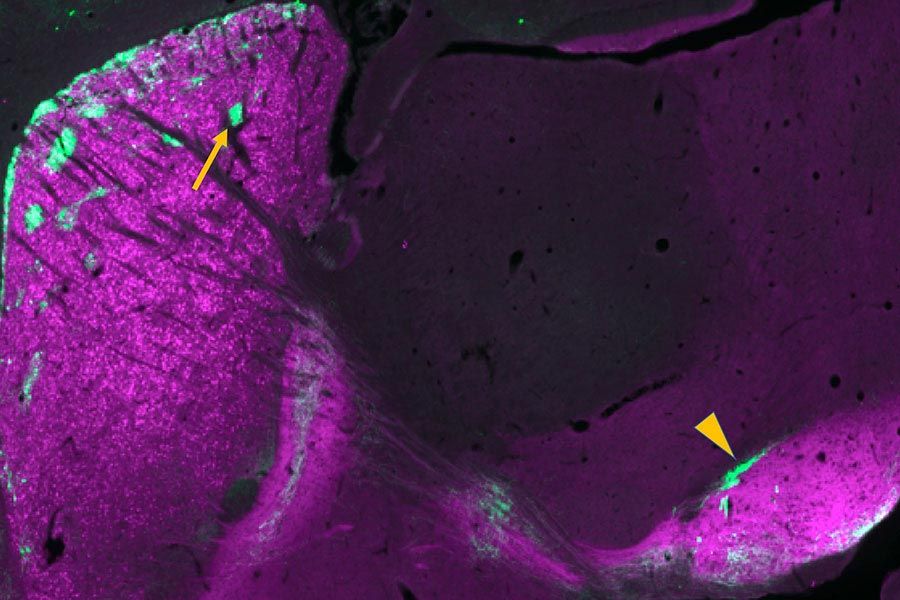

Controversy has shrouded the once-common plastics chemical BPA since studies started to highlight its links to a whole range of adverse health effects in humans, but recent research has also shown that its substitutes mightn't be all that safe either. A new study has investigated how these substitutes affect nerve cells in the adult brain, with the authors finding that they likely permanently disrupt signal transmission and also interfere with neural circuits involved in perception.

BPA, or bisphenol A, is a chemical that has been commonly used in food, beverage and other types of packaging for decades, but experts have grown increasingly concerned that it can leach into these consumables and affect human health in ways ranging from endocrine dysfunction to cancer. These concerns grew out of scientific studies reporting such links as far back as the 1990s, which in turn drove the rise of "BPA-free" plastics as a supposedly safer alternative.

One of those alternatives is bisphenol S (BPS), and while it allows plastic manufacturers to slap a BPA-free label on their packaging, more and more research is demonstrating that it mightn’t be much better for us. As just one example, a study last year showed through experiments on mice that just like BPA, BPS can alter the expression of genes in the placenta and likely fundamentally disrupt fetal brain development.