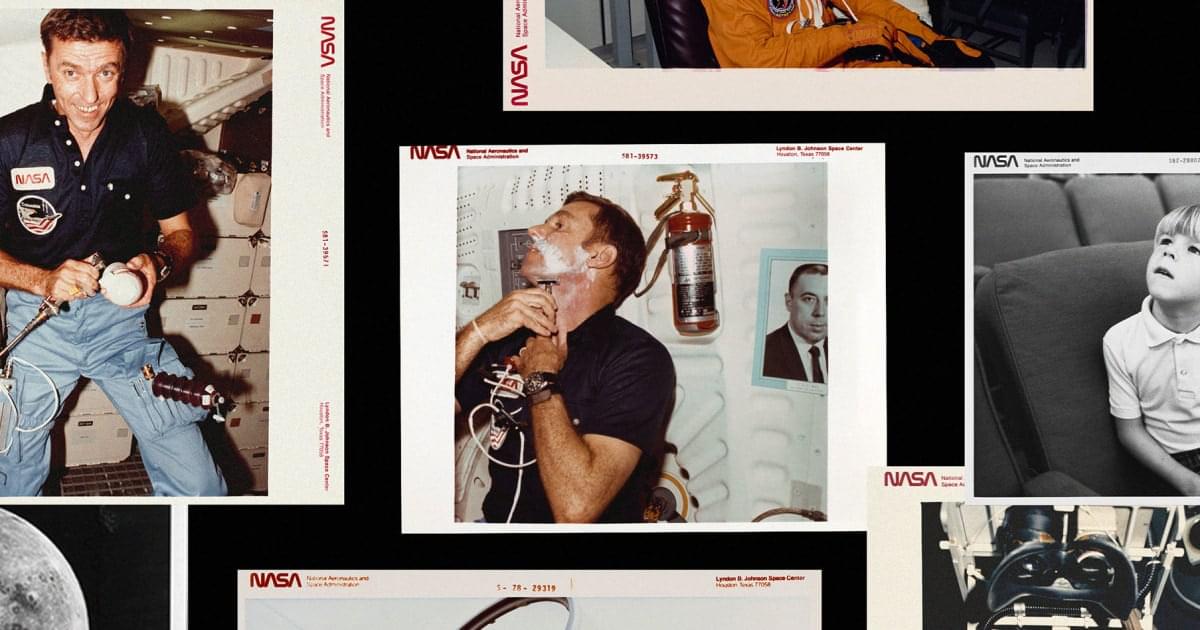

Traveling to space presents significant challenges to human health. Research has detailed a variety of detrimental effects on the body that may mirror accelerated aging, including loss of bone density, swelling of brain and eye nerves, and changes in gene expression. NASA’s study of identical twin astronauts Mark and Scott Kelly offered vital insights into these concerns by comparing Scott’s physical condition after he spent 340 days in space with that of Mark, who remained on Earth. Findings from this 2019 “twins study,” published in the journal Science, revealed that Scott experienced DNA damage, cognitive decline, and persistent telomere shortening, an indication of aging, even six months after the mission.

Recent research now indicates that stem cells show signs of aging during spaceflight up to ten times faster than their counterparts on Earth. Dr. Catriona Jamieson, director of the Sanford Stem Cell Institute at the University of California, San Diego, and a lead author of the new study, published in the journal Cell Stem Cell, articulated the significance of this finding. Stem cells are crucial for the development and repair of various tissues, and the loss of their youthful capacity could lead to grave health issues such as chronic diseases, neurodegeneration, and cancer.

This study arrives at a pivotal moment, as both government agencies and private companies are gearing up for long-duration missions to the moon and beyond. With interest in spaceflight surging, understanding the associated health risks has never been more urgent. Insights into this accelerated cellular aging could not only inform safer space travel but also enhance our understanding of biological processes on Earth.