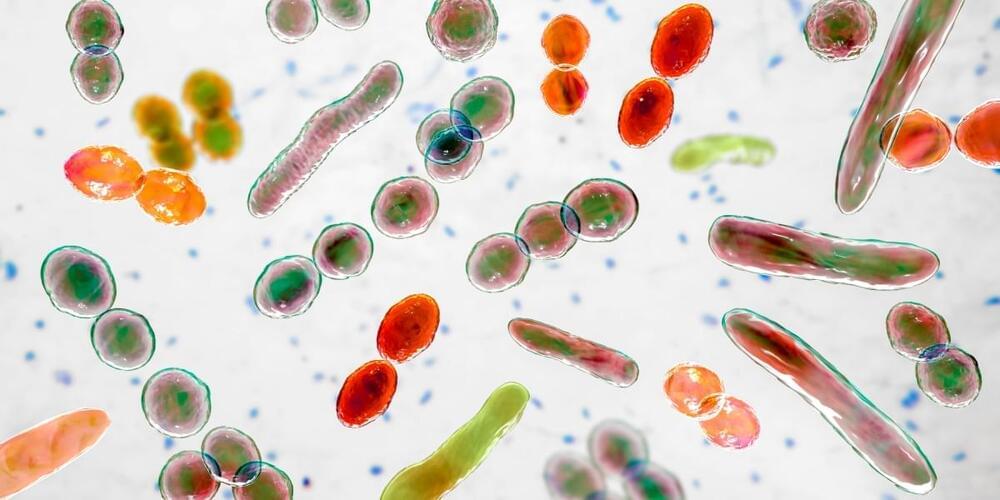

Human pancreas-on-a-chip (PoC) technology is rapidly advancing as a platform for complex in vitro modeling of islet physiology. This review summarizes current progress and evaluates whether this technology could be applied to clinical islet transplantation.

PoC microfluidic platforms have mainly demonstrated proof of principle for the long-term culture of islets, allowing islet function to be studied in a standardized format. Advances in microfluidic design, such as imaging-compatible biomaterials and integrated biosensor technology, may provide a future tool for predicting islet transplantation outcome. Progress in combining islets with other tissue types makes it possible to study diabetes interventions in a minimal in vitro equivalent of the physiological environment.

Although the field of PoC is still in its infancy, considerable progress in the development of functional systems has brought the technology to the verge of becoming a generally applicable tool that may be used to assess islet quality and to replace animal testing in the development of diabetes interventions.