
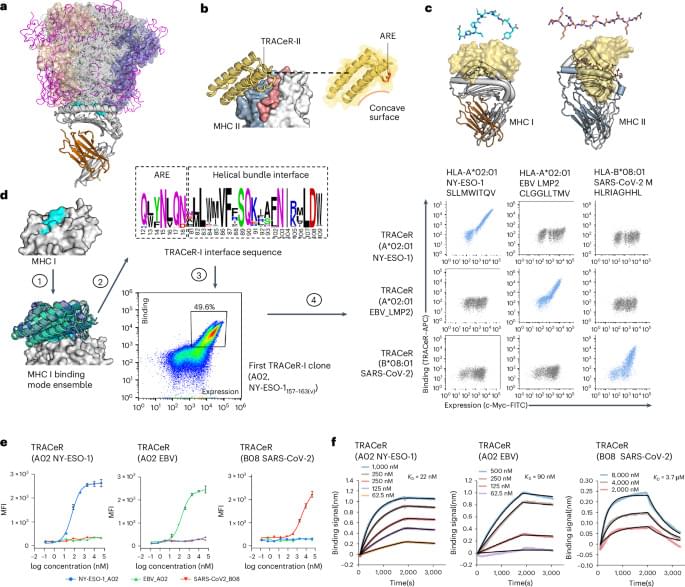

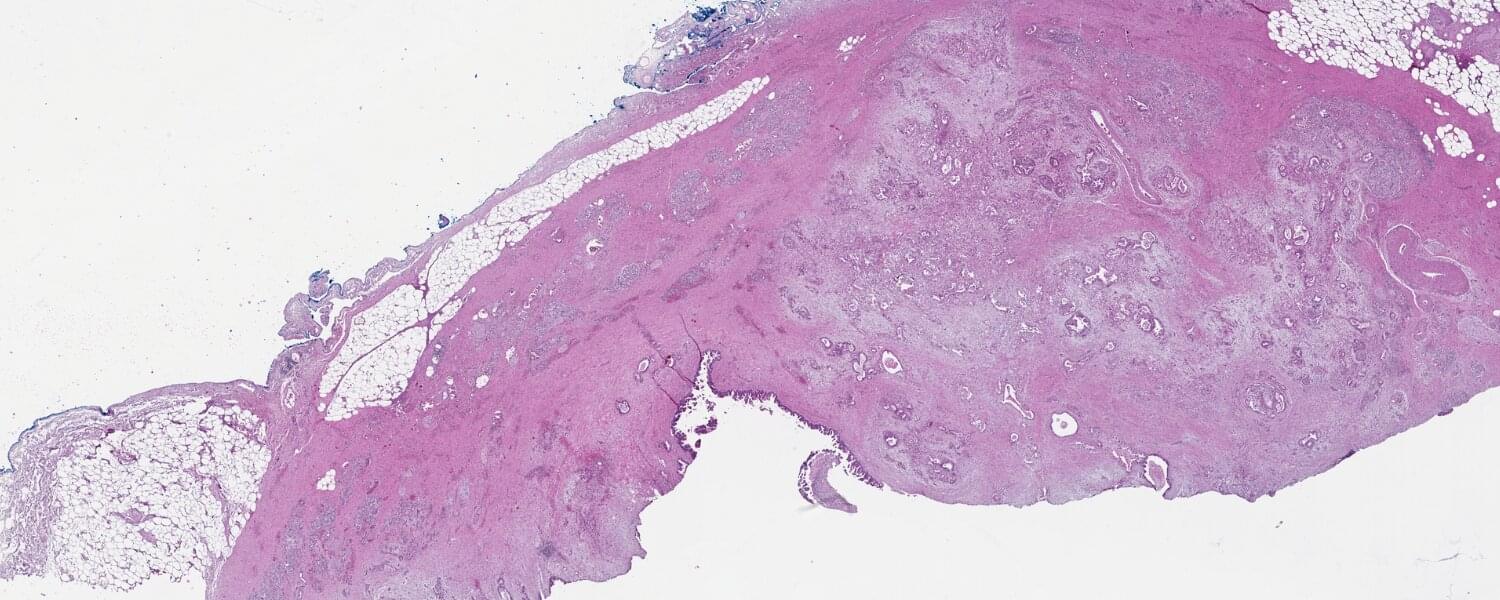

Van Andel Institute scientists and collaborators have developed a new method for identifying and classifying pancreatic cancer cell subtypes based on sugars found on the outside of cancer cells.

These sugars, called glycans, help cells recognize and communicate with each other. They also act as a cellular “signature,” with each subtype of pancreatic cancer cell possessing a different composition of glycans.

The new method, multiplexed glycan immunofluorescence, combines specialized software and imaging techniques to pinpoint the exact mix of pancreatic cancer cell subtypes that make up a tumor. In the future, this information may aid in earlier, more precise diagnosis.


Although ADHD was originally considered a disorder of childhood, it has been clear for years that it also affects adults. At least 60% of children diagnosed with ADHD continue to struggle with symptoms into adulthood, and the estimated prevalence of ADHD in adults is between 4% and 5%. As with children and teens, medication treatment is…

Gemini Plays Pokemon (early prototype) — Hello Darkness, My Old Friend |!faq!badges!choppy

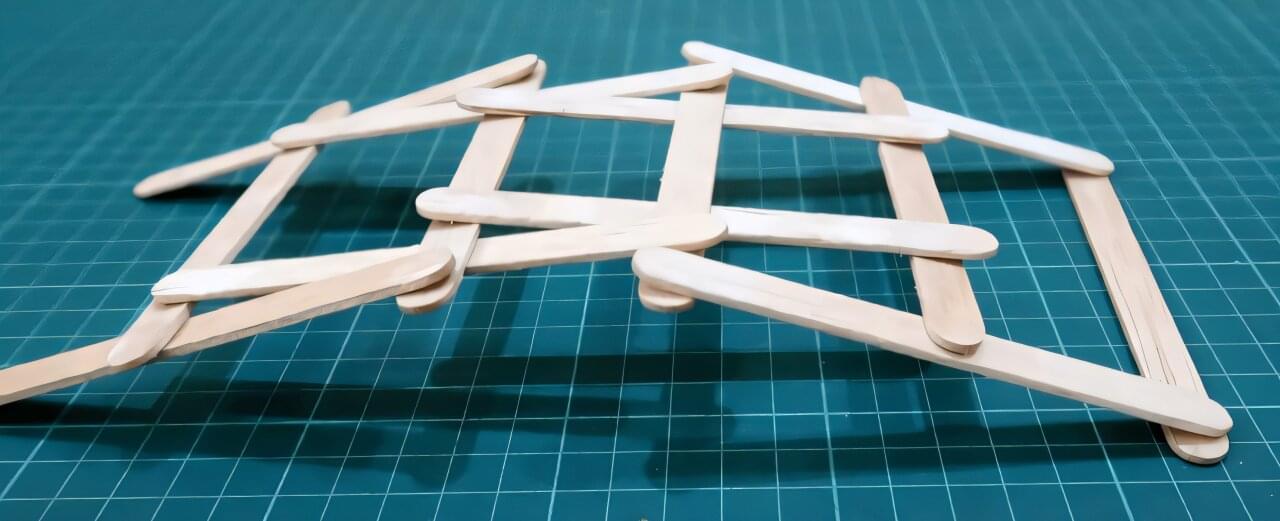

The concept of constructing a self-supporting structure made of rods—without the use of nails, ropes, or glue—dates back to Leonardo da Vinci. In the Codex Atlanticus, da Vinci illustrated a design for a self-supporting bridge across a river, which can be easily demonstrated using toothpicks, matches, or chopsticks. However, this design is fragile—pulling one of the rods or pushing the bridge from below can cause it to collapse.

In contrast, bird nests, which are also self-supporting structures made of rigid sticks and twigs, are remarkably stable despite continuous disturbances such as wind, ground vibrations, and the landing or takeoff of birds. What makes bird nests so sturdy?

This was the question at the center of a recent paper from L. Mahadevan and his team at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS). The research is published in the Proceedings of the National Academy of Sciences.