Speaking at the recent ILC Summit in Madrid, LEAF director Paul Spiegel discusses a near-future society in which people will exponentially increase their life expectancy. He covers work, leisure, and pensions, as well as the need for a new social contract.


Facebook just announced a YouTube competitor called Watch

Facebook Watch will let users discover videos outside of their own feed more easily and let them follow favorite content creators.

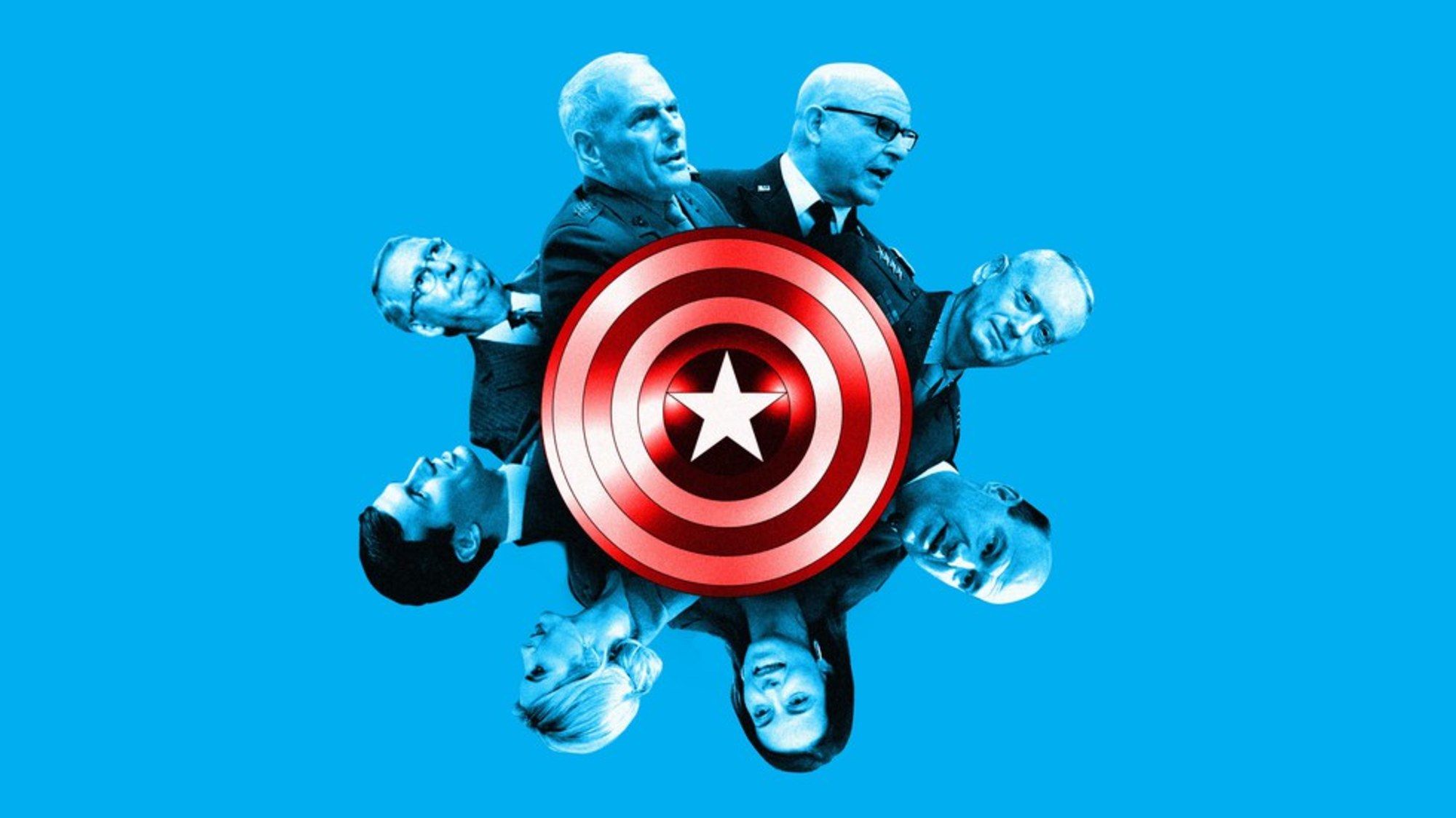

1 big thing: The Committee to Save America

Here’s one of the most intriguing — and consequential — theories circulating inside the White House: The generals, the New Yorkers and Republican congressional leaders see themselves as an unofficial committee to protect Trump and the nation from disaster.

This loose alliance is informal. But as one top official told me: “If you see a guy about to stab someone with a knife, you don’t need to huddle to decide to grab the knife.”

The theory was described to Jim VandeHei and me in a series of private chats with high-ranking officials:

24 Predictions for the Year 3000

In response to the Quora question "Looking 1,000 years into the future and assuming the human race is doing well, what will society be like?", David Pearce wrote:

The history of futurology to date makes sobering reading. Prophecies tend to reveal more about the emotional and intellectual limitations of the author than the future. […] But here goes…

Tech is at war with the world

America’s largely romantic view of its tech giants (Facebook, Google, Apple, Amazon, etc.) is turning abruptly into harsh scrutiny. Silicon Valley suddenly faces a much more intrusive hand from Washington, driven by rapidly accumulating vulnerabilities in nine big areas listed below.

Be smart: Today’s conditions — populist rage in the country, combined with growing suspicion of corporate behemoths — closely mirror those that gave us Teddy Roosevelt’s trust-busting of oil and steel at the turn of the 1900s, and the progressive reforms that ushered in today’s antitrust protections.