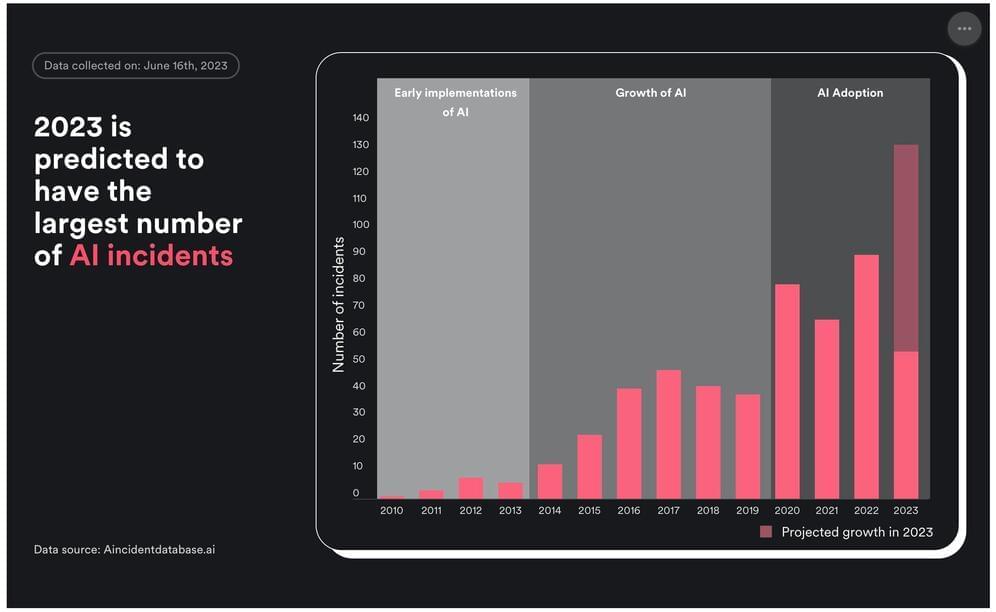

The first “AI incident” almost caused global nuclear war. More recent AI-enabled malfunctions, errors, fraud, and scams include deepfakes used to influence politics, bad health information from chatbots, and self-driving vehicles that endanger pedestrians.

The worst offenders, according to security company Surfshark, are Tesla, Facebook, and OpenAI, which together account for 24.5% of all known AI incidents so far.

In 1983, an automated early-warning system in the Soviet Union falsely indicated incoming nuclear missiles from the United States, almost leading to global conflict. That is the first incident in Surfshark’s report, though it is debatable whether an automated system from the 1980s counts as artificial intelligence.

In the most recent incident, the National Eating Disorders Association (NEDA) was forced to shut down its chatbot, Tessa, after it gave dangerous advice to people seeking help for eating disorders. Other recent incidents include a self-driving Tesla that failed to notice a pedestrian and broke the law by not yielding to a person in a crosswalk, and a Jefferson Parish resident who was wrongfully arrested by Louisiana police after a facial recognition system developed by Clearview AI allegedly mistook him for another individual.