Chinese scientists urge the authorities to monitor and prevent potential radioactive leakage.

A spinning black hole could provide enough energy to power civilization for trillions of years — and create the biggest bomb known to the universe. Using the rotation of a black hole to supercharge electromagnetic waves could create massive amounts of energy or equally massive amounts of destruction. Kurzgesagt explains what it would take to harness a black hole and the potential risks of the process.

This asteroid flyby was so close it was about halfway between the Earth and Moon. How’d we miss THAT? #SCINow

An asteroid approximately the size of a football field flew close by Earth only a day after it was first spotted this weekend. This near miss is a perfect example of an argument I’ve been making for some time: These are the asteroids we should worry about, not the so-called potentially hazardous rocks being tracked by NASA and periodically hyped by panicked headlines.

NASA scientists first observed the asteroid, now called 2018 GE3, on April 14, according to a database. It ventured as close as half the distance between Earth and the Moon, and was estimated to be between 47 and 100 meters in diameter (~150 and 330 feet). This is smaller than the asteroids governed by the NASA goal, which is to track 90 percent of near-Earth objects larger than 140 meters (~460 feet) in diameter. Nevertheless, it still could have caused a lot of damage if it had hit Earth.

If you read tabloids or Google news headlines, you probably hear about “potentially hazardous asteroids” all the time. But, like we’ve said before, those are not the asteroids you need to worry about. Potentially hazardous asteroids are those that NASA has determined could possibly hit the planet in the distant future, generally those within 20 lunar distances of Earth and 140 meters in diameter or larger.
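To put those thresholds in concrete terms, here is a minimal sketch that converts lunar distances to kilometers and applies the two cutoffs quoted above. The mean Earth-Moon distance of 384,400 km is a commonly cited average, and the function names are hypothetical helpers for illustration. The check is a simplification of the text's description, not NASA's formal classification procedure.

```python
# Mean Earth-Moon distance in kilometers (a commonly quoted average;
# the real distance varies over the Moon's orbit).
LUNAR_DISTANCE_KM = 384_400


def lunar_distances_to_km(n):
    """Convert a distance in lunar distances to kilometers."""
    return n * LUNAR_DISTANCE_KM


def is_potentially_hazardous(approach_ld, diameter_m):
    """Rough check using only the thresholds quoted in the text:
    within 20 lunar distances and at least 140 meters across."""
    return approach_ld <= 20 and diameter_m >= 140


# The 20-lunar-distance cutoff expressed in kilometers.
print(lunar_distances_to_km(20))

# 2018 GE3: passed at about half a lunar distance, at most ~100 m wide.
print(lunar_distances_to_km(0.5))
print(is_potentially_hazardous(0.5, 100))
```

Under these assumptions, 2018 GE3 fails the size cutoff even though it passed far inside the distance cutoff, which is the kind of object the tracking goal does not cover.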

Maybe one of those times where you shouldn’t have consulted a numerologist?

Silly fringe theories about Planet X—an imagined planet typically named Nibiru that is on course to hit or pass by Earth with disastrous consequences—are the kind of thing normally relegated to vanity press-published books or those tabloids you browse in the supermarket checkout aisle. On Wednesday, they made it into Fox News, with the added caveat that maybe some other Biblical catastrophe could surprise us instead.

The Planet X theory first emerged in 1995 and is usually evidenced by tortured interpretations of religious texts, with vague suppositions that NASA either hasn’t detected this ominous celestial body or is actively covering up its existence to prevent widespread panic. In the article filed to Fox’s website on Wednesday, the prophesied doomsday comes courtesy of a story in British rag the Daily Express citing numerologist David Meade’s interpretation of the Bible’s Revelation 12:1–2:

Is The Rapture finally here? One Christian numerologist says a biblical sign strongly suggests it.

The passage reads: “And a great sign appeared in heaven: a woman clothed with the sun, with the moon under her feet, and on her head a crown of 12 stars. She was pregnant and was crying out in birth pains and the agony of giving birth.”

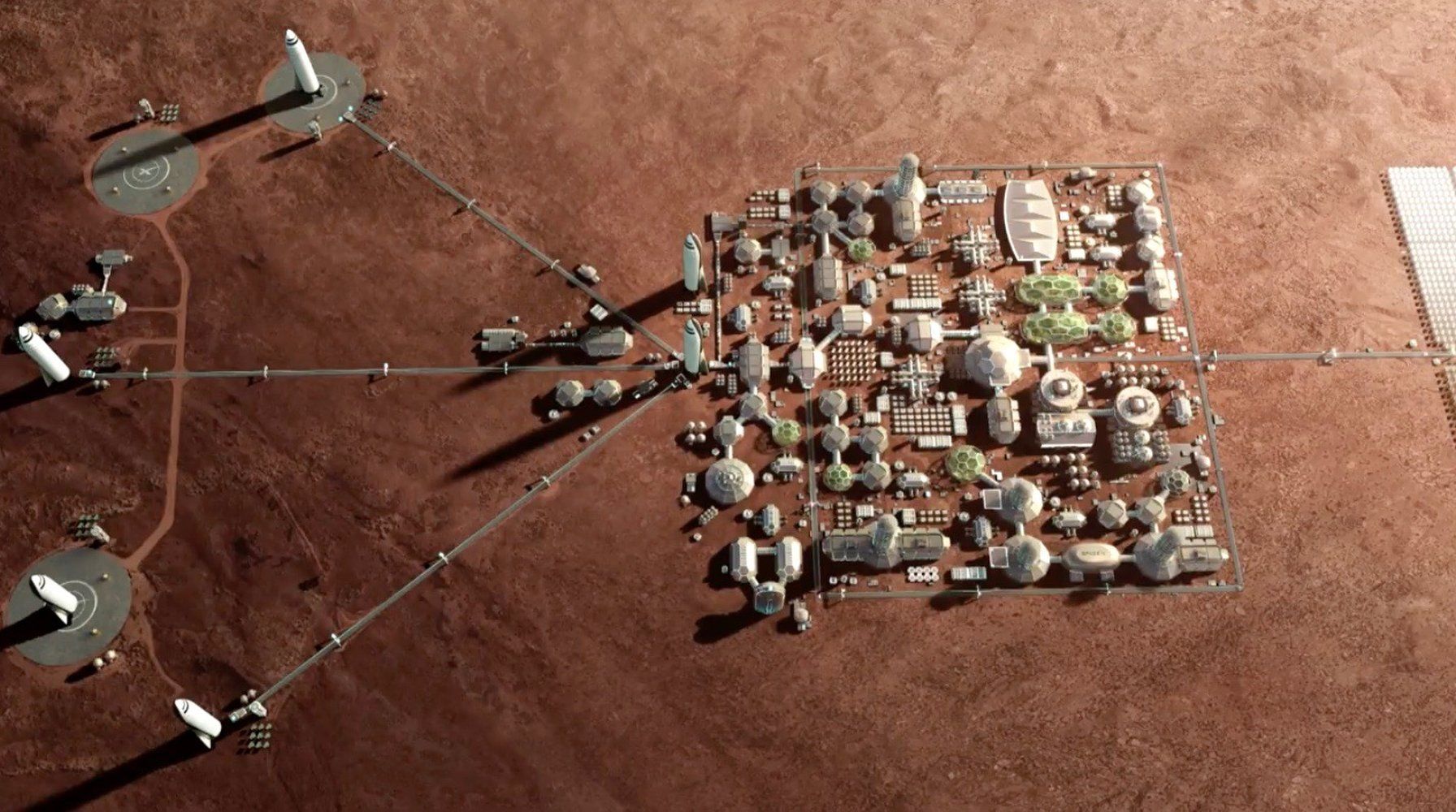

NASA wants to go to Mars. SpaceX wants to go to Mars. Michio Kaku wants humanity to go to Mars so we can avoid extinction. The rest of us just want to see our species actually set foot on Mars. But first, the moon.

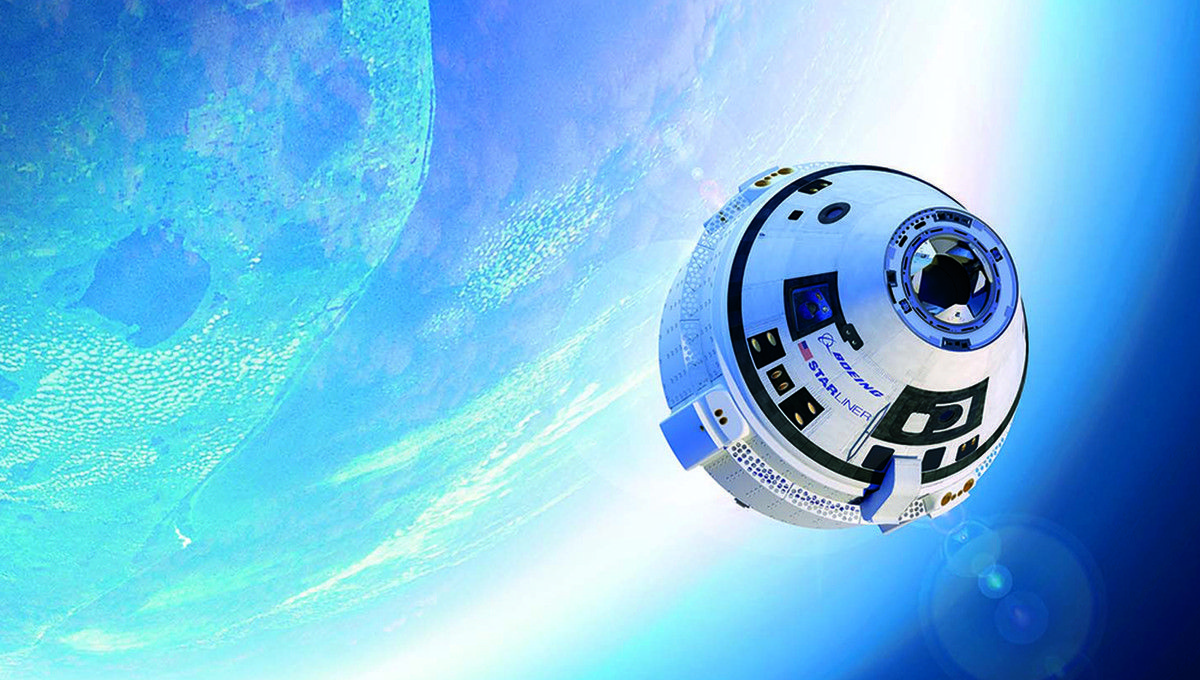

Think of the moon as a launchpad for the Red Planet. As LiveScience found out, Boeing’s Crew Space Transportation (CST)-100 Starliner is going to take advantage of our satellite as a blast-off point for the next frontier. Starliner (the name is about as sci-fi as you can get) is what happens when Boeing, which probably makes everyone think of airplanes, not spaceships, joins forces with NASA to develop a reusable space capsule that will be able to fly up to seven astronauts to the ISS. It will also be the world’s first commercial space vehicle.

Starliner is even autonomous, meaning crews will spend less time on training and take off sooner. It only needs one astronaut to fly it, or more like assist it in flight, using tablets and touch screens.

In its early life, the Earth would have been peppered nearly continuously by asteroids smashing into our young planet. These fiery collisions made our world what it is today. It may seem like things have changed since then, given the vast assortment of life and wide blue oceans, and things have indeed changed, at least in some respects. Earth still receives thousands of tons of matter from space, but now it arrives in the form of microscopic dust particles (as opposed to recurrent, energetic collisions).

Fortunately, in modern times, a large asteroid colliding with the surface of the Earth happens only very rarely. Nevertheless, it does happen from time to time.

As most are probably already aware, it is widely believed that an asteroid initiated the dinosaurs’ extinction some 65 million years ago. And more recently, the Russian Chelyabinsk meteor hit our planet in February of 2013. It entered at a shallow angle at 60 times the speed of sound. Upon contact with our atmosphere, it exploded in an air burst. The size of this body of rock (before it burned up and shattered) is estimated to be around 20 meters (across) and it weighed some 13,000 metric tons.
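For a sense of scale, the figures above can be turned into a rough kinetic energy estimate with E = ½mv². This is only a back-of-the-envelope sketch. It assumes a speed of sound of about 340 m/s (a sea-level value, so the entry speed is approximate) and uses the standard convention of 4.184 × 10¹² joules per kiloton of TNT. Neither figure comes from the article.

```python
# Assumption: approximate speed of sound at sea level, in m/s.
SPEED_OF_SOUND_M_S = 340.0
# Standard convention: energy released by one kiloton of TNT, in joules.
JOULES_PER_KILOTON_TNT = 4.184e12


def kinetic_energy_joules(mass_kg, speed_m_s):
    """Classical kinetic energy: E = 1/2 * m * v^2."""
    return 0.5 * mass_kg * speed_m_s ** 2


# Figures quoted in the text: 13,000 metric tons at 60 times the speed of sound.
mass_kg = 13_000 * 1_000
speed_m_s = 60 * SPEED_OF_SOUND_M_S

energy_j = kinetic_energy_joules(mass_kg, speed_m_s)
energy_kt = energy_j / JOULES_PER_KILOTON_TNT

print(f"{energy_j:.2e} J, roughly {energy_kt:.0f} kilotons of TNT")
```

With these inputs the estimate lands in the hundreds of kilotons. The exact number depends heavily on the assumed speed, since energy grows with the square of velocity.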

Humanity’s brutal and bellicose past provides ample justification for pursuing settlements on the moon and Mars, Elon Musk says.

The billionaire entrepreneur has long stressed that he founded SpaceX in 2002 primarily to help make humanity a multiplanet species — a giant leap that would render us much less vulnerable to extinction.

Human civilization faces many grave threats over the long haul, from asteroid strikes and climate change to artificial intelligence run amok, Musk has said over the years. And he recently highlighted our well-documented inability to get along with each other as another frightening factor.

Recent headlines have contained lots of asteroid-nuking talk. There’s a team of Russian scientists zapping mini asteroids in their lab, and supposedly NASA is thinking about a plan that would hypothetically involve nuking Bennu should it threaten Earth in 2135.

It’s true that NASA is drafting up ideas on how one might nuke an incoming asteroid, a theoretical plan called HAMMER, or the Hypervelocity Asteroid Mitigation Mission for Emergency Response, as we’ve reported. But scientists probably won’t need to use such a response on the “Empire State Building-sized” asteroid 101955 Bennu, which is set to pass close to Earth in 2135. Diverting such a threat could be much, much easier.

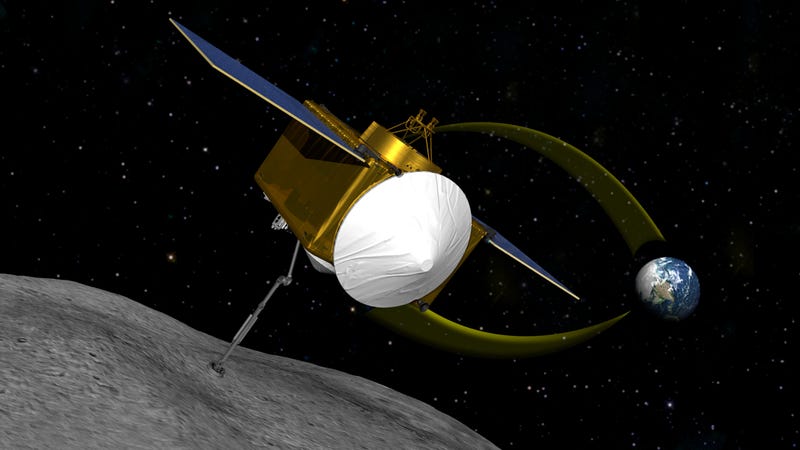

“Even just painting the surface a different color on one half would change the thermal properties and change its orbit,” Michael Moreau, NASA’s OSIRIS-REx Flight Dynamics System Manager, told Gizmodo. That would involve literally sending a spacecraft to somehow change the color of some of the asteroid.

There’s lots we don’t know about asteroids just yet, which is why NASA has sent the probe OSIRIS-REx toward Bennu. This mission aims to scoop up and return a sample of the rock in 2023.

There is a minuscule chance, around 1 in 2,700 odds, that Bennu will strike Earth in 2135, reports the Washington Post. The rock isn’t big enough to end humanity, but it could cause some major damage. The OSIRIS-REx spacecraft will study the rock more, and NASA will continue to collect data to either rule out the chance of an impact or increase the odds.

But don’t worry about Bennu yet. Should the odds of a Bennu strike grow too high, the laws of physics will allow for a much easier solution than nuking. We could just splash it with some paint.

The sun pelts everything in the solar system with a slew of tiny particles, for example. This imparts a little bit of pressure. These particles are of no consequence to our own orbit, since Earth is incredibly massive, but Bennu has only around 13 times the mass of the Great Pyramid of Giza. That’s very light, comparatively. Given the 120 years or so we’ve got and the amount of distance Bennu has left to travel before its nearby approach, if scientists could make some of it more susceptible to the solar radiation, that would alter its path slightly, enough that it would miss us. Doing so would require changing part of its surface so it absorbs more radiation, for example by covering one side of it with paint. Scientists first need to better study its orbit around the sun to determine the best course of action.

All that is to say, as usual, we’re not about to be hit by the giant asteroid in the headlines.

There are asteroids we need to worry about, of course. But as we’ve reported before, we’re not tracking them. The government has only required that NASA track asteroids larger than a football field or so. Something smaller could go under the radar and cause significant local damage without the 120 years of warning Bennu has afforded us.

The thought of nuking asteroids makes for great science fiction. But instead, you should spend more time upset that we don’t know what smaller asteroids are threatening us, rather than worry about the ones scientists are tracking that could be diverted more easily.