
Cyberattacks can snare workflows, put vulnerable client information at risk, and cost corporations and governments millions of dollars. A botnet, a network of devices infected by malware and controlled by an attacker, can be particularly catastrophic. A new Georgia Tech tool automates the malware removal process, saving engineers hours of work and companies money.

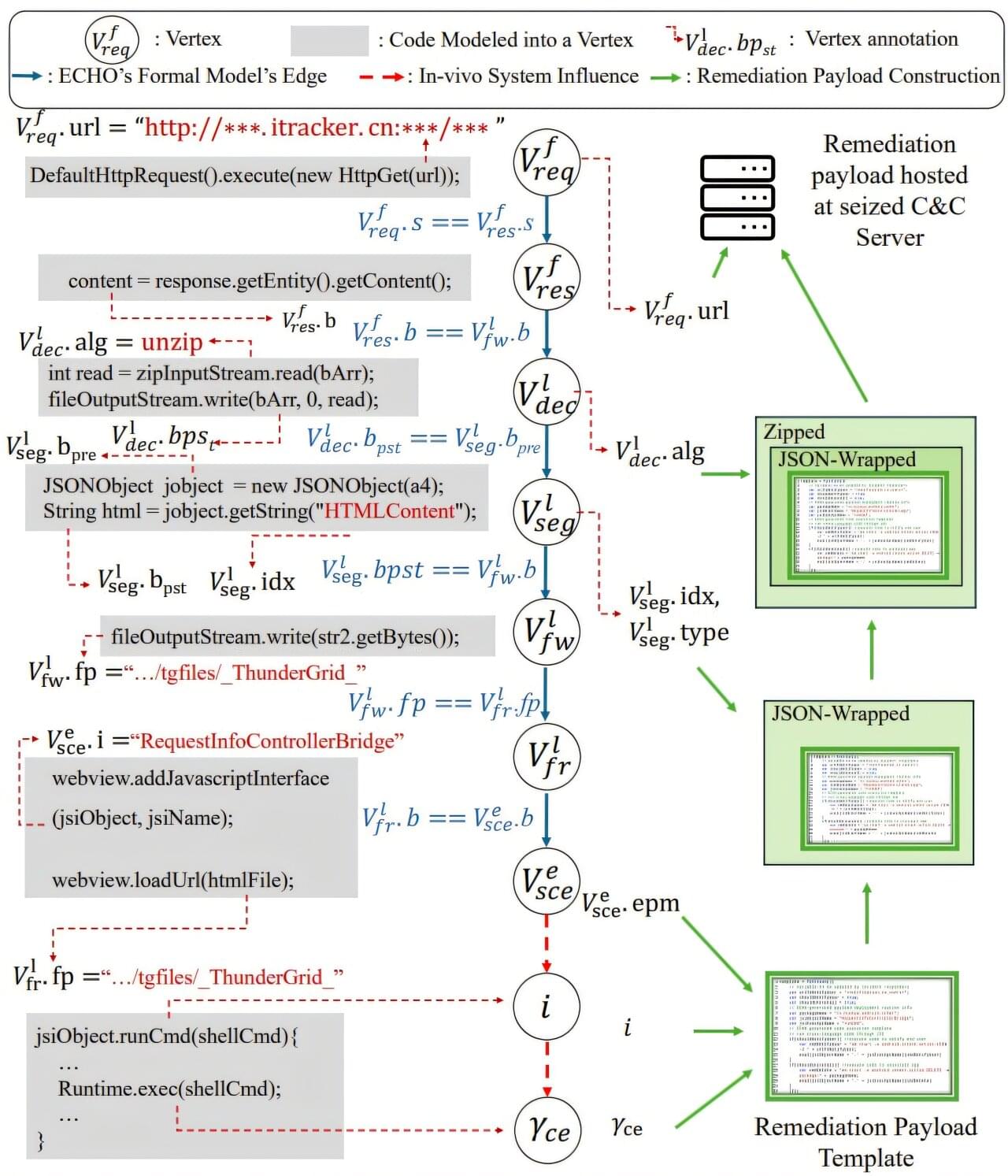

The tool, ECHO, turns malware against itself by exploiting its built-in update mechanisms and preventing botnets from rebuilding. ECHO is 75% effective at removing botnets. Malware removal that used to take days or weeks can now be completed in a few minutes. Once a security team realizes its system is compromised, it can deploy ECHO, which works fast enough to prevent the botnet from taking down an entire network.

“Understanding the behavior of the malware is usually very hard with little reward for the engineer, so we’ve made an automatic solution,” said Runze Zhang, a Ph.D. student in the School of Cybersecurity and Privacy (SCP) and the School of Electrical and Computer Engineering.


Without coordinated action, genomic data could be exploited for surveillance, discrimination, or even bioterrorism. Current protections are fragmented, and vital collaboration between disciplines is lacking. Key to successful prevention will be interdisciplinary cooperation between computer scientists, bioinformaticians, biotechnologists, and security professionals – groups that rarely work together but must align.

Our research lays the foundations for improving biosecurity by providing a single, clear list of all the possible threats in the entire next-generation sequencing process.

The paper also recommends practical solutions, including secure sequencing protocols, encrypted storage, and AI-powered anomaly detection, creating a foundation for much stronger cyber-biosecurity.

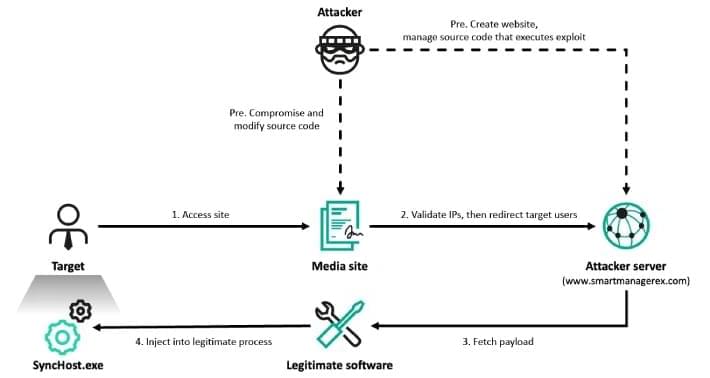

Cybersecurity researchers have revealed that Russian military personnel are the target of a new malicious campaign that distributes Android spyware under the guise of the Alpine Quest mapping software.

“The attackers hide this trojan inside modified Alpine Quest mapping software and distribute it in various ways, including through one of the Russian Android app catalogs,” Doctor Web said in an analysis.

The trojan has been found embedded in older versions of the software and propagated as a freely available variant of Alpine Quest Pro, a paid offering that removes advertising and analytics features.

Next-generation DNA sequencing (NGS), the same technology that is powering the development of tailor-made medicines, cancer diagnostics, infectious disease tracking, and gene research, could become a prime target for hackers.

A study published in IEEE Access highlights growing concerns over how this powerful sequencing tool—if left unsecured—could be exploited for data breaches, privacy violations, and even future biothreats.

Led by Dr. Nasreen Anjum from the University of Portsmouth’s School of Computing, it is the first comprehensive research study of cyber-biosecurity threats across the entire NGS workflow.