Applying mathematics to a new understanding of the world, life, and information.

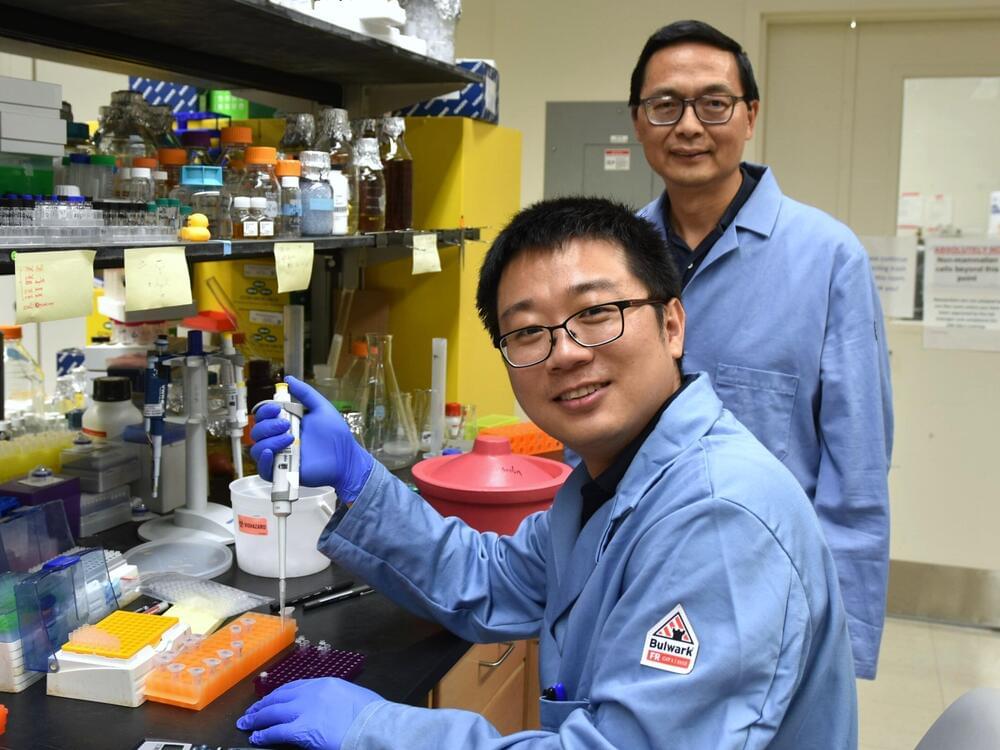

Dr. David Spivak introduces himself as a keynote speaker at the 17th Annual Artificial General Intelligence Conference in Seattle and shares his lifelong passion for math. He discusses his journey from feeling insecure about the world as a child to grounding his understanding in mathematics.

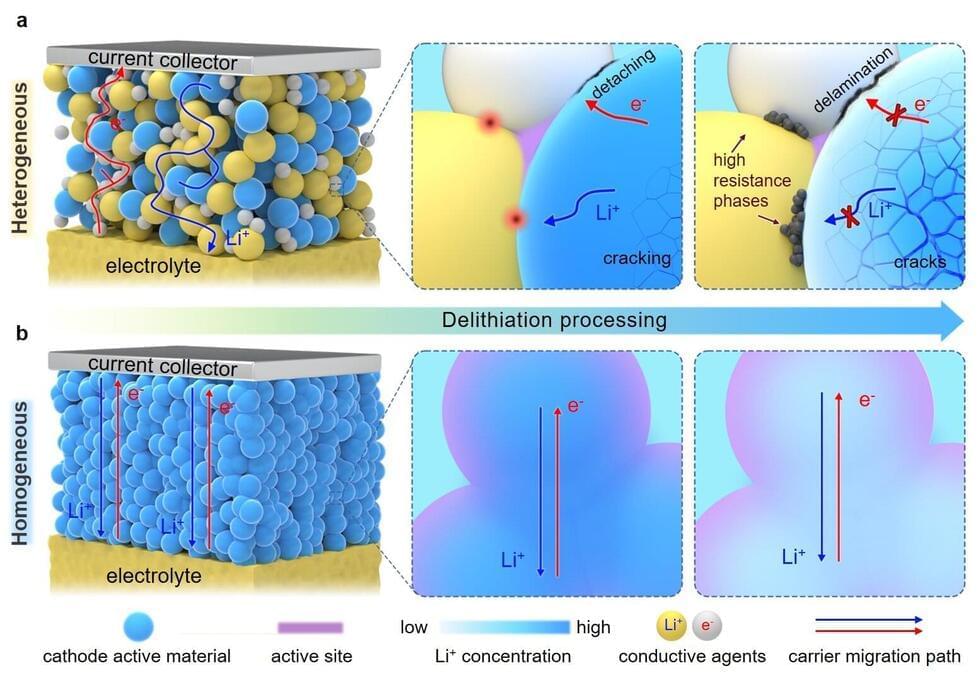

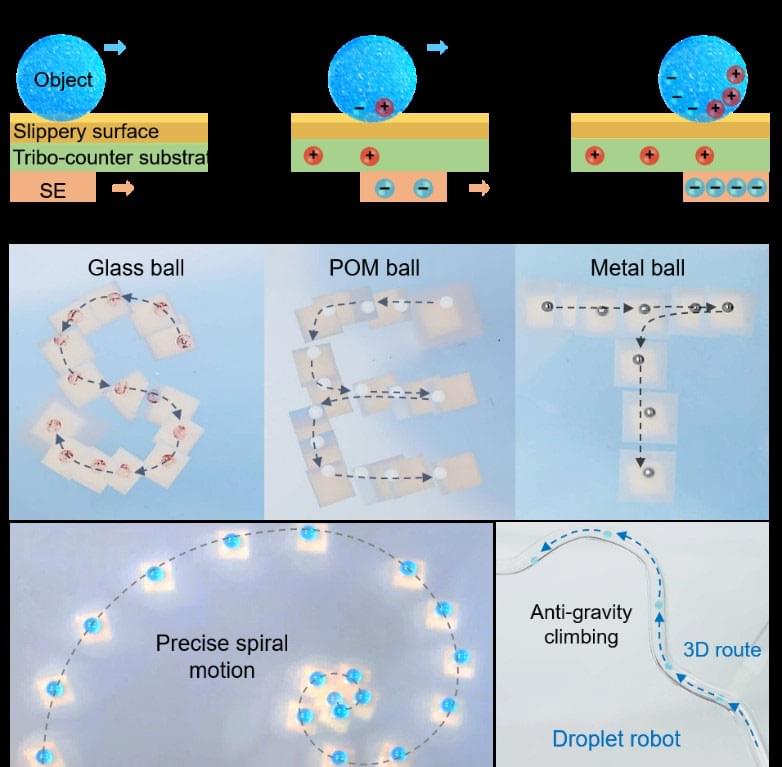

Dr. Spivak is the Secretary of the Board at the Topos Institute and serves on the Topos staff as Senior Scientist and Institute Fellow, following an appointment as founding Chief Scientist. Since receiving his PhD from UC Berkeley in 2007, he has worked to bring category-theoretic ideas into science, technology, and society through novel mathematical research and collaboration with scientists from disciplines including Materials Science, Chemistry, Robotics, Aeronautics, and Computing. His mission at Topos is to help develop the ability for people, organizations, and societies to see more clearly, and hence to serve, the systems that sustain them.

For more information and registration, please visit the Conference website: https://agi-conf.org/2024/

#AGI #AGI24 #AI #Mathematics