Aging is a natural process that affects all living organisms, prompting gradual changes in their behavior and abilities. Past studies have highlighted several physiological factors that can contribute to aging, including the body’s immune responses, an imbalance between the production of reactive oxygen species (i.e., free radicals) and antioxidants, and sleep disturbances.

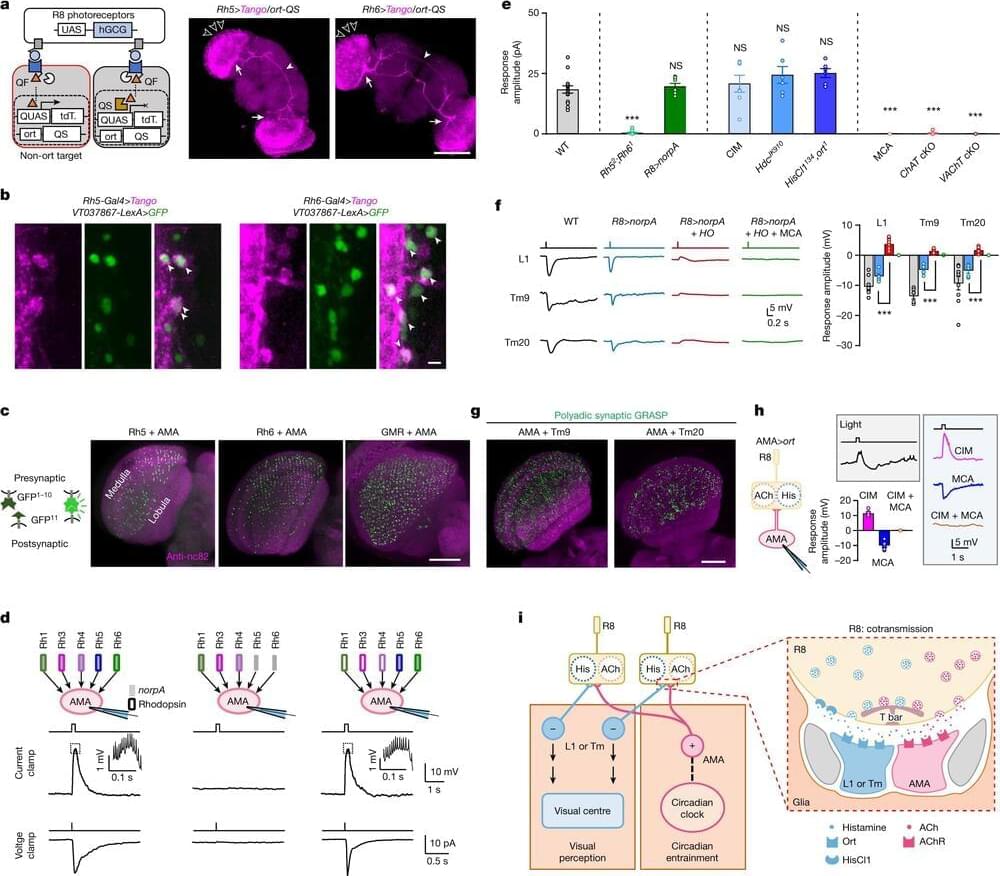

While the link between aging and each of these factors is well documented, the connection among the factors themselves is still poorly understood. Researchers at Washington University in St. Louis recently identified an immune molecule that could play a key role in modulating the aging process and the lifespan of living organisms.

Their paper, published in Neuron, was inspired by two independent research efforts at the university.