Scientists at the U.S. Department of Energy’s (DOE) Brookhaven National Laboratory have developed a novel artificial intelligence (AI)-based method to tame the flood of data generated by particle detectors at modern accelerators. The custom-built algorithm uses a neural network to intelligently compress collision data, adapting automatically to the density, or “sparsity,” of the signals it receives.

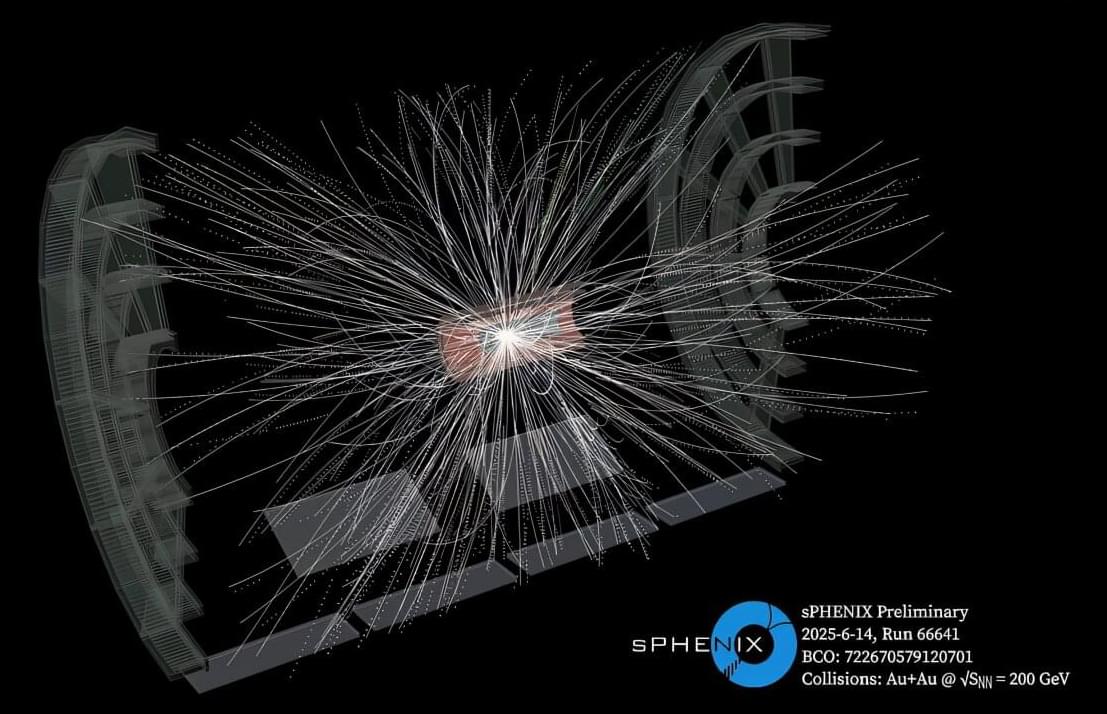

As described in a paper just published in the journal Patterns, the scientists used simulated data from sPHENIX, a particle detector at Brookhaven Lab’s Relativistic Heavy Ion Collider (RHIC), to demonstrate the algorithm’s potential to handle trillions of bits of detector data per second while preserving the fine details physicists need to explore the building blocks of matter.

The algorithm will help physicists gear up for a new era of streaming data acquisition, in which every collision is recorded without pre-selecting which ones might be of interest. Recording every collision will vastly expand the potential for more accurate measurements and unanticipated discoveries.