A new study from the University at Albany indicates that artificial intelligence systems may organize information in far more intricate ways than previously thought. The study, “Exploring the Stratified Space Structure of an RL Game with the Volume Growth Transform,” has been posted online as a preprint on arXiv.

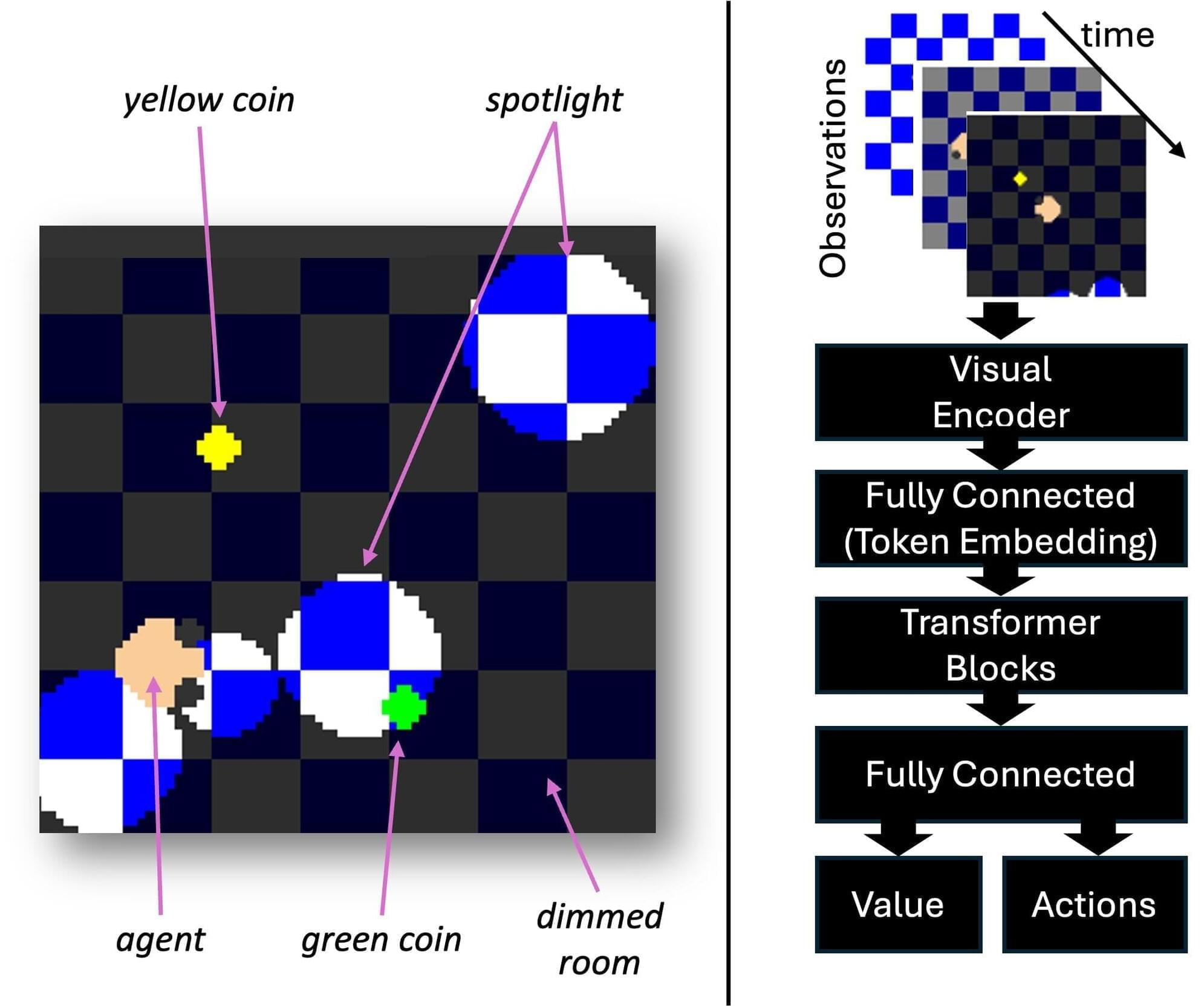

For decades, scientists have commonly assumed that neural networks encode data on smooth, low-dimensional surfaces known as manifolds. But UAlbany researchers found that a transformer-based reinforcement-learning model instead organizes its internal representations in stratified spaces: geometric structures made up of multiple interconnected regions, each with its own dimension. Their findings mirror recent results in large language models, suggesting that stratified geometry may be a fundamental feature of modern AI systems.

“These models are not living on simple surfaces,” said Justin Curry, associate professor in the Department of Mathematics and Statistics in the College of Arts and Sciences. “What we see instead is a patchwork of geometric layers, each with its own dimensionality. It’s a much richer and more complex picture of how AI understands the world.”