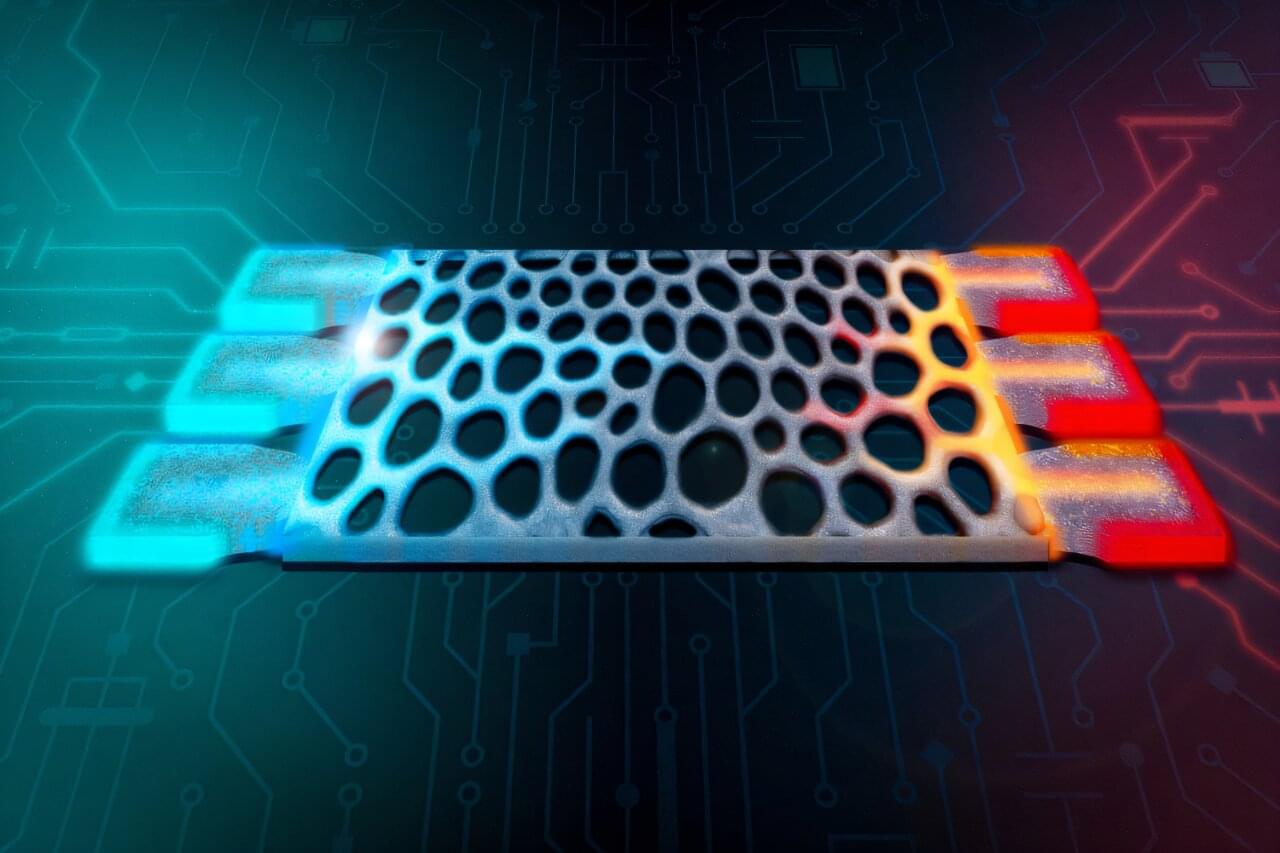

MIT researchers have designed silicon structures that can perform calculations in an electronic device using excess heat instead of electricity. These tiny structures could someday enable more energy-efficient computation. In this computing method, input data are encoded as a set of temperatures using the waste heat already present in a device.

The flow and distribution of heat through a specially designed material form the basis of the calculation. The output is then represented by the power collected at the other end of the structure, a thermostat held at a fixed temperature.

The researchers used these structures to perform matrix-vector multiplication with more than 99% accuracy. Matrix multiplication is the fundamental mathematical operation that machine-learning models such as large language models (LLMs) use to process information and make predictions.
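To make the idea concrete, here is a minimal sketch of how heat flow can stand in for a matrix-vector product. It assumes an idealized linear model in which the power arriving at each output is a weighted sum of the input temperatures, measured relative to the fixed-temperature end. The weights `G`, the input values `T_in`, and the `relative_accuracy` score are all hypothetical illustrations; the article does not give the researchers' actual structures, values, or accuracy metric.

```python
def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of numbers)."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def relative_accuracy(approx, exact):
    """Score an approximate result as 1 - (L2 error / L2 norm of the exact result).

    This is one common way to score an analog computation; it is an
    assumption here, not the metric reported by the researchers.
    """
    err = sum((a - e) ** 2 for a, e in zip(approx, exact)) ** 0.5
    norm = sum(e ** 2 for e in exact) ** 0.5
    return 1.0 - err / norm


# Hypothetical effective thermal conductances in W/K: entry G[i][j] links
# input j to output i. In this idealized picture they play the role of
# the matrix being multiplied.
G = [
    [0.5, 0.2, 0.1],
    [0.1, 0.4, 0.3],
]

# Hypothetical input data, encoded as temperatures in kelvin above the
# fixed-temperature output end.
T_in = [3.0, 1.0, 2.0]

# Power in watts collected at each output: the matrix-vector product G @ T_in.
P_out = matvec(G, T_in)
print(P_out)
```

In this toy model the "computation" is just the physics of linear heat conduction: choosing the material's geometry corresponds to choosing the entries of `G`, and reading the collected power corresponds to reading the result vector.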