The advancement of artificial intelligence (AI) algorithms has opened new possibilities for the development of robots that can reliably tackle various everyday tasks. Training and evaluating these algorithms, however, typically requires extensive efforts, as humans still need to manually label training data and assess the performance of models in both simulations and real-world experiments.

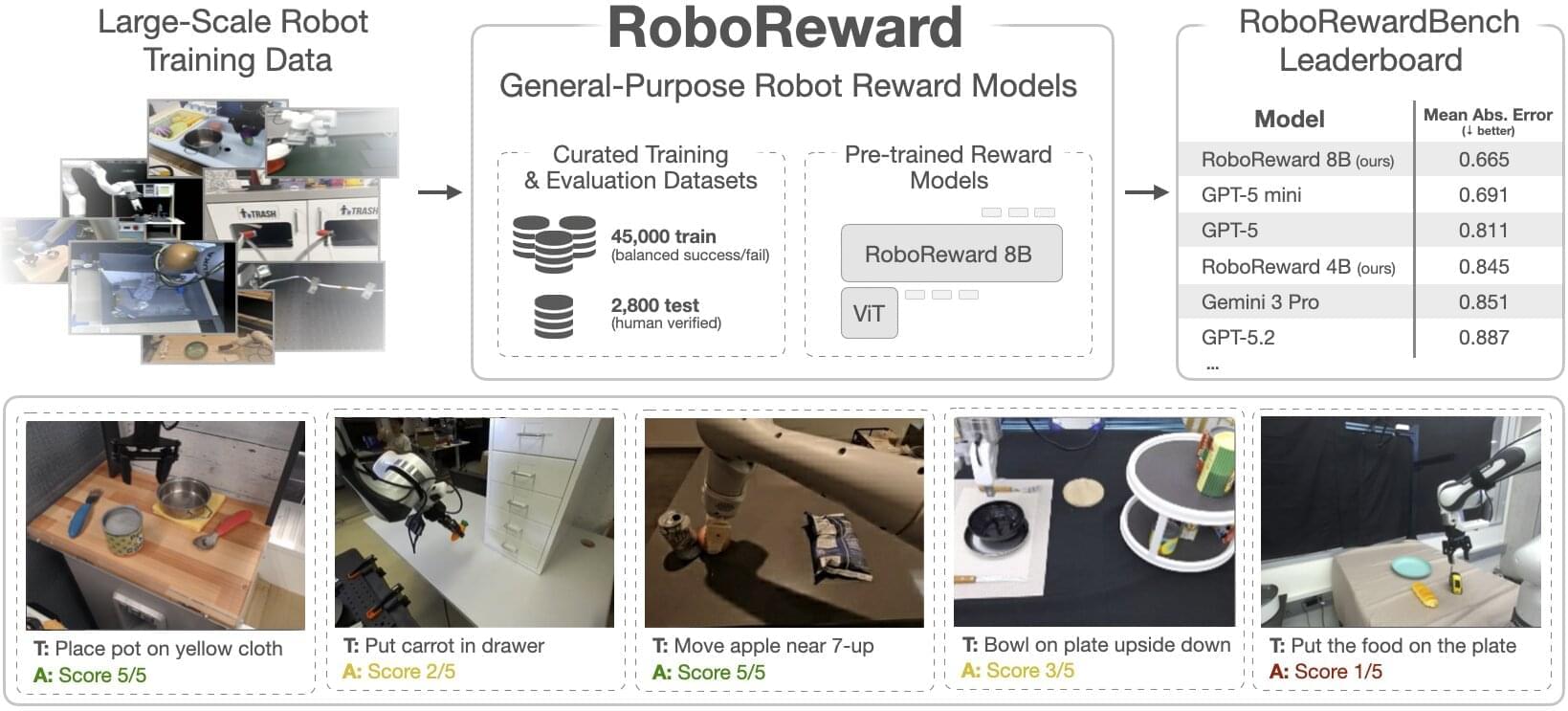

Researchers at Stanford University and UC Berkeley have introduced RoboReward, a dataset for training and evaluating AI algorithms for robotics applications, specifically vision-language reward-based models (VLMs).

Their paper, published on the arXiv preprint server, also presents RoboReward 4B and 8B, two new VLMs that were trained on this dataset and outperform other models introduced in the past.