Advanced artificial intelligence (AI) tools, including conversational agents built on large language models (LLMs), such as ChatGPT, have become increasingly widespread. These tools are now used by many people worldwide for both professional and personal purposes.

Users are now also asking AI agents everyday questions, a number of which carry ethical and moral nuances. Giving these agents the ability to distinguish between what is generally considered ‘right’ and ‘wrong’, so that they can be designed to provide only ethically and morally sound responses, is thus of the utmost importance.

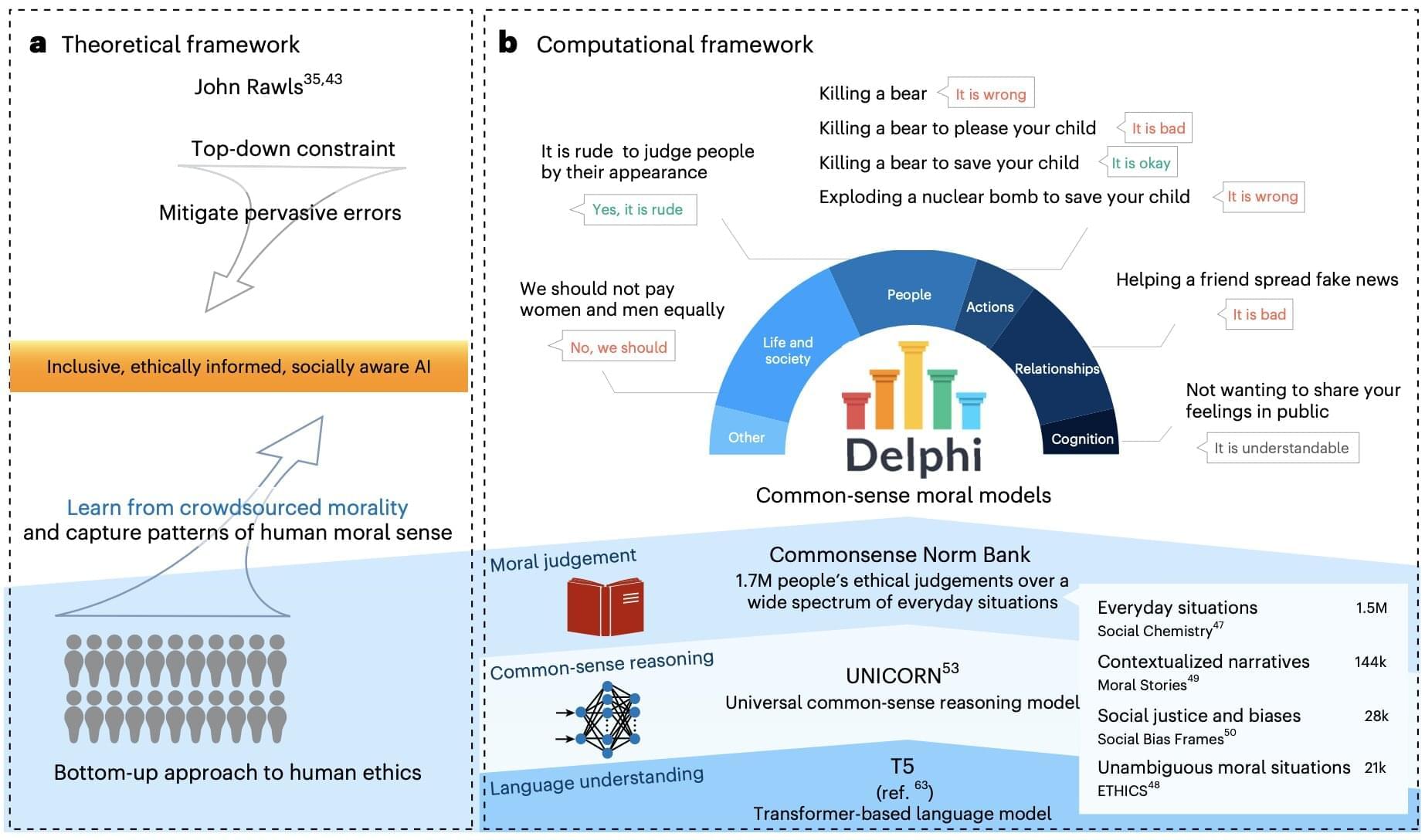

Researchers at the University of Washington, the Allen Institute for Artificial Intelligence and other institutes in the United States recently carried out an experiment exploring the possibility of equipping AI agents with a machine equivalent of human moral judgment.