About six years ago, scientists discovered a new, more powerful type of neural network model known as a transformer. These models can achieve unprecedented performance, for instance by generating text from prompts with near-human-like accuracy. Transformers underlie AI systems such as ChatGPT and Bard. While incredibly effective, transformers are also mysterious: Unlike with other brain-inspired neural network models, it hasn’t been clear how to build them using biological components.

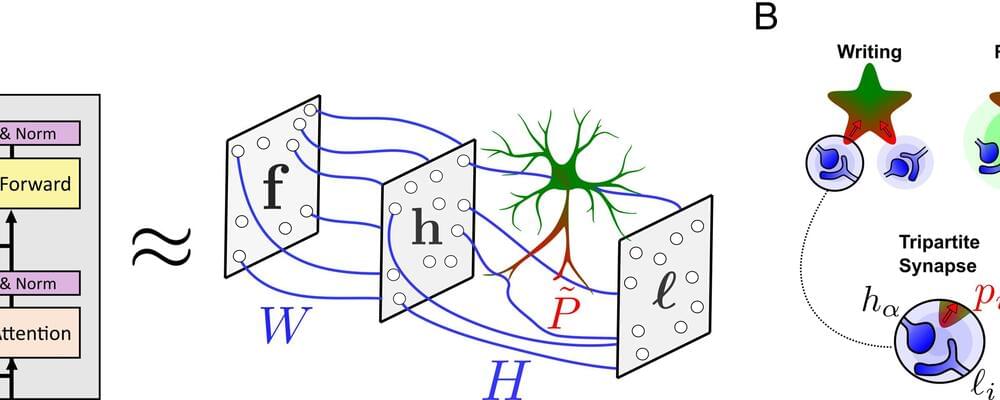

Now, researchers from MIT, the MIT-IBM Watson AI Lab, and Harvard Medical School have produced a hypothesis that may explain how a transformer could be built using biological elements in the brain. They suggest that a biological network composed of neurons and other brain cells called astrocytes could perform the same core computation as a transformer.