Whatever business a company may be in, software plays an increasingly vital role, from managing inventory to interfacing with customers. Software developers, as a result, are in greater demand than ever, and that’s driving the push to automate some of the easier tasks that take up their time.

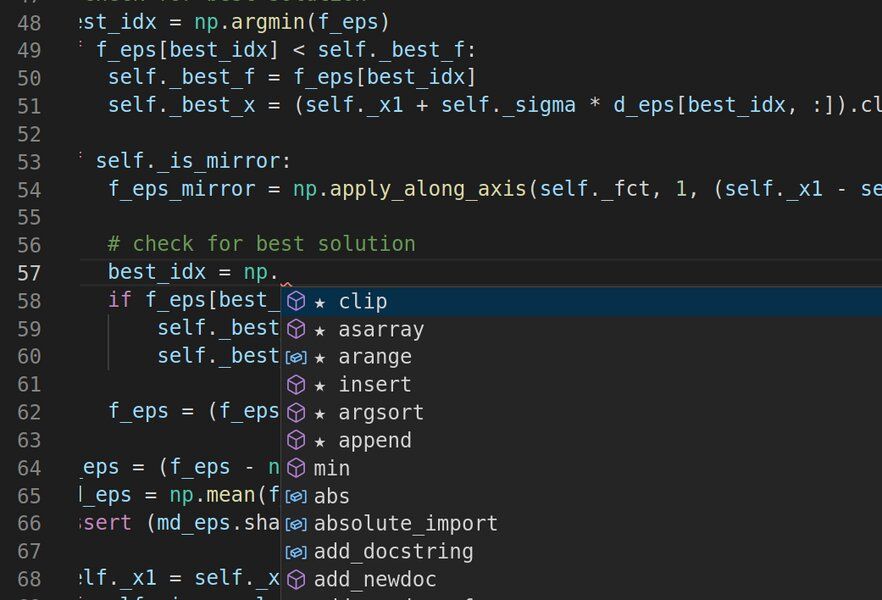

Productivity tools like Eclipse and Visual Studio suggest snippets of code that developers can easily drop into their work as they write. These automated features are powered by sophisticated language models that have learned to read and write computer code after absorbing thousands of examples. But like other deep learning models trained on big datasets without explicit instructions, language models designed for code processing have baked-in vulnerabilities.

“Unless you’re really careful, a hacker can subtly manipulate inputs to these models to make them predict anything,” says Shashank Srikant, a graduate student in MIT’s Department of Electrical Engineering and Computer Science. “We’re trying to study and prevent that.”