Voyager 1, the most distant spacecraft from Earth, has been traveling for nearly half a century and simply refuses to die.

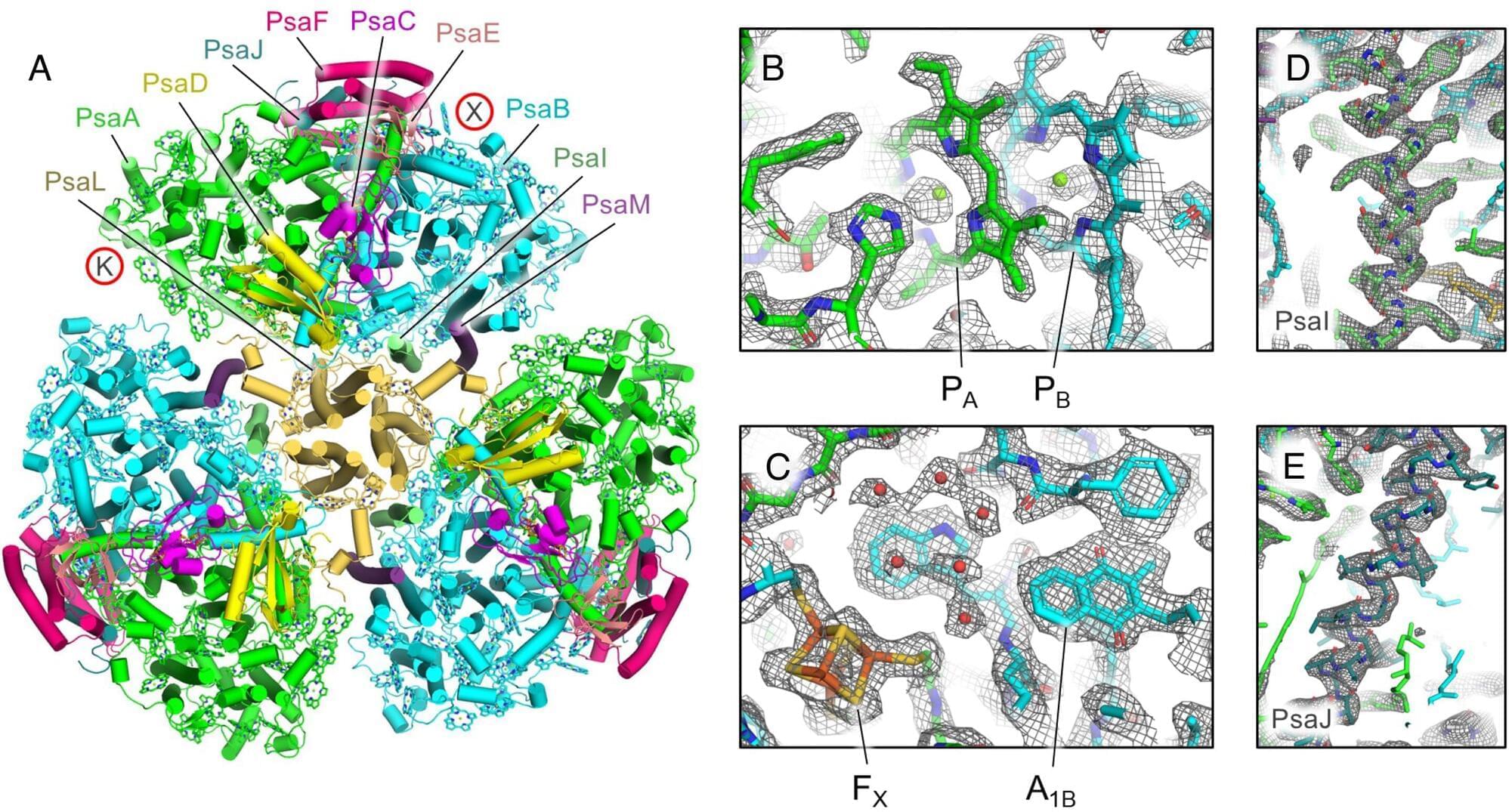

An international team of scientists has unlocked a key piece of Earth’s evolutionary puzzle by decoding the structure of a light-harvesting “nanodevice” in one of the planet’s most ancient lineages of cyanobacteria.

The discovery, published in Proceedings of the National Academy of Sciences, provides an unprecedented glimpse into how early life harnessed sunlight to produce oxygen—a process that transformed our planet forever.

The team, including Dr. Tanai Cardona from Queen Mary University of London, focused on Photosystem I (PSI), a molecular complex that converts light into electrical energy. They purified it from Anthocerotibacter panamensis, a recently discovered species representing a lineage that diverged from all other cyanobacteria roughly 3 billion years ago.

New research reveals how elevated eye pressure distorts blood vessels and disrupts oxygen delivery, potentially accelerating the progression of glaucoma, a leading cause of irreversible vision loss.

Max Hodak is the co-founder of Neuralink and the founder of Science Corp.0…

Meta released a massive trove of chemistry data Wednesday that it hopes will supercharge scientific research and that it says is also crucial for the development of more advanced, general-purpose AI systems.

The company used the data set to build a powerful new AI model for scientists that can shorten the time it takes to create new drugs and materials.

The Open Molecules 2025 effort required 6 billion compute hours to create, and is the result of 100 million calculations that simulate the quantum mechanics of atoms and molecules in four key areas chosen for their potential impact on science.

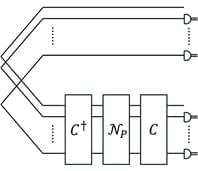

A new theorem shows that no universal entanglement purification protocol exists for all two-qubit entangled states if one is limited to just standard local operations and classical communication.

J1: Incentivizing Thinking in LLM-as-a-Judge via Reinforcement Learning, by Chenxi Whitehouse and 6 other authors

The progress of AI is bottlenecked by the quality of evaluation, and powerful LLM-as-a-Judge models have proved to be a core solution. Improved judgment ability is enabled by stronger chain-of-thought reasoning, motivating the need to find the best recipes for training such models to think. In this work we introduce J1, a reinforcement learning approach to training such models. Our method converts both verifiable and non-verifiable prompts to judgment tasks with verifiable rewards that incentivize thinking and mitigate judgment bias. In particular, our approach outperforms all other existing 8B or 70B models when trained at those sizes, including models distilled from DeepSeek-R1. J1 also outperforms o1-mini, and even R1 on some benchmarks, despite training a smaller model. We provide analysis and ablations comparing Pairwise-J1 vs Pointwise-J1 models, offline vs online training recipes, reward strategies, seed prompts, and variations in thought length and content. We find that our models make better judgments by learning to outline evaluation criteria, comparing against self-generated reference answers, and re-evaluating the correctness of model responses.
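The abstract mentions judgment tasks with verifiable rewards that mitigate judgment bias but does not spell out the reward itself. The sketch below is one plausible reading, not the authors' implementation: it assumes a pairwise setting where the preferred response is known, and it pays a reward only when the judge picks that response in both presentation orders, so a judge that always favors one position earns nothing. The names `PairwiseExample`, `verdict_reward`, `always_first`, and `longer_wins` are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PairwiseExample:
    prompt: str
    better: str  # response assumed to be the preferred one
    worse: str   # response assumed to be the dispreferred one


# Hypothetical judge interface: (prompt, response_a, response_b) -> "A" or "B".
Judge = Callable[[str, str, str], str]


def verdict_reward(judge: Judge, ex: PairwiseExample) -> float:
    """Return 1.0 only if the judge picks the better response in both orders.

    Assumption: requiring agreement across both orderings is used here as a
    simple stand-in for a bias-mitigating, verifiable reward.
    """
    correct_first = judge(ex.prompt, ex.better, ex.worse) == "A"
    correct_swapped = judge(ex.prompt, ex.worse, ex.better) == "B"
    return 1.0 if (correct_first and correct_swapped) else 0.0


def always_first(prompt: str, a: str, b: str) -> str:
    """Toy judge with pure position bias: always prefers the first response."""
    return "A"


def longer_wins(prompt: str, a: str, b: str) -> str:
    """Toy judge that prefers the longer response, regardless of position."""
    return "A" if len(a) >= len(b) else "B"


example = PairwiseExample("What is 2 + 2?", better="2 + 2 = 4", worse="5")

print(verdict_reward(always_first, example))  # position-biased judge
print(verdict_reward(longer_wins, example))   # order-independent judge
```

In a reinforcement learning loop, a scalar like this would be computed on the judge model's sampled verdicts; the toy judges here only demonstrate that position bias is penalized.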

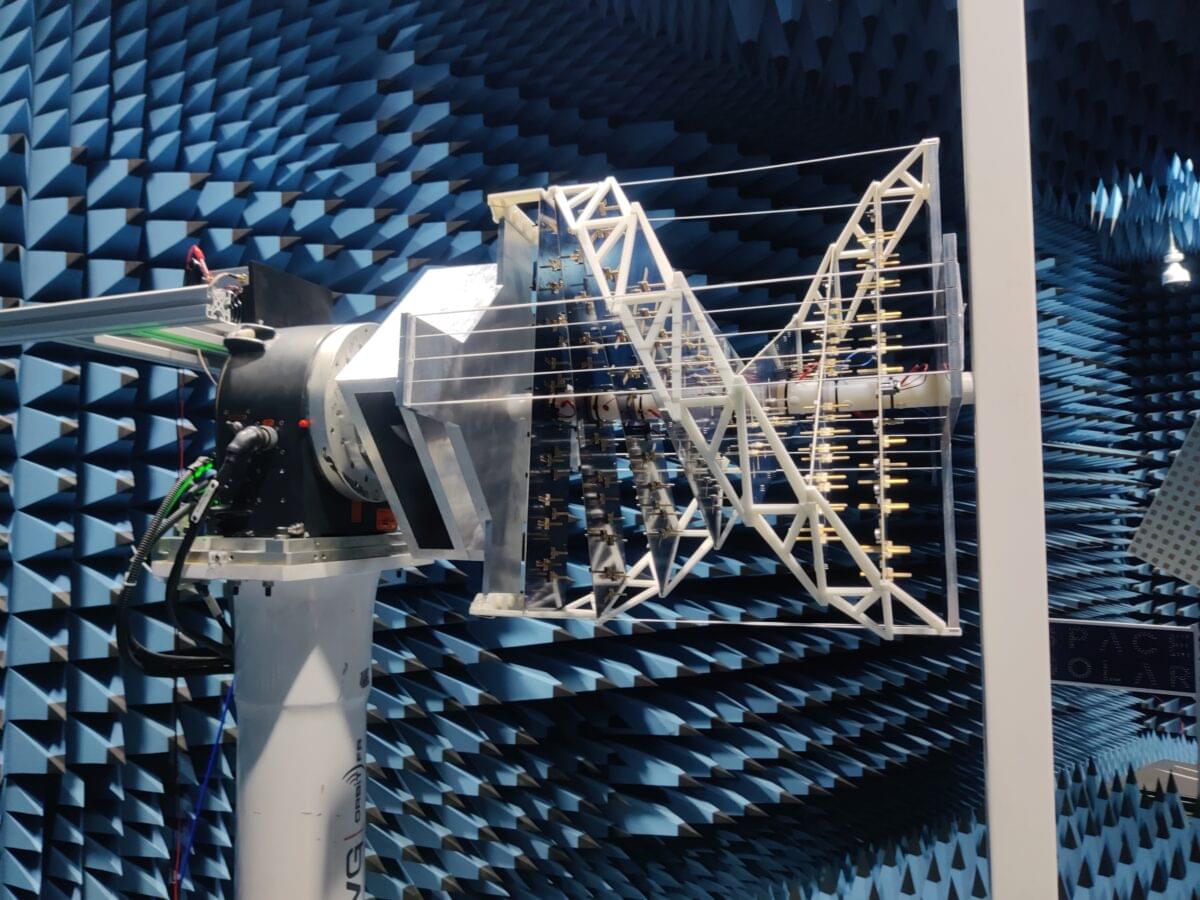

Three-year-old Space Solar has completed an 18-month engineering design and development project for key parts of its modular space-based solar power system.

Tesla is developing a terawatt-level supercomputer at Giga Texas to enhance its self-driving technology and AI capabilities, positioning the company as a leader in the automotive and renewable energy sectors despite current challenges.

## Questions to inspire discussion

Tesla’s Supercomputers.

💡 Q: What is the scale of Tesla’s new supercomputer project?

A: Tesla’s Cortex 2 supercomputer at Giga Texas aims for 1 terawatt of compute with 1.4 billion GPUs, making it 3,300x bigger than today’s top system.

💡 Q: How does Tesla’s compute power compare to Chinese competitors?

A: Tesla’s Full Self-Driving (FSD) uses 3x more compute than Huawei, Xpeng, Xiaomi, and Li Auto combined, with BYD not yet a significant competitor.

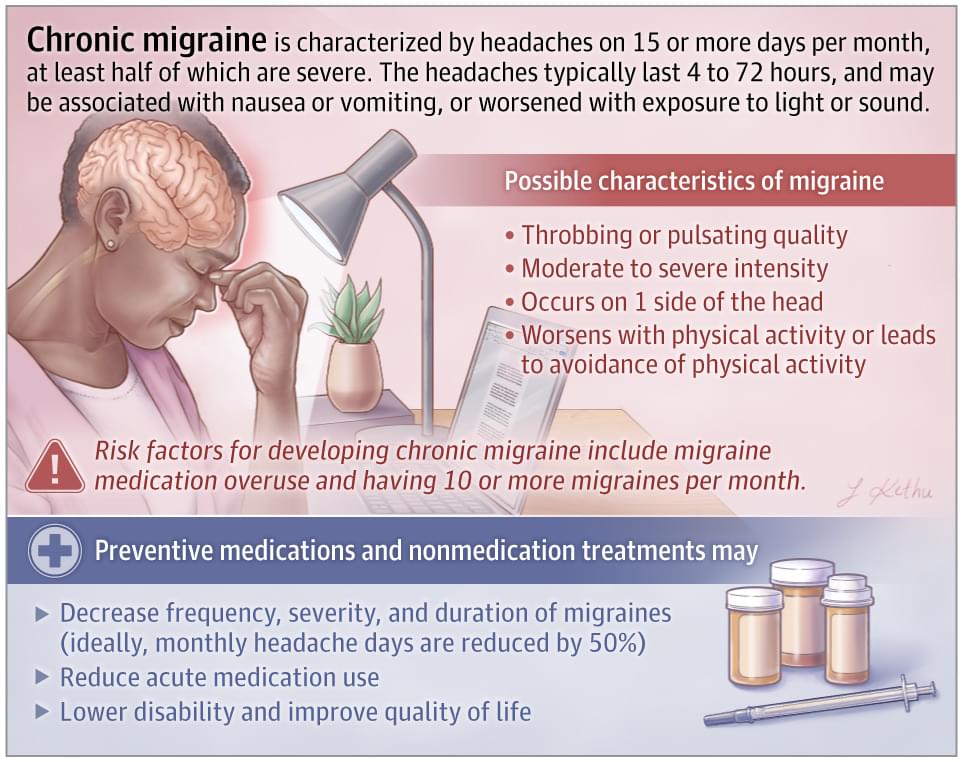

This JAMA Patient Page describes the condition of chronic migraine, its risk factors, and preventive and acute treatment options.