Superagers retain sharp minds into their 80s and beyond, defying the idea that cognitive decline is inevitable as we age.

The British government isn’t the only one looking to introduce digital ID cards. There is so much to worry about here, not least the threat of hacks, says Annalee Newitz.

A team of researchers led by Peter Mac’s Professor Sherene Loi has uncovered how having children and breastfeeding reduce a woman’s long-term risk of breast cancer.

Published today in the prestigious journal Nature, the study provides a biological explanation for the protective effect of childbearing and shows how this has a lasting impact on a woman’s immune system. Professor Loi says the findings also offer new insights into breast cancer prevention and treatment.

In this video, we explore one of the most fascinating frontiers of technology: merging humans and machines through soundwaves. Discover how scientists are using acoustic signals to transmit data, control implants, and even connect the human brain to AI systems, all without wires. From ultrasonic communication to sound-based neural interfaces, this is where biology meets next-gen tech.

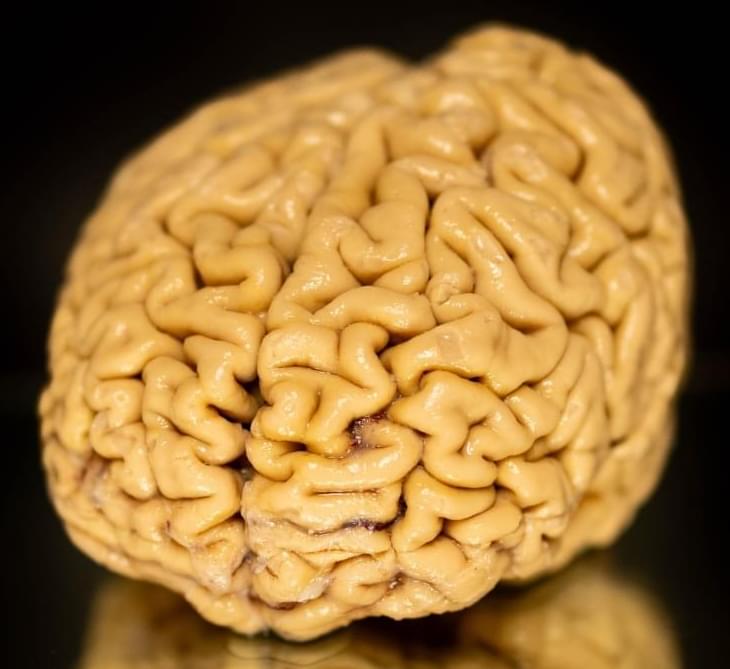

Metabolism guides the activation states of regulatory T cells, the immune cells that prevent inappropriate activation of the immune system. St. Jude Children’s Research Hospital scientists recently uncovered how mitochondria, the powerhouses of cells, and lysosomes, the cellular recycling systems, work together to activate and deactivate these immune controllers. Their discoveries carry implications ranging from understanding autoimmune and inflammatory diseases to improving immunotherapy for cancer. The findings were published today in Science Immunology.

When the immune system identifies and responds to a threat, it creates inflammation to combat the problem. A subset of immune cells, called regulatory T cells, also become activated and ensure that the inflammation is properly controlled. They return a tissue to normal once the threat is neutralized. Regulatory T cells play such an important role that the 2025 Nobel Prize in Physiology or Medicine was awarded in recognition of their original discovery.

When regulatory T cells don’t function properly, people can develop tissue damage from uncontrolled inflammation, or autoimmune disorders caused by inappropriate activation of the immune system. Despite their importance, the precise molecular process driving regulatory T cell activation has been unclear. This gap limits the capacity to harness these cells to treat autoimmune or inflammatory disorders.

On Prince of Wales Island, Alaska, gray wolves are doing something unexpected: hunting sea otters. This surprising dietary shift appears to have notable implications for both ecosystems and wolf health, but little is known about how the predators are capturing marine prey. Patrick Bailey, a Ph.D. candidate at the University of Rhode Island, is researching these understudied behaviors of gray wolves.

Using a creative mix of approaches—including wolf teeth samples and trail cameras—Bailey is exploring how coastal gray wolves are using marine resources, what this suggests about their behavioral and hunting adaptations, and how these adaptations differentiate them from other wolf populations.

On land, gray wolves are known to play a vital ecological role because of their ability to regulate food webs. “We don’t have a clear understanding of the connections between water and land food webs, but we suspect that they are much more prevalent than previously understood,” says Bailey, a member of Sarah Kienle’s CEAL Lab in the Department of Natural Resources Science. “Since wolves can alter land ecosystems so dramatically, it is possible that we will see similar patterns in aquatic habitats.”