Do things look duller? As we age, the way we see the world can change – literally

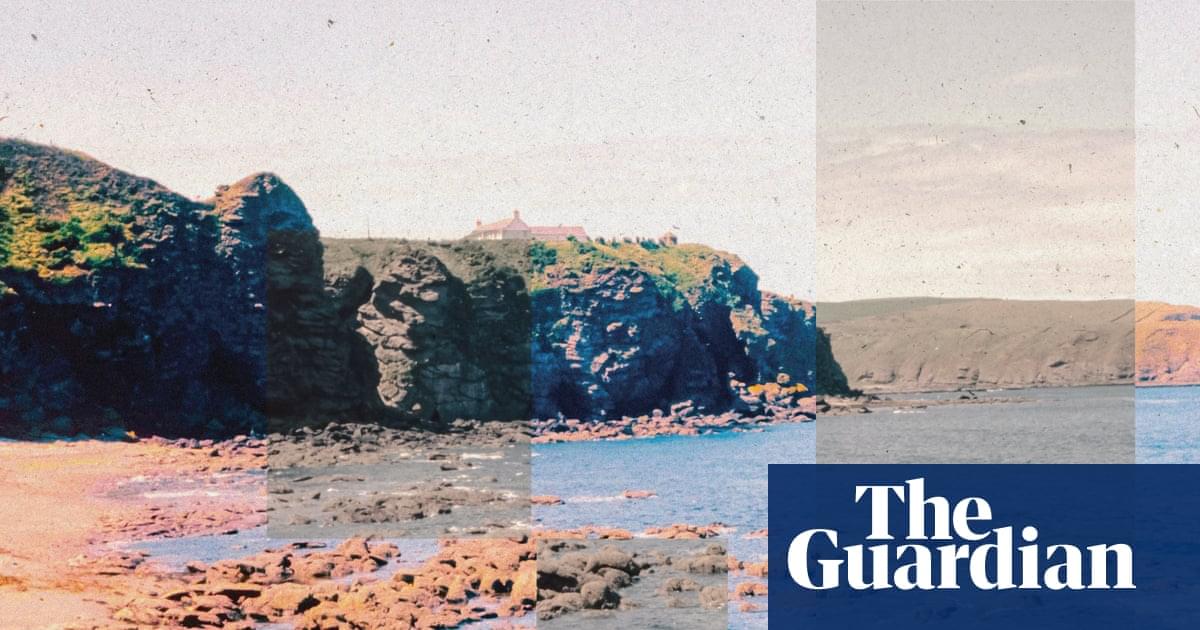

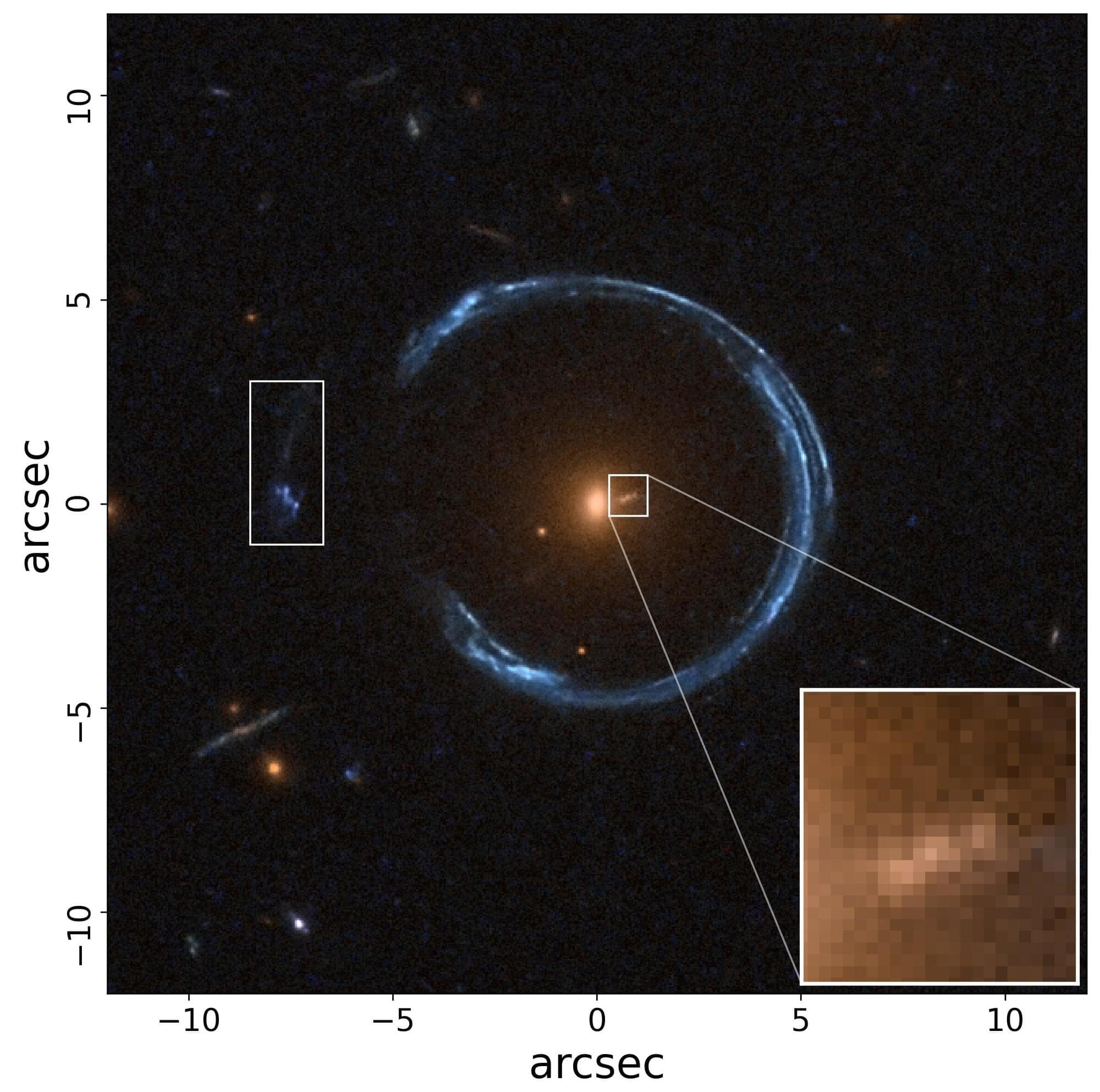

Astronomers have discovered potentially the most massive black hole ever detected. The cosmic behemoth is close to the theoretical upper limit of what is possible in the universe and is 10,000 times heavier than the black hole at the center of our own Milky Way galaxy.

It exists in one of the most massive galaxies ever observed—the Cosmic Horseshoe—which is so big it distorts spacetime and warps the passing light of a background galaxy into a giant horseshoe-shaped Einstein ring.

The ultramassive black hole has a mass of 36 billion solar masses, according to a new paper published today in Monthly Notices of the Royal Astronomical Society.

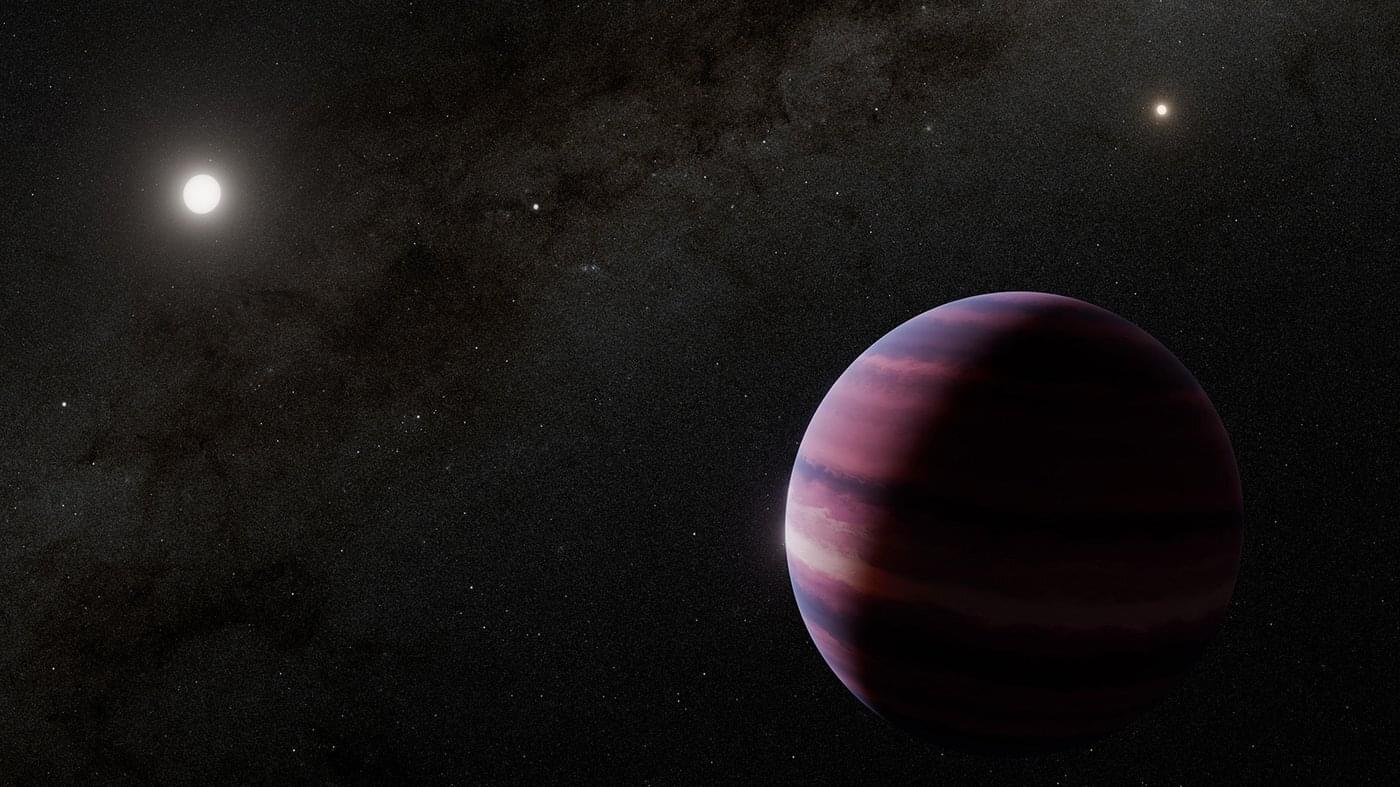

Astronomers have used NASA’s James Webb Space Telescope to find strong evidence for a planet orbiting a star in the triple system closest to our own sun. At just 4 light-years away from Earth, the Alpha Centauri star system has long been a compelling target in the search for exoplanets, the worlds beyond our solar system.

The system is made up of a close pair of orbiting stars, Alpha Centauri A and Alpha Centauri B, the two closest sun-like stars to Earth, as well as the faint red dwarf star Proxima Centauri. While there are three confirmed planets orbiting Proxima Centauri, the presence of other worlds surrounding the sun-like twins of Alpha Centauri A and Alpha Centauri B has proved challenging to confirm.

Now, Webb’s observations from its Mid-Infrared Instrument (MIRI) are providing the strongest evidence to date of a gas giant planet orbiting in the habitable zone of Alpha Centauri A. (MIRI was developed in part by the Jet Propulsion Laboratory [JPL], which is managed by Caltech for NASA.) The habitable zone is the region around a star where temperatures could be right for liquid water to pool on a planet’s surface.

While all seeds produced within a fruit have the same maternal genome, the paternal genomes of seeds can come from the pollen of one or more paternal parents. A common assumption about flowering plants is that the ovules are most often pollinated by multiple paternal parents at the flower level.

Various genomic conflicts can arise during fertilization and fruit production in multiseed plants: conflicts over nutritional resources between the maternal plant and its offspring, conflicts over nutritional resources among developing seeds, conflicts between paternal and maternal genomes over seed development, and competition among paternal parents. The relationship between these genomic conflicts and single or multiple paternal parentage is unclear.

To shed some light on the prevalence of monogamy and polyandry in flowering plants, a group of researchers in India conducted a systematic literature review of studies from 1984 to 2024 and a meta-analysis of 63 flowering plant species from diverse families. The study was recently published in the Proceedings of the National Academy of Sciences. The number of paternal parents was determined in the context of self-compatible vs. self-incompatible breeding, seed number, and phylogenetic relationships.

Most of us find it difficult to grasp the quantum world. According to Heisenberg’s uncertainty principle, it’s like observing a dance without being able to see simultaneously exactly where someone is dancing and how fast they’re moving: you must always choose to focus on one.

And yet, this quantum dance is far from chaotic; the dancers follow a strict choreography. In molecules, this strange behavior has another consequence: Even if a molecule should be completely frozen at absolute zero, it never truly comes to rest. The atoms it is made of perform a constant, never-ending quiet dance driven by so-called zero-point energy.
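For readers who want the dance metaphor in symbols, here is a minimal sketch using standard textbook relations. Treating a molecular vibration as a quantum harmonic oscillator is an illustrative assumption, and the symbols $\Delta x$, $\Delta p$, $\hbar$, $\omega$ and $n$ do not appear in the article itself.

$$
\Delta x \,\Delta p \;\ge\; \frac{\hbar}{2},
\qquad
E_n = \hbar\omega\left(n + \tfrac{1}{2}\right)
\;\;\Rightarrow\;\;
E_0 = \tfrac{1}{2}\hbar\omega > 0
$$

The first relation says that the uncertainties in position and momentum cannot both be made arbitrarily small. The second gives the allowed energies of an idealized vibration with angular frequency $\omega$: even the lowest level, $n = 0$, carries a nonzero energy, which is the zero-point energy that keeps the atoms moving at absolute zero.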

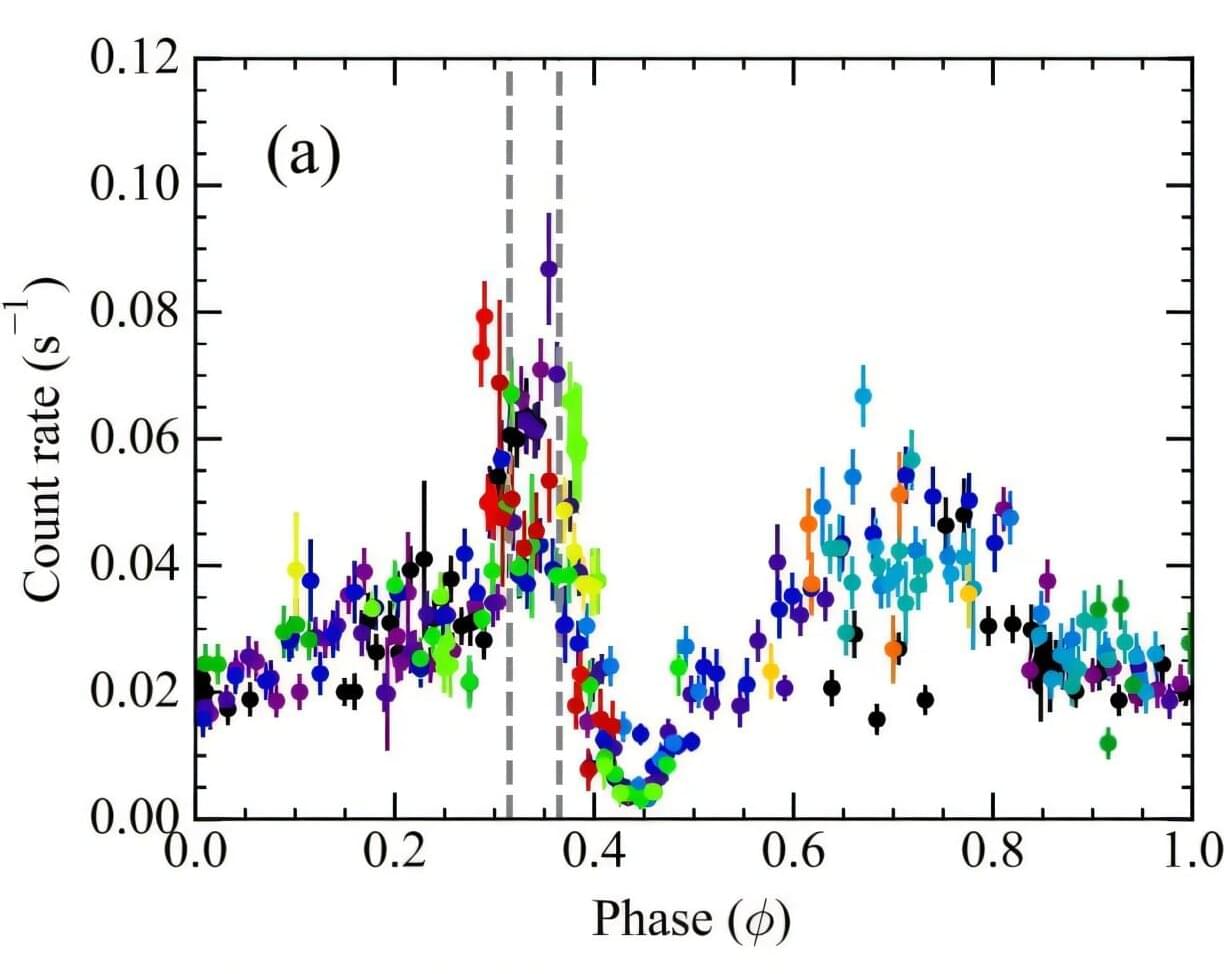

An international team of astronomers has performed multiwavelength observations of a gamma-ray binary system known as HESS J0632+057. Results of the observational campaign, presented July 31 on the pre-print server arXiv, shed more light on the nature of this binary.

Gamma-ray binaries consist of a massive OB-type star in orbit with a compact object like a neutron star or black hole. Some of them emit a significant amount of radiation at very high energies (TeV) and are therefore known as TeV gamma-ray binaries (TGBs).

TGBs exhibit diverse high-energy phenomena driven by interactions between the two components. Given that these sources are extremely rare, astronomers are especially interested in exploring them in detail, which could help us better understand their mysterious nature.

OpenAI released a keenly awaited new generation of its hallmark ChatGPT on Thursday, touting “significant” advancements in artificial intelligence capabilities as a global race over the technology accelerates.

ChatGPT-5 is rolling out free to all users of the AI tool, which is used by nearly 700 million people weekly, OpenAI said in a briefing with journalists.

Co-founder and chief executive Sam Altman touted this latest iteration as “clearly a model that is generally intelligent.”

The ability to detect single photons (the smallest energy packets constituting electromagnetic radiation) in the infrared range has become a pressing need across numerous fields, from medical imaging and astrophysics to emerging quantum technologies. In observational astronomy, for example, the light from distant celestial objects can be extremely faint, so detecting it requires exceptional sensitivity in the mid-infrared.

Similarly, in free-space quantum communication—where single photons need to travel across vast distances—operating in the mid-infrared can provide key advantages in signal clarity.

The widespread use of single-photon detectors in this range is limited by the need for large, costly, and energy-intensive cryogenic systems to keep the temperature below 1 kelvin. This also hinders the integration of the resulting detectors into modern photonic circuits, the backbone of today’s information technologies.

Fuel cells are energy solutions that can convert the chemical energy in fuels into electricity via specific chemical reactions, instead of relying on combustion. Promising types of fuel cells are direct methanol fuel cells (DMFCs), devices specifically designed to convert the energy in methyl alcohol (i.e., methanol) into electrical energy.

Despite their potential for powering large electronics, vehicles and other systems requiring portable power, these methanol-based fuel cells still have significant limitations. Most notably, studies have found that their performance tends to degrade substantially over time because the materials used to catalyze reactions in the cells (i.e., electrocatalytic surfaces) gradually become less effective.

One approach to cleaning these surfaces and preventing the buildup of poisoning byproducts from the chemical reactions is to modulate the voltage applied to the fuel cells. However, manually adjusting this voltage in effective ways, while also accounting for physical and chemical processes in the fuel cells, is impractical for real-world applications.