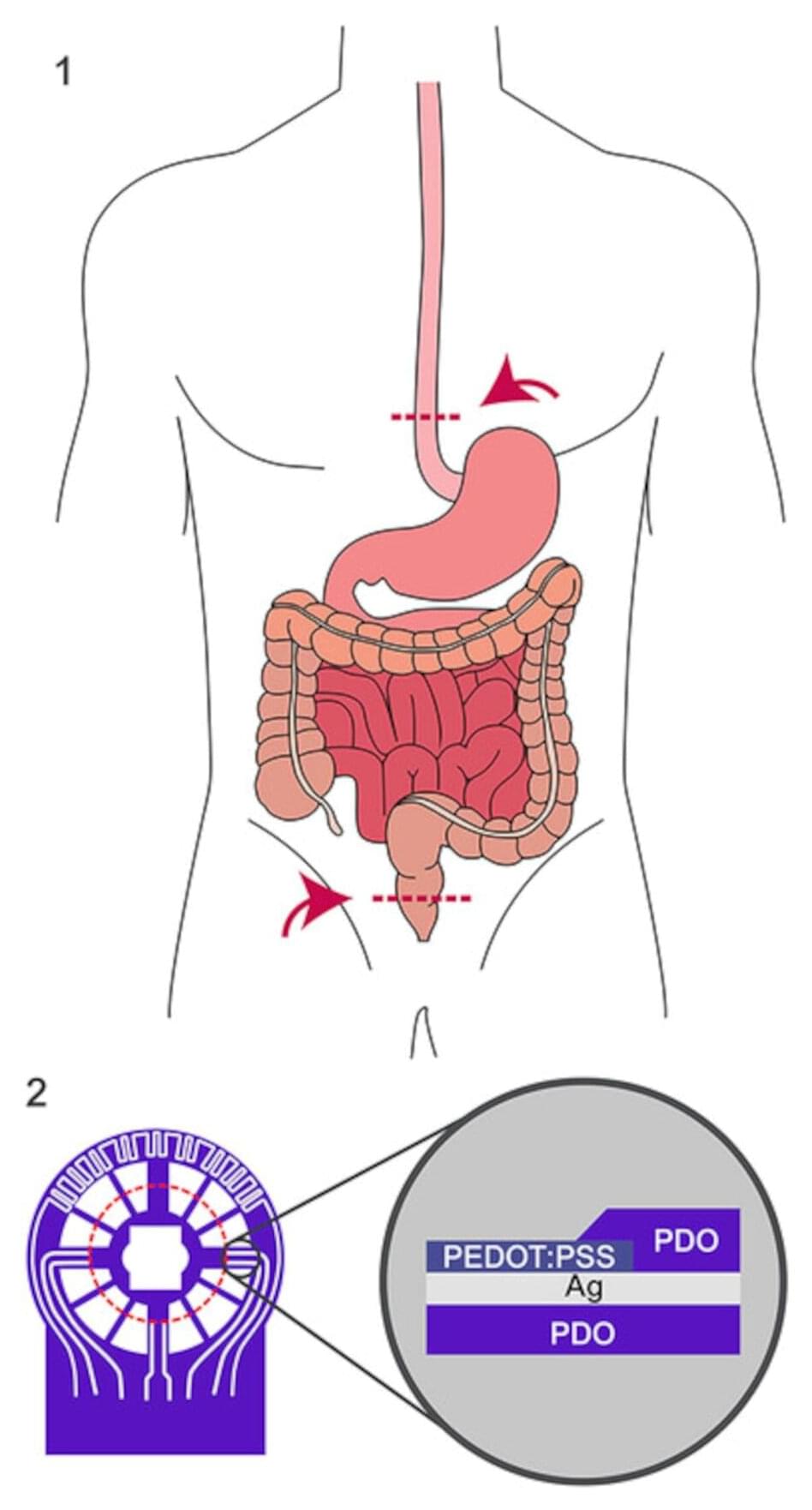

An interdisciplinary research team from Dresden University of Technology (TUD), Rostock University Medical Center (UMR) and Dresden University Hospital has developed an innovative, implantable and fully absorbable sensor film. For the first time, it enables reliable early detection of circulatory disorders in intestinal anastomoses, one of the riskiest surgical procedures in the abdominal cavity. The results have now been published in the journal Advanced Science.

Intestinal anastomoses, the surgical connection of two sections of intestine after diseased tissue has been removed, carry a considerable risk of post-operative complications. Circulatory disorders or immunological reactions in particular can cause serious damage, or even death, within a short time. Until now, however, there has been no way to monitor the suture site directly. This exposes patients to risk and drives up costs through follow-up operations and long hospital stays.

To address this specific medical need, the interdisciplinary network of the Else Kröner Fresenius Center (EKFZ) for Digital Health at TUD and Dresden University Hospital brought together key experts from Dresden and Rostock.