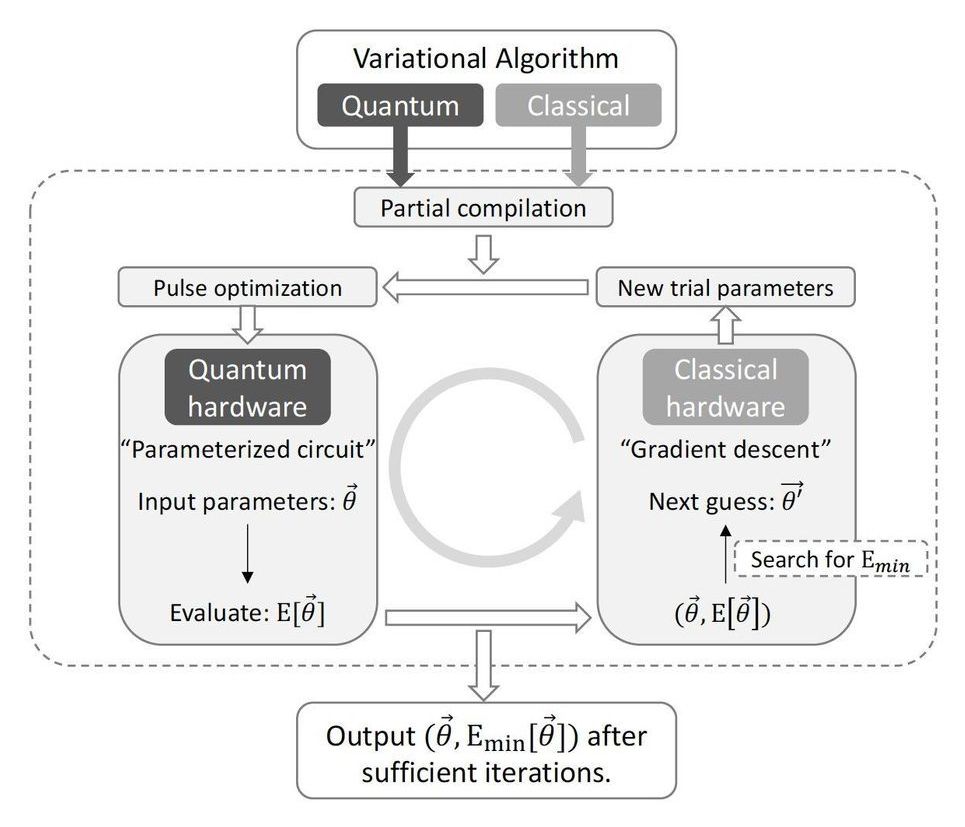

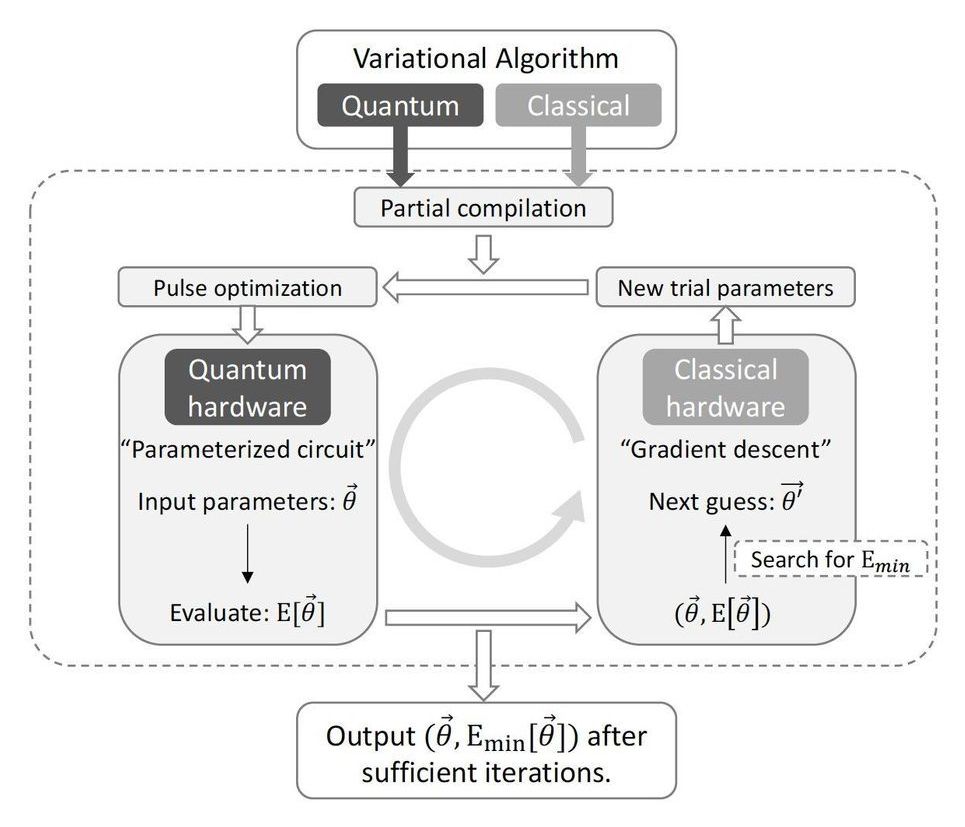

A new paper from researchers at the University of Chicago introduces a technique for compiling highly optimized quantum instructions that can be executed on near-term hardware. This technique is particularly well suited to a new class of variational quantum algorithms, which are promising candidates for demonstrating useful quantum speedups. The new work was enabled by uniting ideas across the stack, spanning quantum algorithms, machine learning, compilers, and device physics. The interdisciplinary research was carried out by members of the EPiQC (Enabling Practical-scale Quantum Computation) collaboration, an NSF Expedition in Computing.

Adapting to a New Paradigm for Quantum Algorithms

The original vision for quantum algorithms dates to the early 1980s, when physicist Richard Feynman proposed performing molecular simulations, a practically impossible task for traditional computers, using just thousands of noiseless qubits (quantum bits). Other algorithms developed in the 1990s and 2000s demonstrated that thousands of noiseless qubits would also offer dramatic speedups for problems such as database search, integer factoring, and matrix algebra. However, despite recent advances in quantum hardware, these algorithms are still decades away from scalable realizations, because the qubits in current hardware are noisy.