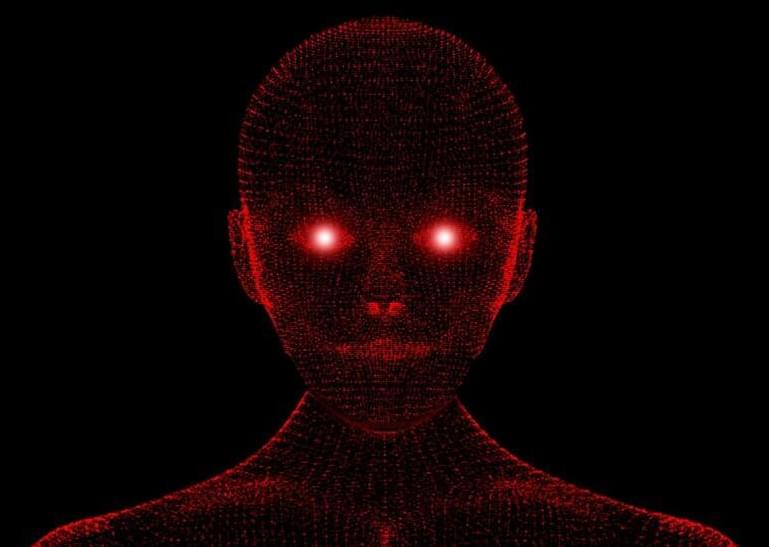

🤖 Officially, they’re called “lethal autonomous weapons systems.” Colloquially, they’re called “killer robots.” Either way, you’re going to want to read about their future in warfare. 👇

The commander must also be prepared to justify his or her decision if and when the LAWS is wrong. As with the application of force by manned platforms, the commander assumes risk on behalf of his or her subordinates. In this case, a narrow, extensively tested algorithm with an extremely high level of certainty (for example, 99 percent or higher) should meet the threshold for a justified strike and absolve the commander of criminal accountability.

Lastly, LAWS must also be tested extensively in the most demanding possible training and exercise scenarios. The methods they use to make their lethal decisions—from identifying a target and confirming its identity to mitigating the risk of collateral damage—must be publicly released (along with statistics backing up their accuracy). Transparency is crucial to building public trust in LAWS, and confidence in their capabilities can only be built by proving their reliability through rigorous and extensive testing and analysis.

Continue reading “‘Killer robots’ are coming. Is the US ready for the consequences?” »