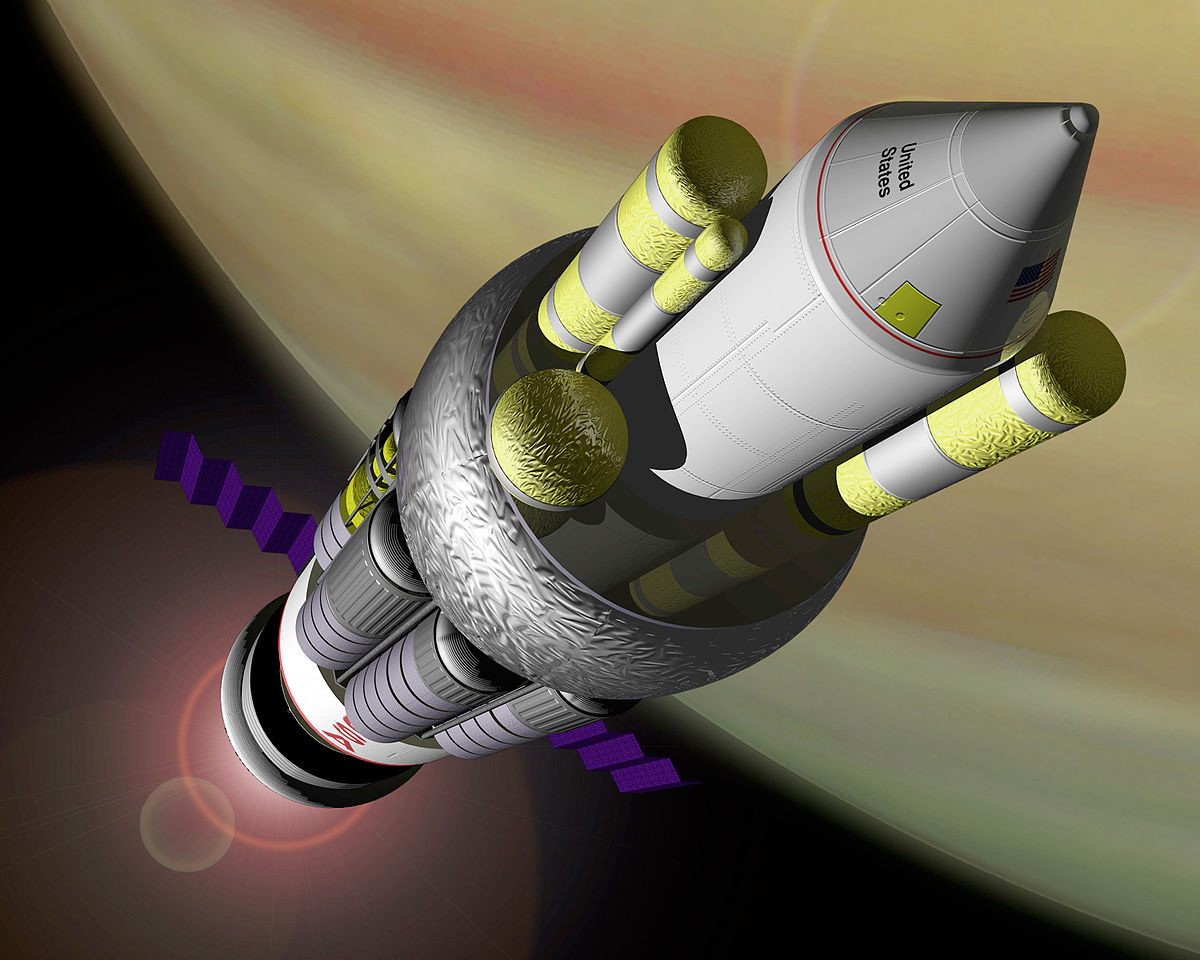

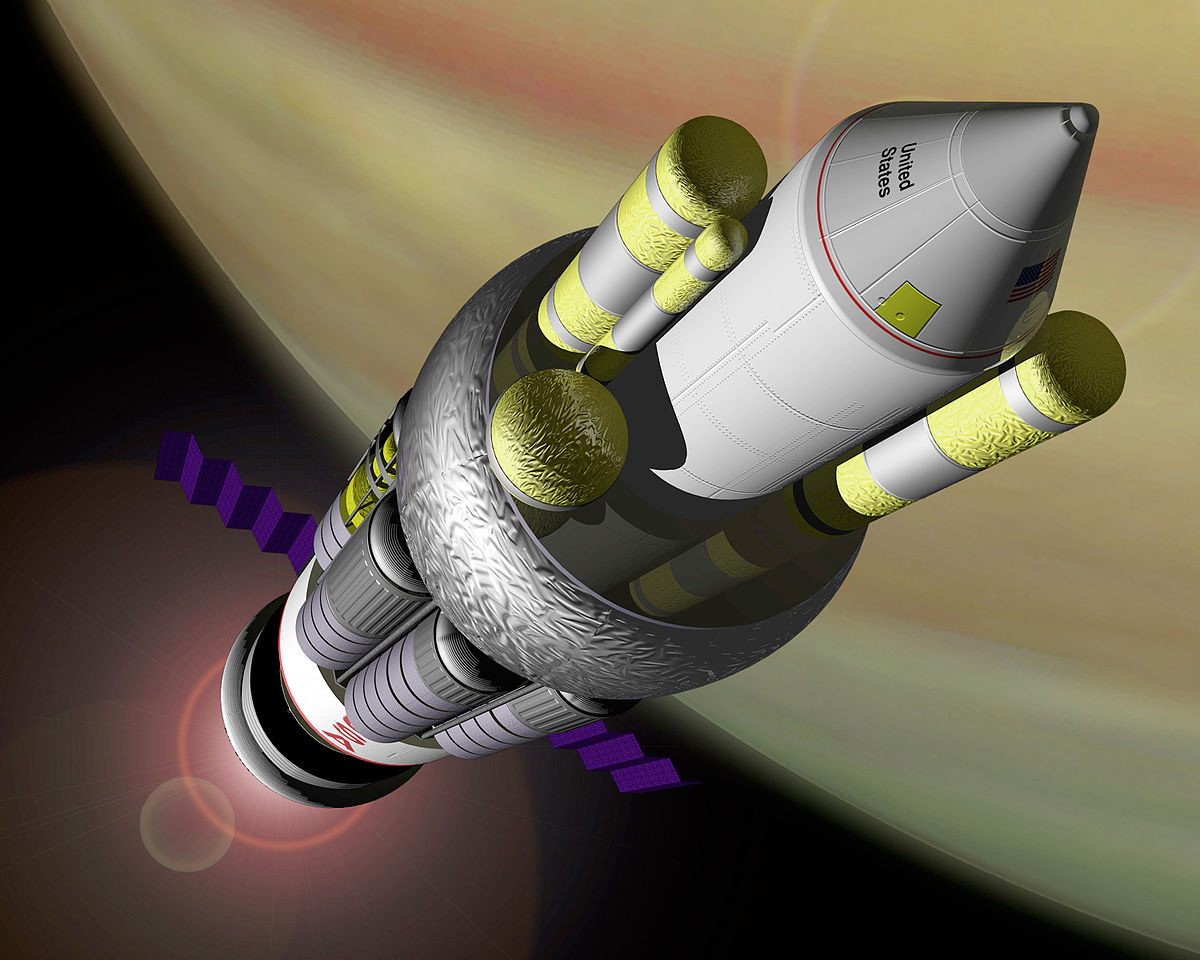

It is interesting to note that the technical possibility of sending an interstellar Ark appeared in the 1960s, based on Ulam's concept of a “blast-ship”, which uses the energy of nuclear explosions to propel itself forward. Detailed calculations were carried out under Project Orion. http://en.wikipedia.org/wiki/Project_Orion_(nuclear_propulsion) In 1968 Dyson published the article “Interstellar Transport”, which gives upper and lower bounds for such projects. In the conservative estimate (i.e. one that assumes no new technical achievements), it would cost 1 U.S. GDP (600 billion U.S. dollars at the time of writing) to launch a spaceship with a mass of 40 million tons (of which 5 million tons is payload), and its flight time to Alpha Centauri would be 1200 years. In a more advanced version the price is 0.1 U.S. GDP, the flight time is 120 years, and the launch mass is 150 000 tons (of which 50 000 tons is payload). In principle, with a two-stage scheme, more advanced thermonuclear bombs, and reflectors, the flight time to the nearest star could be reduced to 40 years.
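To get a feel for what these flight times imply, the following minimal sketch converts them into average cruise speeds and payload fractions. It assumes a distance of 4.37 light years to Alpha Centauri (a figure not given in the text) and ignores the acceleration and braking phases, so the numbers are rough averages, not Dyson's own results.

```python
# Assumed distance to Alpha Centauri in light years (not stated in the text).
ALPHA_CENTAURI_LY = 4.37
# Speed of light in km/s.
C_KM_S = 299_792.458

# Flight times in years, taken from the paragraph above.
flight_years = {"conservative": 1200, "advanced": 120, "two-stage": 40}

# (launch mass, payload) in tons, taken from the paragraph above.
masses = {
    "conservative": (40_000_000, 5_000_000),
    "advanced": (150_000, 50_000),
}


def mean_speed_fraction_of_c(distance_ly, years):
    """Average speed as a fraction of c, ignoring acceleration and braking."""
    return distance_ly / years


speeds = {
    name: mean_speed_fraction_of_c(ALPHA_CENTAURI_LY, years)
    for name, years in flight_years.items()
}
payload_fraction = {
    name: payload / total for name, (total, payload) in masses.items()
}

for name, frac in speeds.items():
    print(f"{name}: {frac:.4f} c, about {frac * C_KM_S:.0f} km/s")
for name, frac in payload_fraction.items():
    print(f"{name}: payload is {frac:.1%} of launch mass")
```

On these assumptions the conservative design averages well under one percent of the speed of light, and each tenfold cut in flight time requires a tenfold increase in average speed.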

Of course, the crew of the spaceship is doomed to extinction if it does not find a habitable planet fit for humans in the nearest star system. Another option is to colonize an uninhabited planet. In 1980, R. Freitas proposed exploring the Moon with a self-replicating factory with an initial mass of 100 tons, but controlling it requires artificial intelligence. “Advanced Automation for Space Missions” http://www.islandone.org/MMSG/aasm/ Artificial intelligence does not yet exist, but such a factory could be managed by people. The main question is how much technology and equipment would have to be delivered to a Moon-like uninhabited planet so that people could build a completely self-sustaining and growing civilization on it. In effect, this means creating something like an inhabited von Neumann probe. A modern self-sustaining state includes at least a few million people (like Israel), with hundreds of tons of equipment per person, mainly in the form of houses and roads. The mass of machines is much smaller. This gives an upper bound of about 1 billion tons for a human colony able to replicate itself. The lower estimate is about 100 people, each accounting for approximately 100 tons (mainly food and shelter), i.e. 10 000 tons in total. A realistic estimate should lie somewhere in between, probably in the tens of millions of tons. All this assumes that no miraculous nanotechnology has yet been discovered.
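The bounds above are simple products of population and mass per person. Here is a minimal sketch of that arithmetic. For the upper bound it assumes 5 million people at 200 tons each; these are illustrative values chosen to match “a few million” and “hundreds of tons”, since the text gives no exact figures.

```python
import math


def colony_mass_tons(people, tons_per_person):
    """Total colony mass as population times mass per person."""
    return people * tons_per_person


# Upper bound: assumed 5 million people at 200 tons each (illustrative values).
upper = colony_mass_tons(5_000_000, 200)
# Lower bound: 100 people at 100 tons each, as stated in the text.
lower = colony_mass_tons(100, 100)
# Geometric midpoint of the bounds, one way to read "in between" on a log scale.
midpoint = math.sqrt(upper * lower)
# Payload of the conservative Dyson design quoted earlier in the text.
dyson_payload = 5_000_000

print(f"upper bound: {upper:,} tons")
print(f"lower bound: {lower:,} tons")
print(f"log midpoint: {midpoint:,.0f} tons")
print(f"conservative payload within bounds: {lower < dyson_payload < upper}")
```

On these assumptions the logarithmic midpoint is roughly 3 million tons, so an estimate in the tens of millions of tons sits closer to the upper bound. The 5-million-ton payload of the conservative design falls inside the range.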

The advantage of a spaceship as an Ark is that it is a non-specific response to a host of different threats with indeterminate probabilities. If you face a specific threat (an asteroid, an epidemic), it is better to spend the money on eliminating that threat.

Thus, if such a decision had been taken in the 1960s, such a ship could be on its way by now.

But even if we set aside the technical side of the issue, there are several trade-offs among the strategies for creating such a spaceship.

1. The sooner such a project is started, the less technically advanced it will be, the lower its chances of success, and the higher its cost. But the later it is started, the greater the chance that it will not be completed before a global catastrophe.

2. The later the project starts, the greater the chance that it will carry the “diseases” of the mother civilization with it (e.g. the ability to create dangerous viruses).

3. The project to create a spaceship could lead to the development of technologies that threaten civilization itself. A blast-ship uses hundreds of thousands of hydrogen bombs as fuel. Therefore, it could either be used as a weapon, or another party may fear it and respond. In addition, the spaceship could turn around and hit the Earth as a star-hammer, or there may be fear that it will. During construction of the spaceship, man-made accidents with enormous consequences could happen, at worst equal to the detonation of all the bombs on board. If the project is carried out by one country in time of war, other countries could try to shoot down the spaceship when it is launched.

4. The spaceship is a means of protection against a Doomsday machine used as a strategic response in the style of Herman Kahn. Therefore, the creators of such a Doomsday machine may perceive the Ark as a threat to their power.

5. Should we implement one more expensive project, or several cheaper ones?

6. Is it sufficient to limit colonization to the Moon, Mars, Jupiter’s moons, or objects in the Kuiper belt? At the least, these could serve as a fallback position where the technology of autonomous colonies can be tested.

7. The sooner the spaceship starts, the less we know about exoplanets. How far and how fast should the Ark fly in order to be relatively safe?

8. Could the spaceship hide itself so that the Earth does not know where it is, and should it do so? Should the spaceship communicate with Earth? Or would that create a risk of attack by a hostile AI?

9. Would the creation of such projects exacerbate the arms race or lead to premature depletion of resources and other undesirable outcomes? The creation of pure hydrogen bombs would simplify building such a spaceship, or at least reduce its cost. But at the same time it would increase global risks, because nuclear non-proliferation would fail completely.

10. Will the Earth compete with its independent colonies in the future, or will this lead to Star Wars?

11. If the ship departs slowly enough, is it possible to destroy it from Earth with a self-propelled missile or a radiation beam?

12. Is this mission a real chance for the survival of mankind? Those who fly away are likely to be killed, because the chance of the mission's success is no more than 10 per cent. Those who remain on Earth may start to behave more riskily, reasoning: “Well, now that we have protection against global risks, we can start risky experiments.” As a result of the project, the total probability of survival decreases.

13. What are the chances that the Ark's computer network will download a virus if it communicates with Earth? And if it does not communicate, that will reduce its chances of success. Competition for nearby stars is possible, and faster machines would win it. After all, there are not many stars within about 5 light years (Alpha Centauri, Barnard's Star), and competition for them could begin. Dark, lonely planets or large asteroids without host stars may also exist. Their density in the surrounding space should be 10 times greater than the density of stars, but finding them is extremely difficult. It would also be a problem if the nearest stars have no planets or moons. Some stars, including Barnard's Star, are prone to extreme stellar flares, which could kill the expedition.

14. The spaceship will not protect people from a hostile AI that finds a way to catch up. Also, in case of war, starships may be prestigious and highly vulnerable targets: an unmanned rocket will always be faster than a spaceship. If arks are sent to several nearby stars, this does not ensure their secrecy, as the destinations will be known in advance. A phase transition of the vacuum, an explosion of the Sun or Jupiter, or another extreme event could also destroy the spaceship. See e.g. A. Bolonkin, “Artificial Explosion of Sun. AB-Criterion for Solar Detonation” http://www.scribd.com/doc/24541542/Artificial-Explosion-of-S…Detonation

15. However, the spaceship is too expensive a form of protection against many other risks that do not require removal to such a distance. People could hide from almost any pandemic on well-isolated islands in the ocean. People could hide on the Moon from gray goo, an asteroid collision, a supervolcano, or irreversible global warming. The ark-spaceship will carry with it the problems of genetic degradation and the propensity for violence and self-destruction, as well as problems associated with the limited human outlook and cognitive biases. A spaceship would only aggravate the problems of resource depletion, wars, and the arms race. Thus, the set of global risks against which the spaceship is the best protection is quite narrow.

16. And most importantly: does it make sense to begin this project now? In any case, there is no time to finish it before new risks become real and new ways of building spaceships using nanotech appear.

Of course, it is easy to envision a nano- and AI-based Ark: it would be as small as a grain of sand, carry only one human egg or even just DNA information, and could self-replicate. The main problem is that it could be created only AFTER the most dangerous period of human existence, which is the period just before the Singularity.
