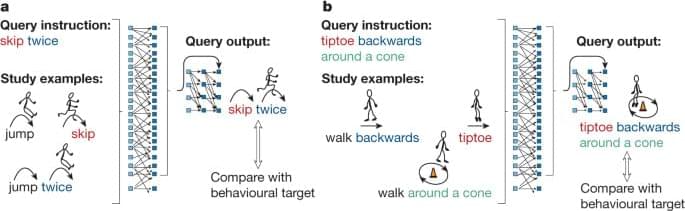

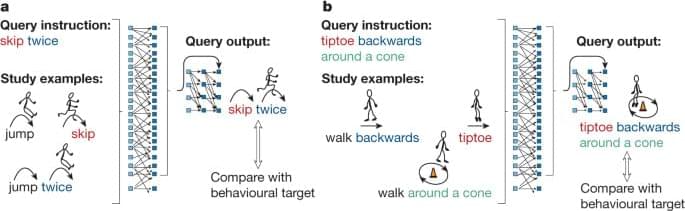

The power of human language and thought arises from systematic compositionality, the algebraic ability to understand and produce novel combinations from known components. Fodor and Pylyshyn [1] famously argued that artificial neural networks lack this capacity and are therefore not viable models of the mind. Neural networks have advanced considerably in the years since, yet the systematicity challenge persists. Here we successfully address Fodor and Pylyshyn’s challenge by providing evidence that neural networks can achieve human-like systematicity when optimized for their compositional skills. To do so, we introduce the meta-learning for compositionality (MLC) approach for guiding training…

Over 35 years ago, when Fodor and Pylyshyn raised the issue of systematicity in neural networks [1], today’s models [19] and their language skills were probably unimaginable. As a credit to Fodor and Pylyshyn’s prescience, the systematicity debate has endured. Systematicity continues to challenge models [11–18] and motivates new frameworks [34–41]. Preliminary experiments reported in Supplementary Information 3 suggest that systematicity is still a challenge, or at the very least an open question, even for recent large language models such as GPT-4. To resolve the debate, and to understand whether neural networks can capture human-like compositional skills, we must compare humans and machines side-by-side, as in this Article and other recent work [7, 42, 43]. In our experiments, we found that the most common human responses were algebraic and systematic in exactly the ways that Fodor and Pylyshyn [1] discuss. However, people also relied on inductive biases that sometimes support the algebraic solution and sometimes deviate from it; indeed, people are not purely algebraic machines [3, 6, 7]. We showed how MLC enables a standard neural network optimized for its compositional skills to mimic or exceed human systematic generalization in a side-by-side comparison. MLC shows much stronger systematicity than neural networks trained in standard ways, and shows more nuanced behaviour than pristine symbolic models. MLC also allows neural networks to tackle other existing challenges, including making systematic use of isolated primitives [11, 16] and using mutual exclusivity to infer meanings [44].

Our use of MLC for behavioural modelling relates to other approaches for reverse engineering human inductive biases. Bayesian approaches enable a modeller to evaluate different representational forms and parameter settings for capturing human behaviour, as specified through the model’s prior [45]. These priors can also be tuned with behavioural data through hierarchical Bayesian modelling [46], although the resulting set-up can be restrictive. MLC shows how meta-learning can be used like hierarchical Bayesian models for reverse-engineering inductive biases (see ref. 47 for a formal connection), although with the aid of neural networks for greater expressive power. Our research adds to a growing literature, reviewed previously [48], on using meta-learning for understanding human [49–51] or human-like behaviour [52–54]. In our experiments, only MLC closely reproduced human behaviour with respect to both systematicity and biases, with the MLC (joint) model best navigating the trade-off between these two blueprints of human linguistic behaviour. Furthermore, MLC derives its abilities through meta-learning: neither systematic generalization nor the human biases are inherent properties of the neural network architecture; instead, both are induced from data.

Source: “Human-like systematic generalization through a meta-learning neural network”