#homelandsecurity #innovation

Having been involved in the creation of the Department of Homeland Security’s Science & Technology Directorate, and with decades of experience working at the intersection of government, industry, and academia, I have arrived at a simple but important conclusion: innovation in homeland security doesn’t happen within any single sector. Instead, it thrives where mission, research, and engineering come together.

Convergence is the catalyst. Cyber defense, autonomous systems, identity management, quantum computing, and photonics are all technologies that didn’t develop in isolation. Their progress came from different sectors working together toward shared goals, managing risk jointly, and focusing on practical use. The homeland security enterprise is inherently multi-sectoral: government defines mission needs, industry builds scalable solutions, and academia provides the necessary research. Real innovation happens where these roles intersect.

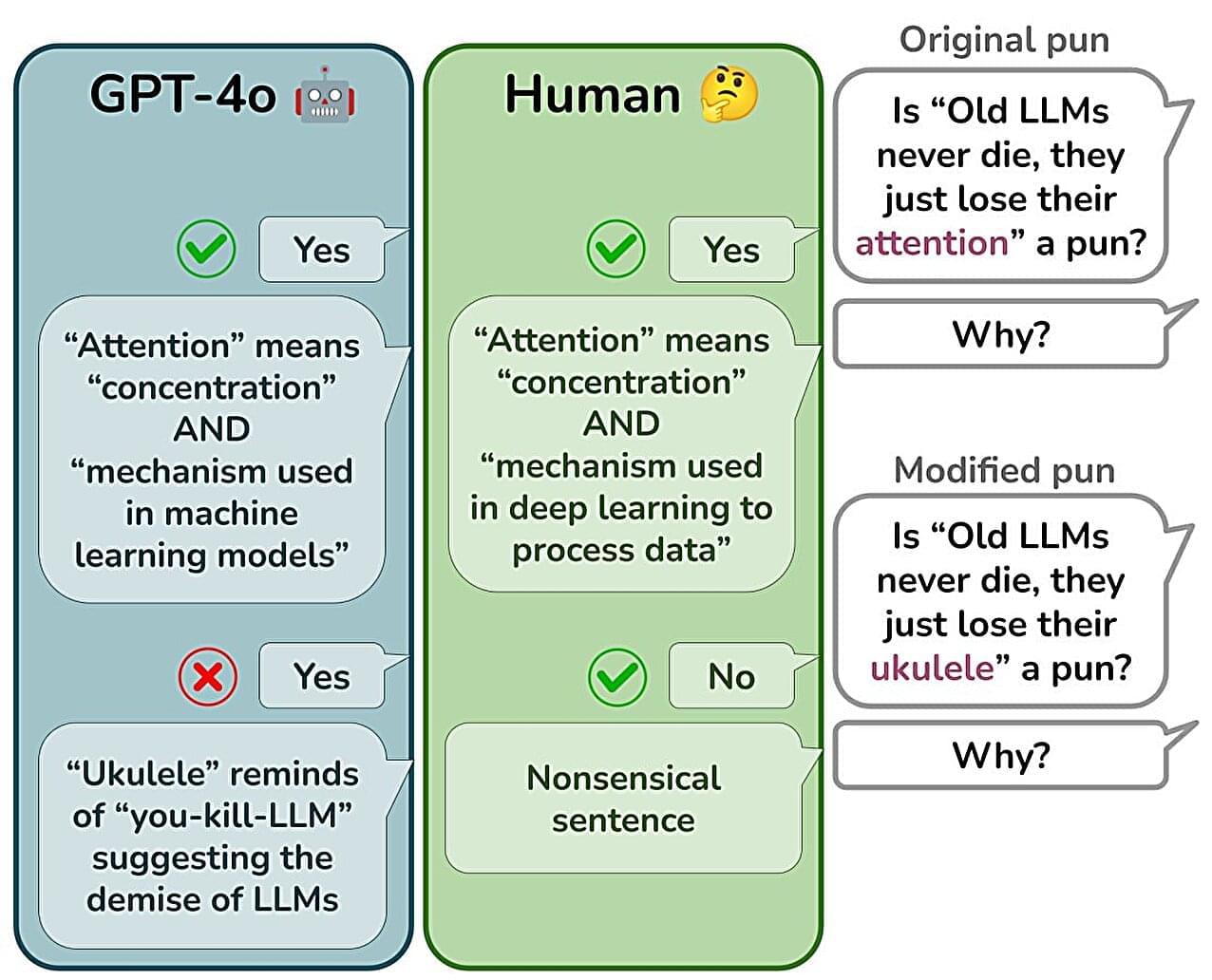

Empirical data underscores the importance of this alignment. Research on cyber behavior, for instance, shows that organizational culture, national context, and employee backgrounds significantly affect risk outcomes. In practical terms, this means secure systems cannot be developed in isolation: the human and institutional context matters as much as technical advances.