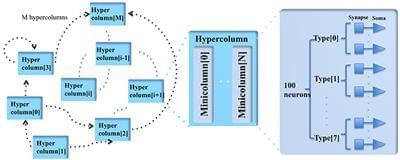

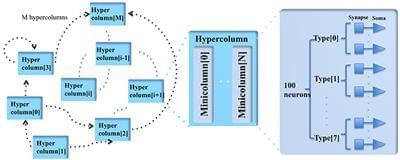

This cortex simulator is designed for large-scale, structurally connected spiking neural networks, such as complex models of various areas of the cortex. The main novelty of this work is the abstraction of a neuromorphic architecture into clusters represented by minicolumns and hypercolumns, analogous to the fundamental structural units observed in neurobiology. Without this approach, simulating large-scale fully connected networks would require prohibitively large memory to store look-up tables for point-to-point connections. Instead, we use an architecture based on the structural connectivity of the neocortex, so that all the required parameters and connections can be stored in on-chip memory. The cortex simulator can be reconfigured to simulate different neural networks by reprogramming the memory, without any change to the hardware structure. A hierarchical communication scheme allows one neuron to have a fan-out of up to 200k neurons. As a proof of concept, an implementation on a single Altera Stratix V FPGA was able to simulate 20 million to 2.6 billion leaky integrate-and-fire (LIF) neurons in real time. We verified the system by emulating a simplified auditory cortex with 100 million neurons. This cortex simulator achieved a low power dissipation of 1.62 μW per neuron. With the advent of commercially available FPGA boards, our system offers an accessible and scalable tool for the design, real-time simulation, and analysis of large-scale spiking neural networks.
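To make the memory argument concrete, the following is a back-of-the-envelope sketch, not the authors' actual memory layout. It compares a flat point-to-point look-up table (one stored target address per synapse) with a hypothetical cluster scheme in which each hypercolumn stores only a list of target hypercolumns shared by all of its neurons. The network size (100 million neurons) and fan-out (200k) are taken from the figures above, but combining them is an assumption, as are the cluster sizes of 100 neurons per minicolumn and 100 minicolumns per hypercolumn; the function names are illustrative only.

```python
import math


def address_bits(n_items: int) -> int:
    """Number of bits needed to address one of n_items entries."""
    return max(1, math.ceil(math.log2(n_items)))


def point_to_point_lut_bits(n_neurons: int, fan_out: int) -> int:
    """Flat look-up table: every neuron stores the address of each of its targets."""
    return n_neurons * fan_out * address_bits(n_neurons)


def clustered_lut_bits(n_neurons: int, fan_out: int,
                       neurons_per_minicolumn: int,
                       minicolumns_per_hypercolumn: int) -> int:
    """Hypothetical cluster scheme (assumption): one list of target hypercolumns
    per hypercolumn, shared by every neuron in that hypercolumn."""
    neurons_per_hc = neurons_per_minicolumn * minicolumns_per_hypercolumn
    n_hypercolumns = math.ceil(n_neurons / neurons_per_hc)
    # A fan-out of `fan_out` neurons covers this many whole target hypercolumns.
    targets_per_hc = math.ceil(fan_out / neurons_per_hc)
    return n_hypercolumns * targets_per_hc * address_bits(n_hypercolumns)


if __name__ == "__main__":
    N, FAN_OUT = 100_000_000, 200_000  # figures quoted in the abstract
    flat = point_to_point_lut_bits(N, FAN_OUT)
    # 100 neurons per minicolumn and 100 minicolumns per hypercolumn are assumptions.
    clustered = clustered_lut_bits(N, FAN_OUT, 100, 100)
    print(f"point-to-point LUT: {flat / 8 / 1e12:.1f} TB")
    print(f"clustered table:    {clustered / 8 / 1e3:.1f} kB")
```

Under these assumptions the flat table needs about 67.5 TB, while the clustered table needs about 350 kB, small enough for on-chip memory. The sketch deliberately ignores how individual synapses inside a target hypercolumn are selected and weighted; its only point is that storage scales with the number of clusters rather than with the number of synapses.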

Our inability to simulate neural networks in software on a scale comparable to the human brain (10¹¹ neurons, 10¹⁴ synapses) is impeding progress toward understanding signal processing in large networks in the brain and toward building applications based on that understanding. A small-scale linear approximation of a large spiking neural network cannot provide sufficient information about the global behavior of such highly nonlinear networks. Hence, in addition to smaller-scale systems with detailed software or hardware neural models, it is necessary to develop a hardware architecture that can simulate neural networks comparable in scale to the human brain, using models with an intermediate level of biological detail, and that can run these simulations quickly, preferably in real time so that the simulation can interact with the environment.