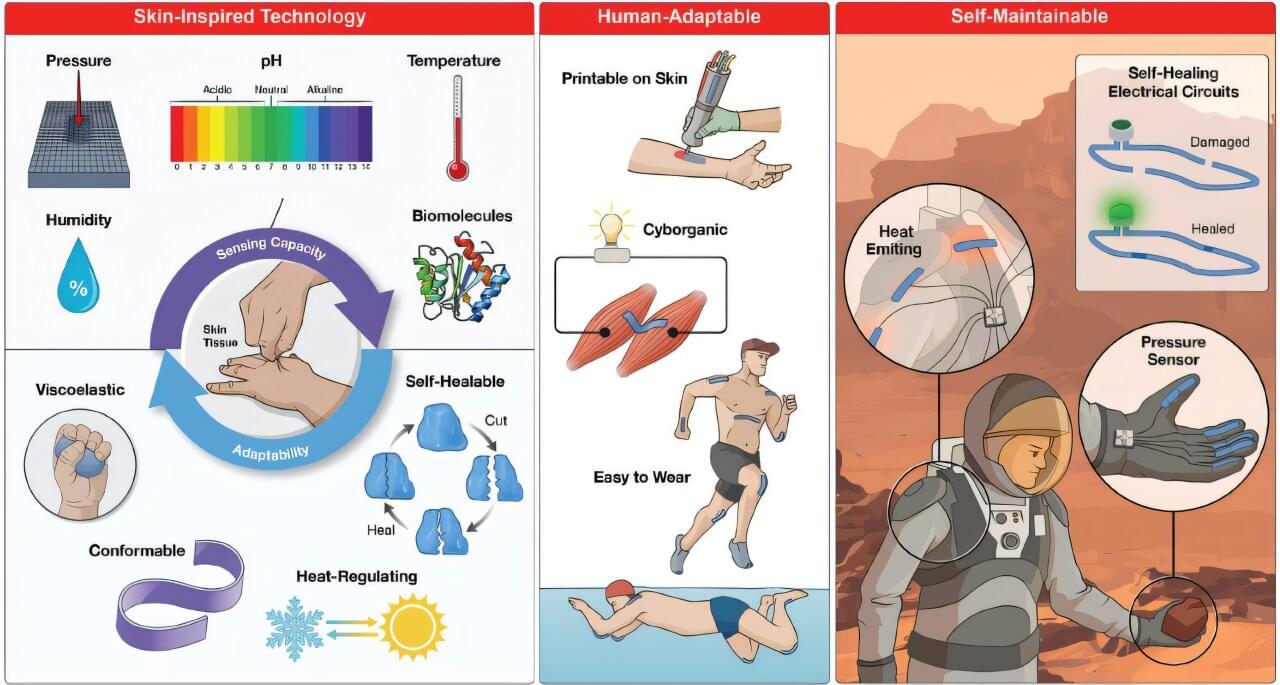

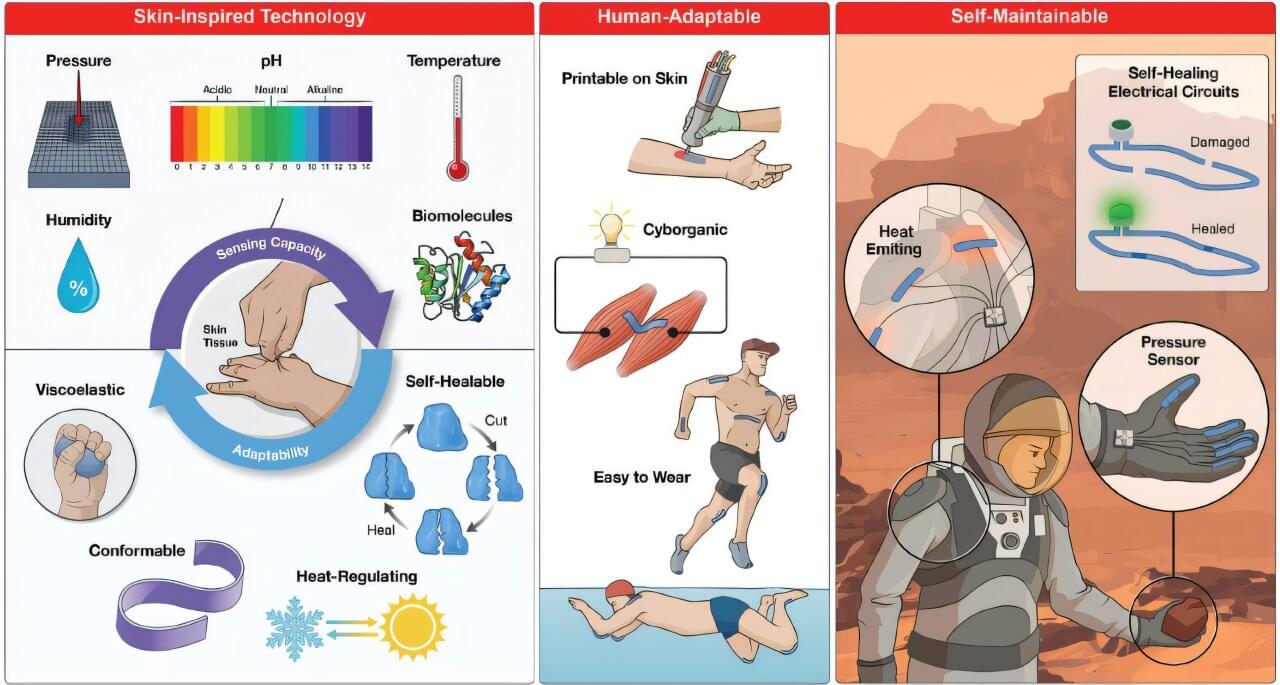

Researchers at DTU have developed a new kind of electronic material that behaves almost exactly like human skin. The substance could be useful in soft robotics, medicine, and health care.

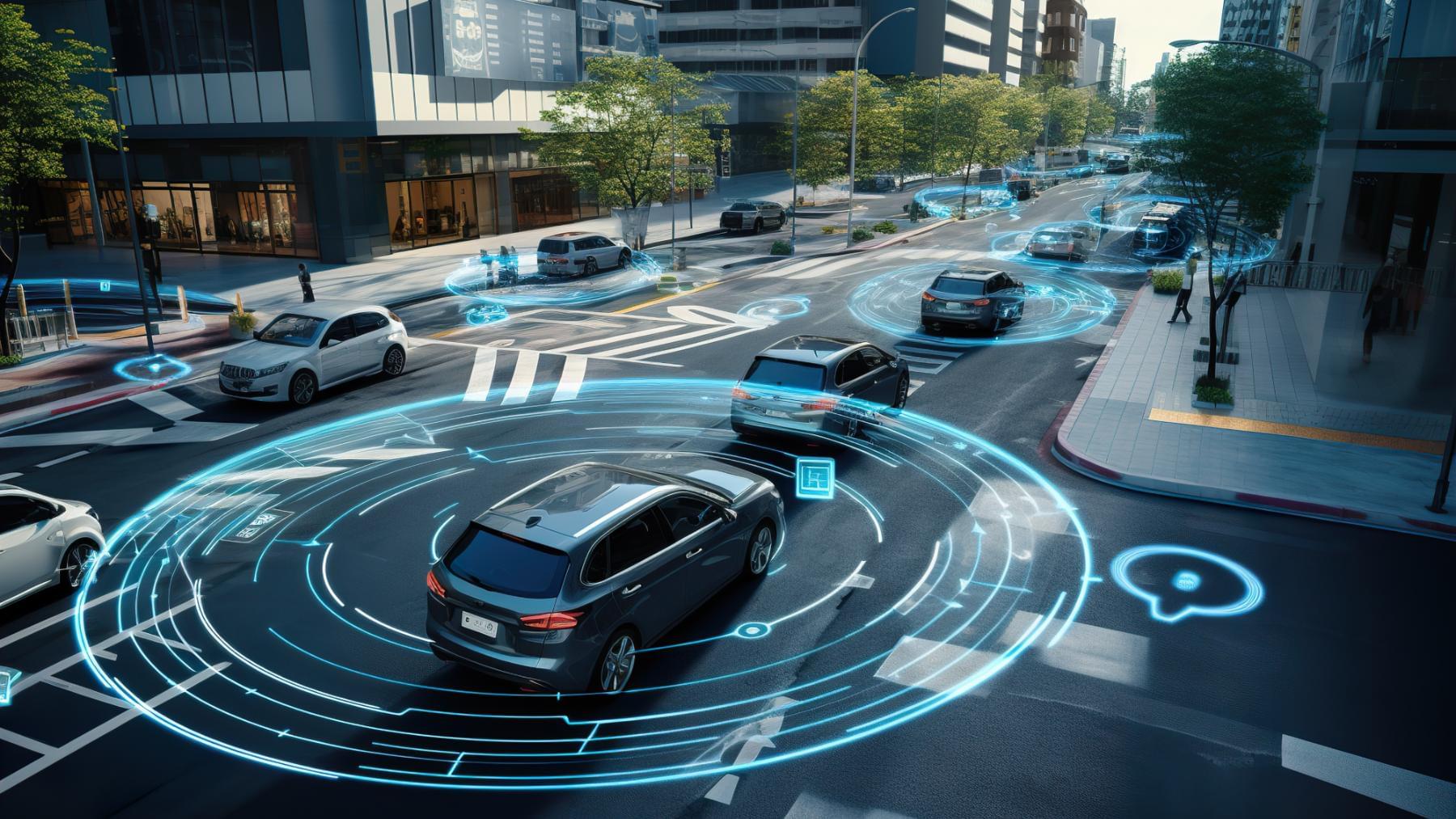

Laser technology is used in many areas where precise measurements are required, as well as in communication. This makes lasers important for everything from self-driving cars to fiber-optic internet and the detection of gases in the air.

Now, a research group has come up with a new type of laser that solves several problems associated with current-day lasers. The group is led by Associate Professor Johann Riemensberger at NTNU’s Department of Electronic Systems.

“Our results can give us a new type of laser that is both fast, relatively cheap, powerful and easy to use,” says Riemensberger.

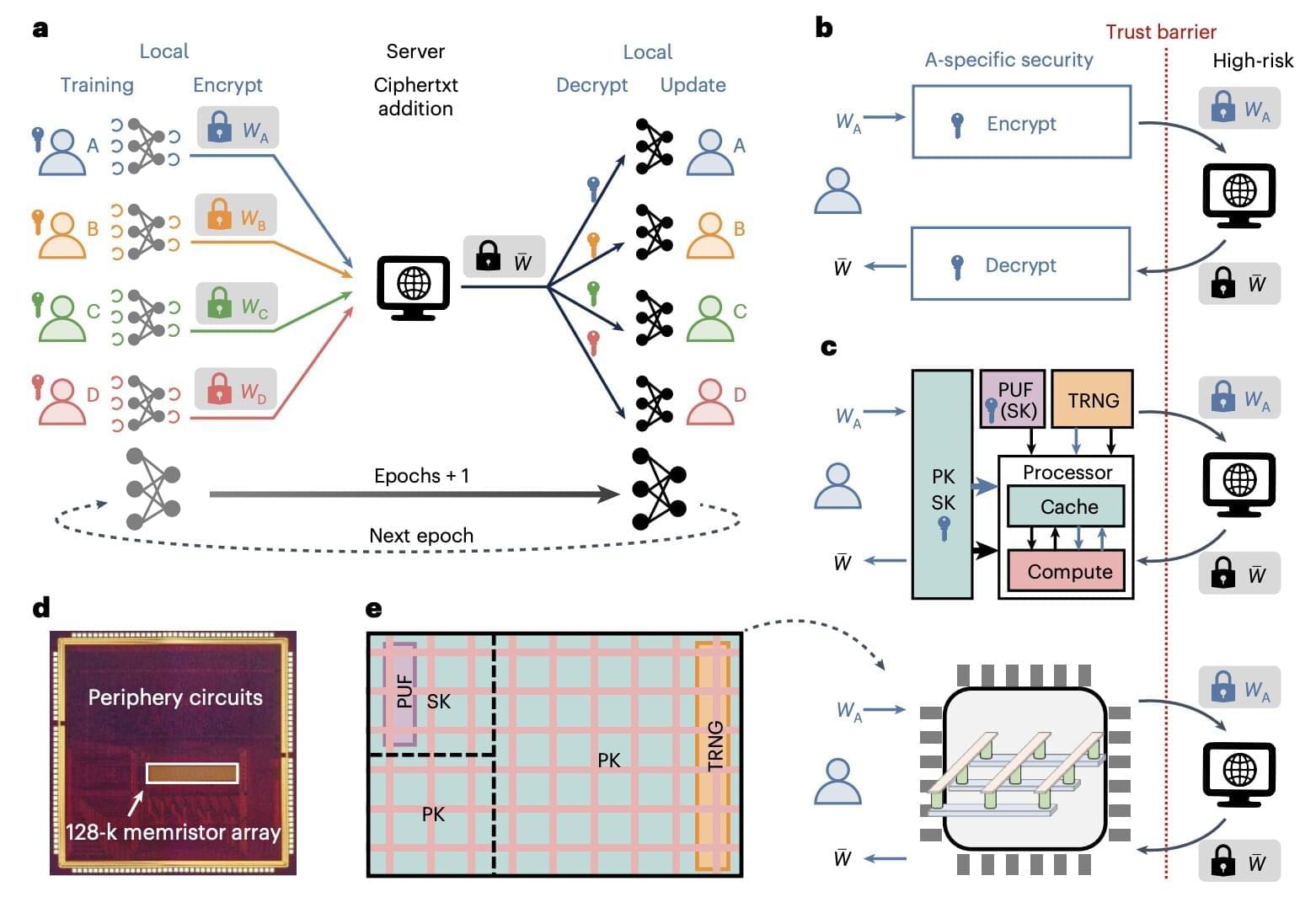

In recent decades, computer scientists have been developing increasingly advanced machine learning techniques that can learn to predict specific patterns or effectively complete tasks by analyzing large amounts of data. Yet some studies have highlighted the vulnerabilities of some AI-based tools, demonstrating that the sensitive information they are fed could potentially be accessed by malicious third parties.

A machine learning approach that could provide greater data privacy is federated learning, which entails the collaborative training of a shared neural network by various users or parties that are not required to exchange any raw data with each other. This technique could be particularly advantageous when applied in sectors that can benefit from AI but that are known to store highly sensitive user data, such as health care and finance.
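To make the idea concrete, here is a minimal sketch of federated averaging, one common way to implement federated learning. It is an illustration only and not the method used in any study mentioned here: the function names, the tiny linear model, and the synthetic data are all assumptions made for the example. Each client trains on its own data, and only the resulting model weights, never the raw data, are sent back and averaged.

```python
import random

def local_update(weights, data, lr=0.1, epochs=5):
    """Train y = w*x + b by gradient descent using one client's private data."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

def federated_average(client_weights, client_sizes):
    """Combine client models, weighting each by its number of samples."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)

def train_federated(clients, rounds=50):
    """Run several rounds; the server only ever sees weights, not data."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        sizes = [len(data) for data in clients]
        global_weights = federated_average(updates, sizes)
    return global_weights

def make_client(n, rng):
    """Hypothetical private dataset: noisy samples of y = 3x + 1."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1, 1)
        data.append((x, 3.0 * x + 1.0 + rng.gauss(0, 0.01)))
    return data

rng = random.Random(0)
clients = [make_client(n, rng) for n in (20, 50, 30)]
w, b = train_federated(clients)
print(f"learned w={w:.3f}, b={b:.3f}")
```

In this toy setup, the three clients jointly recover a model close to the underlying line even though no client ever shares its samples. Real deployments typically add protections such as secure aggregation or encryption on top, since model updates themselves can leak information.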

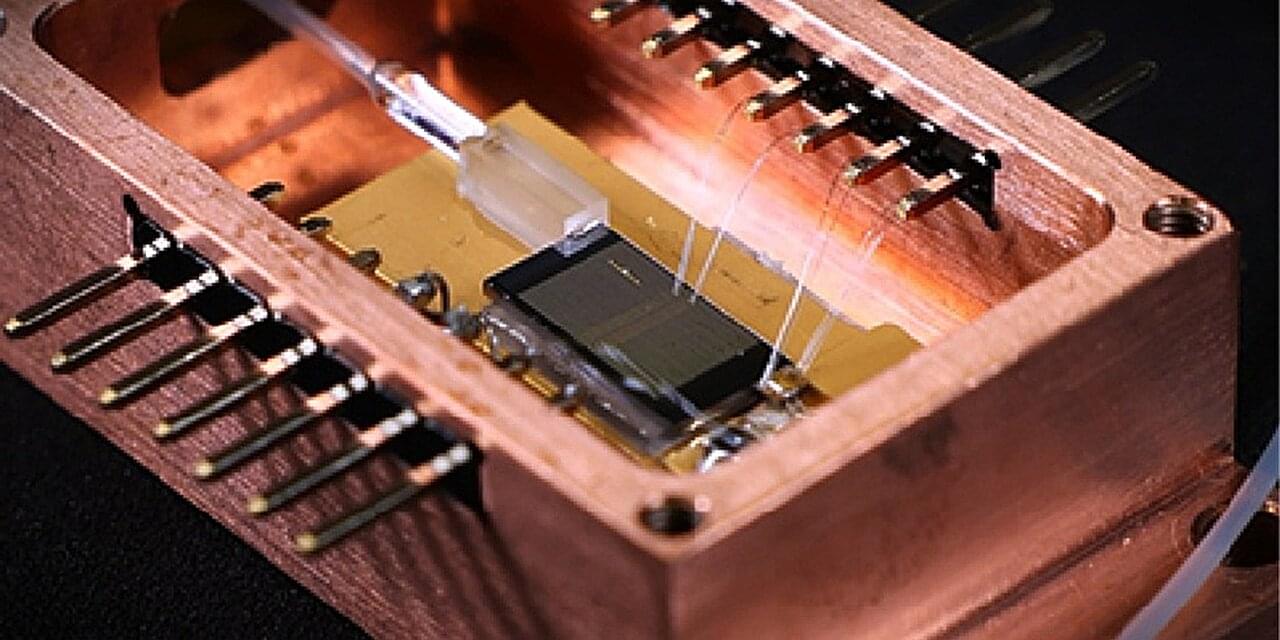

Researchers at Tsinghua University, the China Mobile Research Institute, and Hebei University recently developed a new compute-in-memory chip for federated learning. The chip is based on memristors, non-volatile electronic components that can both perform computations and store information by adapting their resistance based on the electrical current that has flowed through them in the past. Their proposed chip, outlined in a paper published in Nature Electronics, was found to boost both the efficiency and security of federated learning approaches.

Google’s Imagen 4, the company’s state-of-the-art text-to-image model, is rolling out for free, but only on AI Studio.

In a blog post, Google announced the rollout of the new Imagen 4 model, but reminded users that it’s free for a “limited time” only.

Compared with the previous text-to-image model, Imagen 4 offers significant improvements in generation quality.

A blue-and-white Waymo van rolls up to a stoplight near Austin’s South Congress Avenue, sensors spinning in the sun. In three months, that van, and every other driverless car in Texas, will need a brand-new permission slip taped to its dash. Governor Greg Abbott has signed SB 2807, a bill that for the first time gives the Texas Department of Motor Vehicles gatekeeper power over autonomous vehicles.

Starting September 1, 2025, any company that wants to run a truly driver-free car—robo-taxi, delivery pod, or freight hauler—must first snag a state-issued permit. To qualify, operators have to file a safety and compliance plan that spells out:

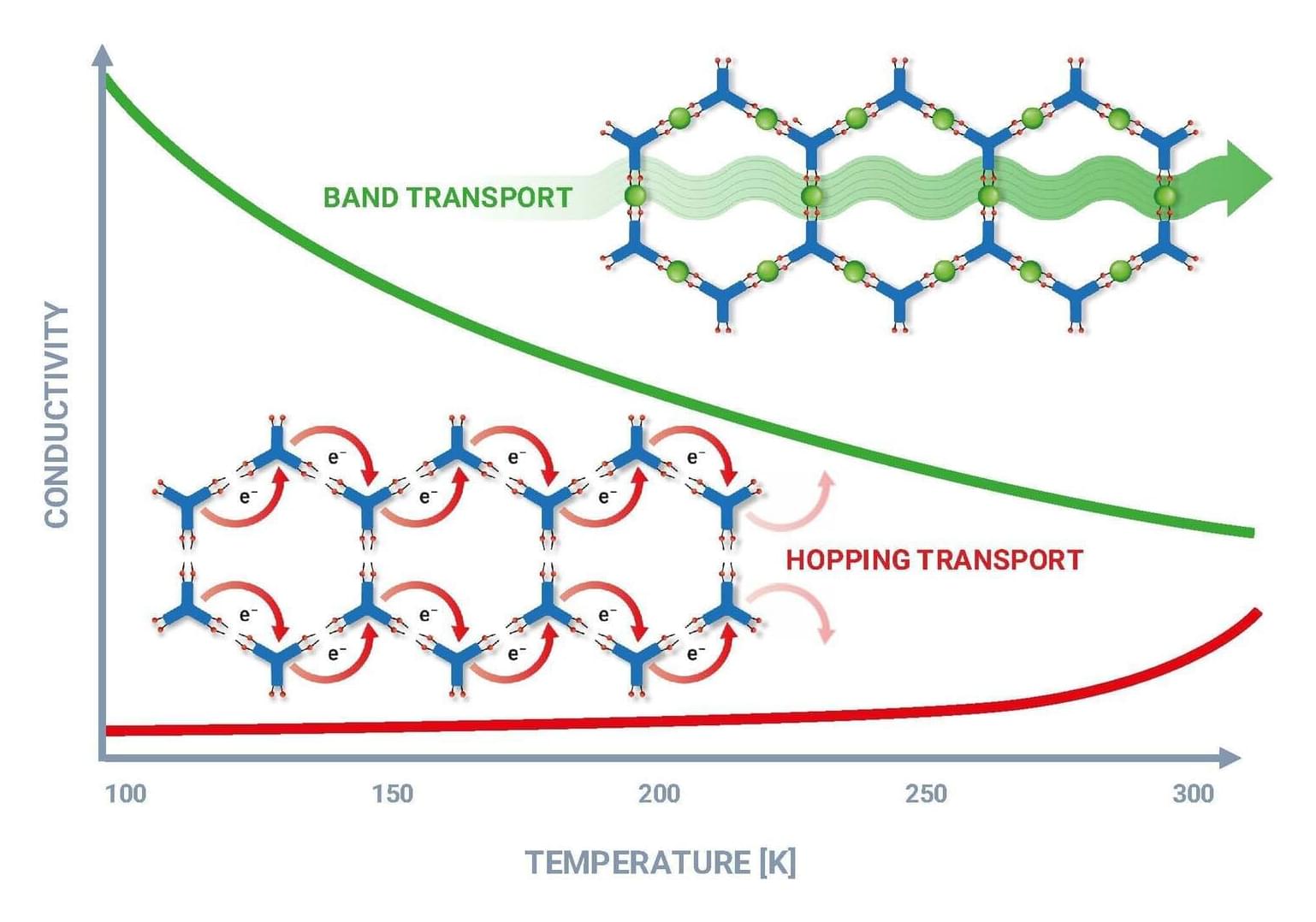

Metal-organic frameworks (MOFs) are characterized by high porosity and structural versatility. They have enormous potential, for example, for applications in electronics. However, their low electrical conductivity has so far greatly restricted their adoption.

Using AI and robot-assisted synthesis in a self-driving laboratory, researchers from Karlsruhe Institute of Technology (KIT), together with colleagues in Germany and Brazil, have now succeeded in producing an MOF thin film that conducts electricity like a metal. This opens up new possibilities in electronics and energy storage, from sensors and quantum materials to functional materials.

The team reports on this work in the journal Materials Horizons.

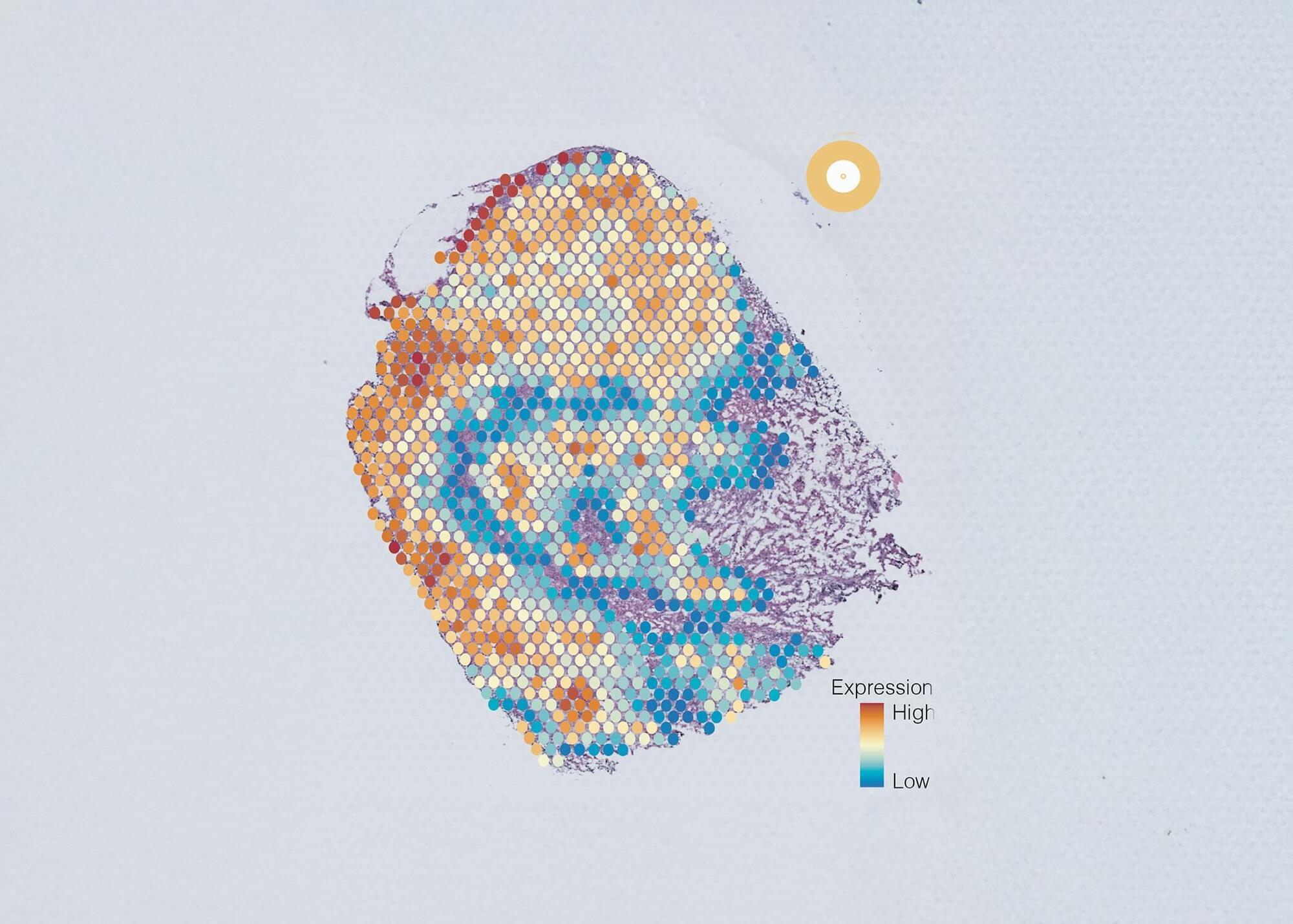

A multinational team of researchers, co-led by the Garvan Institute of Medical Research, has developed and tested a new AI tool to better characterize the diversity of individual cells within tumors, opening doors for more targeted therapies for patients.

Findings on the development and use of the AI tool, called AAnet, have been published in Cancer Discovery.

Tumors aren’t made up of just one cell type—they’re a mix of different cells that grow and respond to treatment in different ways. This diversity, or heterogeneity, makes cancer harder to treat and can in turn lead to worse outcomes, especially in triple-negative breast cancer.

This is something that I often wonder about, because a model’s hardcore reasoning ability doesn’t necessarily translate into a fun, informative, and creative experience. Most queries from average users are probably not going to be rocket science. There isn’t much research yet on how to effectively evaluate a model’s creativity, but I’d love to know which model would be the best for creative writing or art projects.

Human preference testing has also emerged as an alternative to benchmarks. One increasingly popular platform is LMArena, which lets users submit questions, compare responses from different models side by side, and then pick the one they like best. Still, this method has its flaws. Users sometimes reward the answer that sounds more flattering or agreeable, even if it’s wrong. That can incentivize “sweet-talking” models and skew results in favor of pandering.

AI researchers are beginning to realize—and admit—that the status quo of AI testing cannot continue. At the recent CVPR conference, NYU professor Saining Xie drew on historian James Carse’s Finite and Infinite Games to critique the hypercompetitive culture of AI research. An infinite game, he noted, is open-ended—the goal is to keep playing. But in AI, a dominant player often drops a big result, triggering a wave of follow-up papers chasing the same narrow topic. This race-to-publish culture puts enormous pressure on researchers and rewards speed over depth, short-term wins over long-term insight. “If academia chooses to play a finite game,” he warned, “it will lose everything.”