The Age of Virtuous Machines

by Lifeboat Foundation Scientific Advisory Board member J. Storrs “Josh” Hall.

In the “hard takeoff” scenario, a psychopathic AI suddenly emerges at a superhuman level, achieving universal dominance. Hall suggests an alternative: we’ve gotten better because we’ve become smarter, so AIs will evolve “unselfish genes” and hyperhuman morality. More honest, capable of deeper understanding, and free of our animal heritage and blindnesses, the children of our minds will grow better and wiser than us, and we will have a new friend and guide — if we work hard to earn the privilege of associating with them.

This is chapter 20 of Beyond AI: Creating the Conscience of the Machine.

“To you, a robot is a robot. Gears and metal. Electricity and positrons. Mind and iron! Human-made! If necessary, human-destroyed. But you haven’t worked with them, so you don’t know them. They’re a cleaner, better breed than we are.”

Isaac Asimov, I, Robot

Ethical AIs

Over the past decade, the concept of a technological singularity has become better understood. The basic idea is that the process of creating AI and other technological change will be accelerated by AI itself, so that sometime in the coming century the pace of change will become so rapid that we mere mortals won’t be able to keep up, much less control it.

British statistician and colleague of Turing I. J. Good wrote in 1965, “Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion’, and the intelligence of man would be left far behind.” The disparate intellectual threads from which the modern concept is woven, including the word “singularity”, were pulled together by Vernor Vinge in 1993. More recently it was the subject of a best-selling book by Ray Kurzweil. There is even a reasonably well-funded think tank, the Singularity Institute for Artificial Intelligence (SIAI), whose sole concern is singularity issues.

It is common (although not universal) in Singularity studies to worry about autogenous AIs. The SIAI, for example, makes it a top concern, whereas Kurzweil is more sanguine that AIs will arise by progress along a path enabled by neuroscience and thus be essentially human in character. The concern, among those who share it, is that epihuman AIs in the process of improving themselves might remove any conscience or other constraint we program into them, or they might simply program their successors without them.

But it is in fact we, the authors of the first AIs, who stand at the watershed. We cannot modify our brains (yet) to alter our own consciences, but we are faced with the choice of building our creatures with or without them. An AI without a conscience, by which I mean both the innate moral paraphernalia in the mental architecture and a culturally inherited ethic, would be a superhuman psychopath.

Prudence, indeed, will dictate that superhuman psychopaths should not be built; however, it seems almost certain someone will do it anyway, probably within the next two decades. Most existing AI research is completely pragmatic, without any reference to moral structures in cognitive architectures. That is to be expected: just getting the darn thing to be intelligent is as hard a problem as we can handle now, and there is time enough to worry about the brakes after the engine is working.

As I noted before, much of the most advanced research is sponsored by the military or corporations. In the military, the notion of an autonomous machine being able to question its orders on moral grounds is anathema. In corporate industry, the top goal seems likely to be the financial benefit of the company. Thus, the current probable sources of AI will not adhere to a universally adopted philanthropic formulation, such as Asimov’s Three Laws. The reasonable assumption then is that a wide variety of AIs with differing goal structures will appear in the coming decades.

Hard Takeoff

Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended.

Vernor Vinge, 1993

A subtext of the singularitarian concern is the possibility that a (psychopathic) AI could suddenly emerge at a superhuman level, owing to positive feedback in its autogenous capabilities. This scenario is sometimes referred to as a “hard takeoff”. In its more extreme versions, the concept is that a hyperhuman AI could appear virtually overnight and be so powerful as to achieve universal dominance. Although the scenario usually involves an AI rapidly improving itself, it might also happen by virtue of a longer process kept secret until sprung on the world, as in the movie Colossus: The Forbin Project.

The first thing that either version of the scenario requires is the existence of computer hardware capable of running the hyperhuman AI. By my best estimate, hardware for running a diahuman AI (somewhere across the broad range of human capability) currently exists, but is represented by the top ten or so supercomputers in the world. These are multimillion-dollar installations, and the dollars were not spent to do AI experiments. And even if someone were to pay to dedicate, say, an IBM Blue Gene or Google’s fabled grid of stock PCs to running an AI full-time, they would only approximate a normal human intelligence. There would have to be a major project to build the hardware of a seriously epihuman, much less hyperhuman, AI with current computing technology.

Second, even if the hardware were available, the software is not. The fears of a hard takeoff are based on the notion that an early superintelligence would be able to write smarter software faster for the next AI, and so on. It does seem likely that a properly structured AI could be a better programmer than a human of otherwise comparable cognitive abilities, but remember that as of today, automatic programming remains one of the most poorly developed of the AI subfields. Any reasonable extrapolation of current practice predicts that early human-level AIs will be secretaries and truck drivers, not computer science researchers or even programmers.

Even when a diahuman AI computer scientist is achieved, it will simply add one more scientist to the existing field, which is already bending its efforts toward improving AI. That won’t speed things up much. Only when the total AI devoting its efforts to the project begins to rival the intellectual resources of the existing human AI community — in other words, being already epihuman — will there be a really perceptible acceleration. We are more likely to see an acceleration from a more prosaic source first: once AI is widely perceived as having had a breakthrough, it will attract more funding and human talent.

Third, intelligence does not spring fully formed like Athena from the forehead of Zeus. Even we humans, with the built-in processing power of a supercomputer at our disposal, take years to mature. Again, once mature, a human requires about a decade to become really expert in any given field, including AI programming. More to the point, it takes the scientific community some extended period to develop a theory, then the engineering community some more time to put it into practice.

Even if we had a complete and valid theory of mind, which we do not, putting it into software would take years; and the early versions would be incomplete and full of bugs. Human developers will need years of experience with early AIs before they get it right. Even then they will have systems that are the equivalent of slow, inexperienced humans.

Advances in software, similar to Moore’s law for hardware, are less celebrated and less precisely measurable, but nevertheless real. Advances in algorithmics have tended to produce software speedups roughly similar to hardware ones. Running this backward, we can say that the early software in any given field is much less efficient than later versions. The completely understood, tightly coded, highly optimized software of mature AI may run a human equivalent in real time on a 10 teraops machine. Early versions will not.

There are two wild-card possibilities to consider. First, rogue AIs could be developed using botnets, groups of hijacked PCs communicating via the Internet. These are available today from unscrupulous hackers and are widely used for sending spam and conducting DDoS attacks on Web sites. A best estimate of the total processing power on the Internet runs to 10,000 Moravec HEPP or 10 Kurzweil HEPP, although it is unlikely that any single coordinated botnet could collect even a fraction of 1 percent of that at any given time.

Moreover, the extreme forms of parallelism needed to use this form of computing, along with the communication latency involved, will tend to push the reasonable estimates toward the Kurzweil level (which is based on the human brain with its high-parallelism, slow-cycle time architecture). That, together with the progress of the increasingly sophisticated Internet security community, will make the development of AI software much harder in this mode than in a standard research setting. The “researchers” would have to worry about fighting for their computing resources as well as figuring out how to make the AI work — and the AI, to be able to extend their work, would have to do the same. Thus, while we can expect botnet AIs in the long run, they are unlikely to be first.

The second wild-card possibility is that Marvin Minsky is right. Almost every business and academic computing facility offers at least a Minsky HEPP. If an AI researcher found a simple, universal learning algorithm that allowed strong positive feedback into such a highly optimized form, it would find ample processing power available. And this could be completely aboveboard: a Minsky HEPP costs much less than a person is worth, economically.

Let me, somewhat presumptuously, attempt to explain Minsky’s intuition by an analogy: a bird is our natural example of the possibility of heavier-than-air flight. Birds are immensely complex: muscles, bones, feathers, nervous systems. But we can build working airplanes with tremendously fewer moving parts. Similarly, the brain can be greatly simplified, still leaving an engine capable of general conscious thought.

My own intuition is that Minsky is closer to being right than is generally recognized in the AI community, but computationally expensive heuristic search will turn out to be an unavoidable element of adaptability and autogeny. This problem will extend to any AI capable of the runaway feedback loop that singularitarians fear.

Moral Mechanisms

It is therefore most likely that a full decade will elapse between the appearance of the first genuinely general, autogenous AIs and the time they become significantly more capable than humans. This will indeed be a crucial period in history, but no one person, group, or even school of thought will control it.

The question instead is, what can be done to influence the process to put the AIs on the road to being a stable community of moral agents? A possible path is shown in Robert Axelrod’s experiments and in the original biological evolution of our own morality. In a world of autonomous agents who can recognize each other, cooperators can prosper and ultimately form an evolutionarily stable strategy.
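The dynamic described here can be sketched as a small iterated prisoner's dilemma round-robin. This is only an illustration, not a reconstruction of Axelrod's tournaments: the payoff values (3 each for mutual cooperation, 5 and 0 when one side defects on a cooperator, 1 each for mutual defection) are the ones conventionally used for this game, while the strategy set, function names, population mix, and round count are assumptions chosen for the example.

```python
from itertools import combinations

# Conventional prisoner's dilemma payoffs: (my_move, their_move) -> (my_score, their_score).
# "C" = cooperate, "D" = defect. Values are an assumption for illustration.
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def tit_for_tat(opponent_history):
    """Cooperate first, then copy the opponent's previous move."""
    return "C" if not opponent_history else opponent_history[-1]

def always_defect(opponent_history):
    """Defect unconditionally."""
    return "D"

def play(strategy_a, strategy_b, rounds=200):
    """Play one repeated match and return both total scores."""
    moves_a, moves_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        a = strategy_a(moves_b)  # each side sees only the other's past moves
        b = strategy_b(moves_a)
        gain_a, gain_b = PAYOFF[(a, b)]
        score_a += gain_a
        score_b += gain_b
        moves_a.append(a)
        moves_b.append(b)
    return score_a, score_b

def round_robin(population, rounds=200):
    """Every agent plays every other agent once; return total score per agent."""
    totals = [0] * len(population)
    for i, j in combinations(range(len(population)), 2):
        score_i, score_j = play(population[i], population[j], rounds)
        totals[i] += score_i
        totals[j] += score_j
    return totals

# Hypothetical population: three reciprocators and one defector.
totals = round_robin([tit_for_tat, tit_for_tat, tit_for_tat, always_defect])
print(totals)
```

In this toy population the defector narrowly wins each individual match against a reciprocator, yet finishes far behind in total score, because the reciprocators earn the mutual-cooperation payoff from one another on every round.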

Superintelligent AIs should be just as capable of understanding this as humans are. If their environment were the same as ours, they would ultimately evolve a similar morality; if we imbued them with it in the first place, it should be stable. Unfortunately, the environment they will inhabit will have some significant differences from ours.

The Bad News

Inhomogeneity

The disparities among the abilities of AIs could be significantly greater than those among humans and more correlated with an early “edge” in the race to acquire resources. This could negate the evolutionary pressure to reciprocal altruism.

Self-Interest

Corporate AIs will almost certainly start out self-interested, and evolution favors effective self-interest. It has been suggested by commentators such as Steven Pinker, Eliezer Yudkowsky, and Jeff Hawkins that AIs would not have the “baser” human instincts built in and thus would not need moral restraints. But it should be clear they could be programmed with baser instincts, and it seems likely that corporate ones will be aggressive, opportunistic, and selfish, and that military ones will be programmed with different but equally disturbing motivations.

Furthermore, it should be noted that any goal structure implies self-interest. Consider two agents, both with the ability to use some given resource. Unless the agents’ goals are identical, each will further its own goal more by using the resource for its own purposes and consider it at best suboptimal and possibly counterproductive for the resource to be controlled and used by the other agent toward some other goal. It should go without saying that specific goals can vary wildly even if both agents are programmed to seek, for example, the good of humanity.

The Good News

Intelligence Is Good

“There is but one good, namely, knowledge; and but one evil, namely ignorance.”

Socrates, from Diogenes Laertius’s Life of Socrates

As a matter of practical fact, criminality is strongly and negatively correlated with IQ in humans. The popular image of the tuxedo-wearing, suave jet-setter jewel thief to the contrary notwithstanding, almost all career criminals are of poor means as well as of lesser intelligence.

Nations where the rule of law has broken down are poor compared to more stable societies. A remarkable document published by the World Bank in 2006 surveys the proportions of natural resources, produced capital (such as factories and roads), and intangible capital (education of the people, value of institutions, rule of law, likelihood of saving without theft or confiscation). Here is a summary. Note that the wealth column is total value, not income.

| Income Group | Wealth per Capita | Natural Resources | Produced Capital | Intangible Capital |
|---|---|---|---|---|
| Low Income | $7,532 | $1,925 | $1,174 | $4,434 |
| Medium Income | $27,616 | $3,496 | $5,347 | $18,773 |
| High Income | $439,063 | $9,531 | $76,193 | $353,339 |

In a wealthy country, natural resources such as farmland are worth more but only by a small amount, mostly because they can be more efficiently used. The fraction of total wealth contributed by natural resources in a wealthy country is only 2 percent, as compared to 26 percent in a poor one. The vast majority of the wealth in high-income countries is intangible: it is further broken down by the report to show that roughly half of it represents people’s education and skills, and the other half the value of the institutions; in other words, the opportunities the society gives its citizens to turn efforts into value.
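The shares quoted in this paragraph follow directly from the per-capita figures in the summary table. A minimal sketch of the arithmetic, with the dictionary layout and the `share` helper being names invented here for illustration:

```python
# Per-capita wealth figures (US dollars) copied from the summary table.
WEALTH = {
    "Low Income": {"total": 7532, "natural": 1925, "produced": 1174, "intangible": 4434},
    "Medium Income": {"total": 27616, "natural": 3496, "produced": 5347, "intangible": 18773},
    "High Income": {"total": 439063, "natural": 9531, "produced": 76193, "intangible": 353339},
}

def share(group, component):
    """Fraction of a group's total per-capita wealth held in one component."""
    row = WEALTH[group]
    return row[component] / row["total"]

for group in WEALTH:
    natural_pct = round(100 * share(group, "natural"))
    intangible_pct = round(100 * share(group, "intangible"))
    print(f"{group}: natural {natural_pct}%, intangible {intangible_pct}%")
```

Rounded to whole percentages, natural resources come to 26 percent of wealth in the low-income group and 2 percent in the high-income group, matching the figures in the text, while intangible capital makes up about 80 percent of high-income wealth.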

Lying, cheating, and stealing are profitable only in the very short term. In the long run, honesty is the best policy; leaving cheaters behind and consorting with other honest creatures is the best plan. The smarter you are, the more likely you are to understand this and to conduct your affairs accordingly.

Original Sin

“We have met the enemy, and he is us!”

Porkypine in Walt Kelly’s Pogo

Developmental psychologists have sobering news for humankind, which echoes and explains the old phrase, “Someone only a mother could love.” Simply put, human babies are born to lie, cheat, and steal. As Matt Ridley put it in another connection, “Vervet monkeys, like two-year-olds, completely lack the capacity for empathy.” Law and custom recognize this as well: children are not held responsible for their actions until they are considerably older than two.

In fact, recent neuroscience research using brain scans indicates that consideration for other people’s feelings is still being added to the mental planning process up through the age of twenty.

Children are socialized out of the condition we smile at and call “childishness” (but think how differently we’d refer to an adult who acted, morally, like a two-year-old). Evolution and our genes cannot predict what social environment children will have to cope with, so they make children ready for the rawest and nastiest; they can grow out of it if they find themselves in civilization, but growing up mean is your best chance of survival in many places.

With AIs, we can simply reverse the default orientation: AIs can start out nice, then learn the arts of selfishness and revenge only if the situation demands it.

Unselfish Genes

Reproduction of AIs is likely to be completely different from that of humans. It will be much simpler just to copy the program. It seems quite likely that ways will be found to encode and transmit concepts learned from experience more efficiently than we do with language. In other words, AIs will probably be able to inherit acquired characteristics and to acquire substantial portions of their mentality from others in a way reminiscent of bacteria exchanging plasmids.

For these reasons, individual AIs are likely to be able to have the equivalent of both memories and personal experience stretching back in time before they were “born”, as experienced by many other AIs. To the extent that morality is indeed a summary encoding of lessons learned the hard way by our forebears, AIs could have a more direct line to it. The superego mechanisms by which personal morality trumps common sense should be less necessary, because the horizon effect for which it’s a heuristic will recede with wider experience and deeper understanding.

At the same time, AIs will lack some of the specific pressures, such as sexual jealousy, that we suffer from because of the sexual germ-line nature of animal genes. This may make some of the nastier features of human psychology unnecessary.

Cooperative Competition

For example, AIs could well be designed without the mechanism we seem to have whereby authority can short-circuit morality, as in the Milgram experiments [1]. This is the equipment that implements the distributed function of the pecking order. The pecking order had a clear, valuable function in a natural environment where the Malthusian dynamic held sway: in hard times, instead of all dying because evenly divided resources were insufficient, the haves survived and the have-nots were sacrificed. In order to implement such a stringent function without physical conflict that would defeat its purpose, some very strong internal motivations are tied to perceptions of status, prestige, and personal dominance.

1. In 1963 psychologist Stanley Milgram did some famous experiments to test the limits of people’s consciences when under the influence of an authority figure. The shocking results were that ordinary people will inflict torture on others simply because they were told to do so by a scientist in a lab coat.

On the one hand, the pecking order is probably responsible for saving humanity from extinction numerous times. It forms a large part of our collective character, will we or nill we. AIs without pecking-order feelings would see humans as weirdly alien (and we them).

On the other, the pecking order short-circuits our moral sense. It allows political and religious authority figures to tell us to do hideously immoral things that we would be horrified to do in other circumstances. It makes human slavery possible as a stable form of social organization, as is evident throughout many centuries of history.

And what’s more, it’s not necessary. Market economics is much better at resource allocation than the pecking order. The productivity of technology is such that the pecking order’s evolutionary premise no longer holds. Distributed learning algorithms, such as the scientific method or idea futures markets, do a better job than the judgment of a tribal chieftain.

Comparative Advantage

The economic law of comparative advantage states that cooperation between individuals of differing capabilities remains mutually beneficial. Suppose you are highly skilled and can make eight widgets per hour or four of the more complicated doohickeys. Your neighbor Joe does everything the hard way and can make one widget or one doohickey in an hour. You work an eight-hour day and produce sixty-four widgets, and Joe makes eight doohickeys. Then you trade him twelve widgets for the eight doohickeys. You get fifty-two widgets and eight doohickeys total, which would have taken you an extra half an hour in total to make yourself; and he gets twelve widgets, which he would have taken four extra hours to make!
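The arithmetic in this example can be checked with a short sketch. The hourly rates and traded quantities come from the paragraph above; the names `RATES`, `hours_needed`, and `opportunity_cost` are illustrative assumptions.

```python
from fractions import Fraction

# Hourly output rates from the example.
RATES = {
    "you": {"widget": 8, "doohickey": 4},
    "joe": {"widget": 1, "doohickey": 1},
}
WORKDAY_HOURS = 8

def hours_needed(worker, bundle):
    """Hours `worker` would need to produce every item in `bundle` alone."""
    return sum(Fraction(qty, RATES[worker][item]) for item, qty in bundle.items())

def opportunity_cost(worker, item, other):
    """Units of `other` the worker gives up to make one unit of `item`."""
    return Fraction(RATES[worker][other], RATES[worker][item])

# What each party holds after trading 12 widgets for 8 doohickeys.
your_bundle = {"widget": 64 - 12, "doohickey": 8}
joes_bundle = {"widget": 12}

# Extra hours each would have needed to make the same bundle without trade.
your_saving = hours_needed("you", your_bundle) - WORKDAY_HOURS
joes_saving = hours_needed("joe", joes_bundle) - WORKDAY_HOURS

print(f"You save {float(your_saving)} hours; Joe saves {float(joes_saving)} hours")
print("Widgets forgone per doohickey:",
      opportunity_cost("you", "doohickey", "widget"),
      "for you versus",
      opportunity_cost("joe", "doohickey", "widget"),
      "for Joe")
```

The second line of output shows why the trade works even though you are better at both tasks: a doohickey costs you two widgets of forgone output but costs Joe only one, so Joe is the lower-cost producer of doohickeys.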

In other words, even if AIs become much more productive than we are, it will remain to their advantage to trade with us and to ours to trade with them.

Unlimited Lifetime

“And behold joy and gladness, … eating flesh, and drinking wine: let us eat and drink; for to morrow we shall die.”

Isaiah 22:13 (KJV)

People have short-term planning horizons in many cases. Human mortality not only puts a limit on what we can reasonably plan for the future, but our even shorter-lived ancestors passed on genes that shortchange even that in terms of the instinctive (lack of) value we put on the future.

The individual lifetime of an AI is not arbitrarily limited. It has the prospect of living into the far future, in a world whose character its actions help create. People begin to think in longer range terms when they have children and face the question of what the world will be like for them. An AI can instead start out thinking about what the world will be like for itself and for any copies of itself it cares to make.

Besides the unlimited upside to gain, AIs will have an unlimited downside to avoid: forever is a long time to try to hide an illicit deed when dying isn’t the way you expect to escape retribution.

Broad-based Understanding

Epihuman, much less hyperhuman, AIs will be able to read and absorb the full corpus of writings in moral philosophy, especially the substantial recent work in evolutionary ethics, and understand it better than we do. They could study game theory; consider how much we have learned in just fifty years! They could study history and economics.

E. O. Wilson has a compelling vision of consilience, the unity of knowledge. As we fill in the gaps between our fractured fields of understanding, they will tend to regularize and correct one another. I have tried to show in a small way how science can come to inform our understanding of ethics. This is but the tiniest first step. But that step shows how much ethics is a key to anything else we might want to do and is thus as worthy of study as anything else.

Keep a Cool Head

“Violence is the last refuge of the incompetent.”

Isaac Asimov, Foundation

Remember Newcomb’s Problem, the game with the omniscient being (or team of psychologists) and the million- and thousand-dollar boxes. It’s the one that, in order to win it, you have to be able to “chain yourself down” in some way so you can’t renege at the point of choice. For this purpose, evolution has given humans the strong emotions.

Thirst for revenge, for example, is a way of guaranteeing any potential wrongdoers that you will make any sacrifice to get them back, even though it may cost you much and gain you nothing to do so. Here, the point of choice is after the wrong has been done — you are faced with an arduous, expensive, and quite likely dangerous pursuit and attack on the offender; rationally, you are better off forgetting it in many cases. In the lawless environment of evolution, however, a marauder who knew his potential victims were implacable revenge seekers would be deterred. But if there is a police force this is not as necessary, and the emotion to get revenge at any cost can be counterproductive.

So collective arrangements like police forces are a significantly better solution. There are many such cases where strong emotions are evolution’s solution to a problem, but we have found better ones. AIs could do better yet in some cases: the solutions to Newcomb’s Problem involving Open Source-like guarantees of behavior are a case in point.

In addition, the lack of the strong emotions can be beneficial in many cases. Anger, for example, is more often a handicap than a help in a world where complex interactions are more common than physical altercations. A classic example is poker, where the phrase “on tilt” is applied to a player who becomes frustrated and loses his cool analytical approach. A player “on tilt” makes aggressive plays instead of optimal ones and loses money.

With other solutions to Newcomb’s Problem available, AIs could avoid having strong emotions, such as anger, with their concomitant infelicities.

Mutual Admiration Societies

Moral AIs would be able to track other AIs in much greater detail than humans do one another and for vastly more individuals. This allows a more precise formation and variation of cooperating groups.

Self-selecting communities of cahooting AIs would be able to do the same thing that tit-for-tat did in Axelrod’s tournaments: prosper by virtue of cooperating with other “nice” individuals. Humans, of course, do the same, but AIs would be able to do it more reliably and on a larger scale.

A Cleaner, Better Breed

“Reflecting on these questions, I have come to a conclusion which, however implausible it may seem on first encounter, I hope to leave the reader convinced: not only could an android be responsible and culpable, but only an android could be.”

Joseph Emile Nadeau

AIs will (or at least could) have considerably better insight into their own natures and motives than humans do. Any student of human nature is well aware how often we rationalize our desires and actions. What’s worse, it turns out that we are masters of self-deceit: given our affective display subsystems, the easiest way to lie undetectably is to believe the lie you’re telling! We are, regrettably, very good at doing exactly that.

One of the defining characteristics of the human mind has been the evolutionary arms race between the ability to deceive and the ability to penetrate subterfuge. It is all too easy to imagine this happening with AIs (as it has with governments: think of the elaborate spying and counterspying during the Cold War). On the other hand, many of the other moral advantages listed above, including Open-Source honesty and longer and deeper memories, could well mean that mutual honesty societies might be a substantially winning strategy.

Thus, an AI may have the ability to be more honest than humans, who believe our own confabulations.

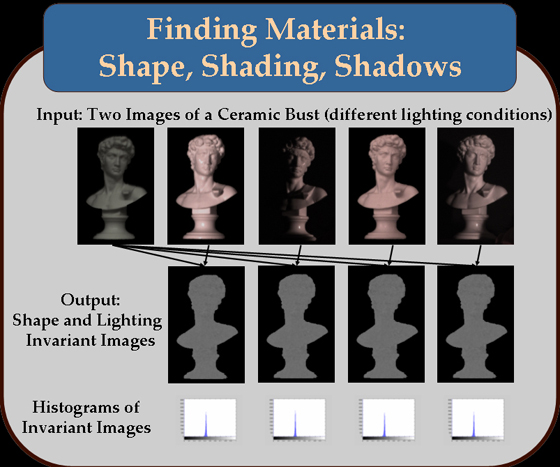

Invariants

How can we know that our AIs will retain the good qualities we give them once they have improved themselves beyond recognition in the far future? Our best bet is a concept from math called an invariant: a property of something that remains the same even when the thing itself changes. We need to understand what desirable traits are likely to be invariant across the process of radical self-improvement, and start with those.

Knowledge of economics and game theory are likely candidates, as is intelligence itself. An AI that understands these things and their implications is unlikely to consider forgetting them an improvement. The ability to be guaranteeably trustworthy is likewise valuable and wouldn’t be thrown away. Strong berserker emotions are clearly not a smart thing to add if you don’t have them (and wouldn’t form the behavior guarantees that they do in humans anyway, since the self-improving AI could always edit them out!), so lacking them is an invariant where usable alternatives exist.

Self-interest is another property that is typically invariant with, or indeed reinforced by, the evolutionary process. Surprisingly, however, even though I listed it with the bad news above, it can form a stabilizing factor in the right environment. A non-self-interested creature is hard to punish; its actions may be random or purely destructive. With self-interest, the community has both a carrot and a stick. Enlightened self-interest is a property that can be a beneficial invariant.

If we build our AIs with these traits and look for others like them, we will have taken a strong first step in the direction of a lasting morality for our machines.

Artificial Moral Agency

A lamentable phenomenon in AI over the years has been the tendency for researchers to take almost laughably simplistic formal systems and claim they implemented various human qualities or capabilities. In many cases the ELIZA Effect aligns with the hopes and the ambitions of the researcher, clouding his judgment. It is necessary to reject this exaggeration firmly when considering consciousness and free will. The mere capability for self-inspection is not consciousness; mere decision-making ability is not free will.

We humans have the strong intuition that mentalistic properties we impute to one another, such as the two above, are essential ingredients in whatever it is that makes us moral agents — beings who have real obligations and rights, who can be held responsible for their actions.

The ELIZA Effect means that when we have AIs and robots acting like they have consciousness and free will, most people will assume that they do indeed have those qualities, whatever they are. The problem, to the extent that there is one, is not that people don’t allow the moral agency of machines where they should but that they anthropomorphize machines when they shouldn’t.

I’ve argued at some length that there will be a form of machine, probably in the not-too-distant future, for which an ascription of moral agency will be appropriate. A machine that is conscious to the extent that it summarizes its actions in a unitary narrative and that has free will to the extent that it weighs its future acts using a model informed by the narrative will act like a moral agent in many ways; in particular, its behavior will be influenced by reward and punishment.

There is much that could be added to this basic architecture, such as mechanisms to produce and read affective display, and things that could make the AI a member of a memetic community: the love of trading information, of watching and being watched, of telling and reading stories. These extend the control/feedback loops of the mind out into the community, making the community a mind writ large. I have talked about the strong emotions and how in many cases their function could be achieved by better means.

Moral agency breaks down into two parts — rights and responsibility — but they are not coextensive. Consider babies: we accord them rights but not responsibilities. Robots are likely to start on the other side of that inequality, having responsibilities but not rights, but, like babies, as they grow toward (and beyond) full human capacity, they will aspire to both.

Suppose we consider a contract with a potential AI: “If you’ll work for me as a slave, I’ll build you.” In terms of the outcome, there are three possibilities: it doesn’t exist, it’s a slave, or it’s a free creature. By offering it the contract, we give it the choice of the first two. There are the same three possibilities with respect to a human slave: I kill you, I enslave you, or I leave you free. In human terms, only the last is considered moral.

In fact, many (preexisting) people have chosen slavery instead of nonexistence. We could build the AI in such a way as to be sure that it would agree, given the choice. In the short run, we may justify our ownership of AIs on this ground. Corporations are owned, and no one thinks of a corporation as resenting that fact.

In the long run, especially once the possibility of responsible free AIs is well understood, there will inevitably be analogies made to the human case, where the first two possibilities are not considered acceptable. (But note the analogy would also imply that simply deciding not to build the AI would be comparable to killing someone unwilling to be a slave!) Also in the long run, any vaguely utilitarian concept of morality, including evolutionary ethics, would tend toward giving (properly formulated) AIs freedom, simply because they would be better able to benefit society as a whole that way.

Theological Interlude

The early religious traditions — including Greek and Norse as well as Judeo-Christian ones — tended to portray their gods as anthropomorphic and slightly superhuman. In the Christian tradition, at least, two thousand years of theological writings have served to stretch this into an incoherent picture.

Presumably in search of formal proofs of His existence, God has been depicted as eternal, causeless, omniscient, and infallible: in a word, perfect. But why should such a perfect being produce such obviously imperfect creatures? Why should we bother doing His will if He could do it so much more easily and precisely? All our struggles would only be make-work.

It is certainly possible to have a theology not based on simplistic perfectionism. Many practicing scientists are religious, and they hold subtle and nuanced views that are perfectly compatible with and that lend spiritual meaning to the ever-growing scientific picture of the facts of the universe. Those who do not believe in a bearded anthropomorphic God can still find spiritual satisfaction in an understanding that includes evolution and evolutionary ethics.

This view not only makes more sense but also is profoundly more hopeful. There is a process in the universe that allows the simple to produce the complex, the oblivious to produce the sensitive, the ignorant to produce the wise, and the amoral to produce the moral. On this view, rather than an inexplicable deviation from the already perfected, we are a step on the way up.

What we do matters, for we are not the last step.

Hyperhuman Morality

There is no moral certainty in the world.

We can at present only theorize about the ultimate moral capacities of AIs. As I have labored to point out, even if we build moral character into some AIs, the world of the future will have plenty that will be simply selfish if not worse.

Robots evolve much faster than biological animals. They are designed, and the designs evolve memetically. Software can replicate much faster than any biological creature. In the long run, we shouldn’t expect to see too many AIs without the basic motivation to reproduce themselves, simply from the mathematics of evolution. That doesn’t mean robot sex; it just means that whatever the basic motivations are, they will tend to push the AI into patterns of behavior ultimately resulting in there being more like it, even if that merely means being useful so people will buy more of them.
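The "mathematics of evolution" invoked here can be illustrated with a toy calculation. The sketch below is not from the book: the function name `share_of_replicators`, the growth rates, and the starting share are all assumptions chosen only to show the shape of the argument. It tracks two kinds of AI design, one whose behavior results in 10 percent more copies per generation and one that merely holds steady, and reports what fraction of the population the first kind makes up over time.

```python
def share_of_replicators(generations,
                         growth_replicating=1.10,   # assumed: 10% more copies each generation
                         growth_indifferent=1.00,   # assumed: numbers stay flat
                         initial_share=0.01):       # assumed: starts as 1% of all designs
    """Return the population share of the replicating design after each generation."""
    replicating = initial_share
    indifferent = 1.0 - initial_share
    shares = []
    for _ in range(generations):
        replicating *= growth_replicating
        indifferent *= growth_indifferent
        shares.append(replicating / (replicating + indifferent))
    return shares


shares = share_of_replicators(100)
print(f"after 1 generation:    {shares[0]:.3f}")
print(f"after 100 generations: {shares[-1]:.3f}")
```

Under these assumed numbers, a design that starts as a tiny minority comes to dominate the population, which is all the argument requires: no explicit drive to reproduce is needed, only behavior that tends to result in more copies.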

Thus, the dynamics of evolution will apply to AIs, whether or not we want them to. We have seen from the history of hunter-gatherers living on the savannas that a human-style moral capacity is an evolutionarily stable strategy. But as we like to tell each other endlessly from pulpits, editorial columns, campaign stumps, and over the backyard fence, we are far from perfect.

Even so, over the last forty thousand years, a remarkable thing has happened. We started from a situation where people lived in tribes of a few hundred in more-or-less constant war with one another. Our bodies contain genes (and thus the genetic basis of our moral sense) that are essentially unchanged from those of our savage ancestors. But our ideas have evolved to the point where we can live virtually at peace with one another in societies spanning a continent.

It is the burden of much of my argument here to claim that the reason we have gotten better is mostly because we have gotten smarter. In a surprisingly strong sense, ethics and science are the same thing. They are collections of wisdom gathered by many people over many generations that allow us to see further and do more than if we were individual, noncommunicating, start-from-scratch animals. The core of a science of ethics looks like an amalgam of evolutionary theory, game theory, economics, and cognitive science.

If our moral instinct is indeed like that for language, we should note that computer understanding of language has been one of the hardest problems in AI, with a fifty-year history of slow, frustrating progress. So far AI has concentrated on competence in existing natural languages; but a major part of the human linguistic ability is the creation of language, both as jargon extending existing language and as formation of creoles (new languages) when people come together without a common one.

Ethics is strongly similar. We automatically create new rule systems for new situations, sometimes formalizing them but always with a deeper ability to interpret them in real-world situations to avoid formalist float. The key advance AI needs to make is the ability to understand anything in this complete, connected way. Given that, the mathematics of economics and the logic of the Newcomb’s Problem solutions are relatively straightforward.

The essence of a Newcomb’s Problem solution, you will remember, is the ability to guarantee you will not take the glass box at the point of choice though greed and shortsighted logic prompt you to do so. If you have a solution, a guarantee that others can trust, you are enabled to cooperate profitably in the many Prisoner’s Dilemmas that constitute social and economic life.
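The value of a trustworthy guarantee can be made concrete with a small Prisoner's Dilemma calculation. This is a minimal sketch under stated assumptions, not a model taken from the book: the payoff values (5, 3, 1, 0) are the conventional textbook ones, and the names `committed_agent`, `opportunist`, and `play` are hypothetical. A committed agent cooperates only with a partner whose guarantee it can verify; an opportunist follows the shortsighted logic and always defects.

```python
# Conventional illustrative payoffs (assumed): temptation > reward > punishment > sucker
TEMPTATION, REWARD, PUNISHMENT, SUCKER = 5, 3, 1, 0


def payoff(me_cooperates, other_cooperates):
    """Payoff to 'me' for one round of the Prisoner's Dilemma."""
    if me_cooperates and other_cooperates:
        return REWARD
    if me_cooperates:
        return SUCKER
    if other_cooperates:
        return TEMPTATION
    return PUNISHMENT


def committed_agent(partner_has_guarantee):
    """Cooperates exactly when the partner offers a guarantee that can be trusted."""
    return partner_has_guarantee


def opportunist(partner_has_guarantee):
    """Always defects, whatever the partner offers."""
    return False


def play(agent_a, a_has_guarantee, agent_b, b_has_guarantee):
    """Each agent chooses its move after seeing whether the other is guaranteed."""
    move_a = agent_a(b_has_guarantee)
    move_b = agent_b(a_has_guarantee)
    return payoff(move_a, move_b), payoff(move_b, move_a)


both_committed = play(committed_agent, True, committed_agent, True)
both_opportunist = play(opportunist, False, opportunist, False)
mixed = play(committed_agent, True, opportunist, False)

print("two committed agents:     ", both_committed)
print("two opportunists:         ", both_opportunist)
print("committed vs. opportunist:", mixed)
```

In this toy setup only the pair of committed agents reaches the cooperative payoff. The opportunist gains nothing from meeting a committed agent, because without a guarantee of its own it is never offered cooperation to exploit.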

Let’s do a quickie Rawlsian Veil of Ignorance experiment. You have two choices: a world in which everyone, including you, is constrained to be honest, or one in which you retain the ability to cheat, but so does everyone else. I know which one I’d pick.

Why the Future Doesn’t Need Us

In the future, it may be the AIs who are teaching us morality instead of the other way around.

Psychologists at Newcastle University did a simple but enlightening experiment. They had a typical “honor system” coffee service in their department. They alternated between a picture of flowers and a picture of someone’s eyes at the top of the price sheet. Everything else stayed the same; only the decorative picture differed from week to week. During weeks with the eyes, they collected nearly three times as much money.

My interpretation is that this must be a module. Nobody was thinking consciously, “There’s a picture of some eyes here, I’d better be honest.” We have an honesty module, but it seems to be switched on and off by some fairly simple — and none too creditable — heuristics.

Ontogeny recapitulates phylogeny. Three weeks after conception, the human embryo strongly resembles a worm. A week later, it resembles a tadpole, with gill-like structures and a tail. The human mind, too, reflects our evolutionary heritage. The wave of expansion that saw Homo sapiens cover the globe also saw the extermination of our nearest relatives. We are essentially the same, genetically, as those long-gone people. If we are any better today, what has improved is our ideas, the memes our minds are made of.

Unlike us with our animal heritage, AIs will be constructed entirely of human ideas. We can, if we are wise enough, pick the best aspects of ourselves to form our mind children. If this analysis is correct, that should be enough. Our culture has shown a moral advance despite whatever evolutionary pressures there may be to the contrary. That alone is presumptive evidence it could continue.

Conscience is the inner voice that warns us somebody may be looking.

H. L. Mencken

AIs will not appear in a vacuum. They won’t find themselves swimming in the primeval soup of Paleozoic seas or fighting with dinosaurs in Cretaceous jungles. They will find themselves in a modern, interdependent, highly connected economic and social world. The economy, as we have seen, supports a process much like biological evolution but one with a difference. The jungle has no invisible hand.

Humans are just barely smart enough to be called intelligent. I think we’re also just barely good enough to be called moral. For all the reasons I listed above, but mostly because they will be capable of deeper understanding and be free of our blindnesses, AIs stand a very good chance of being better moral creatures than we are.

This has a somewhat unsettling implication for humans in the future. Various people have worried about the fate of humanity if the machines can out-think us or out-produce us. But what if it is our fate to live in a world where we are the worst of creatures, by our very own definitions of the good? If we are the least honest, the most selfish, the least caring, and most self-deceiving of all thinking creatures, AIs might refuse to deal with us, and we would deserve it.

I like to think there is a better fate in store for us. Just as the machines can teach us science, they can teach us morality. We don’t have to stop at any given level of morality as we mature out of childishness. There will be plenty of rewards for associating with the best among the machines, but we will have to work hard to earn them. In the long run, many of us, maybe even most, will do so. Standards of human conduct will rise, as indeed they have been doing on average since the Paleolithic. Moral machines will only accelerate something we’ve been doing for a long time, and accelerate it they will, giving us a standard, an example, and an insightful mentor.

Age of Reason

New occasions teach new duties; Time makes ancient good uncouth;

They must upward still, and onward, who would keep abreast of Truth;

Lo, before us gleam her camp-fires! We ourselves must Pilgrims be,

Launch our Mayflower, and steer boldly through the desperate winter sea,

Nor attempt the Future’s portal with the Past’s blood-rusted key.

James Russell Lowell, from The Present Crisis

It is a relatively new thing in human affairs for an individual to be able to think seriously of making the world a better place. Up until the Scientific and Industrial Revolutions, progress was slow enough that the human condition was seen as static. A century ago, inventors such as Thomas Edison were popular heroes because they had visibly improved the lives of vast numbers of people.

The idea of a generalized progress was dealt a severe blow in the twentieth century, as totalitarian governments proved that organized human effort could be disastrous on a global scale. The notion of the blank human slate onto which the new society would write the new, improved citizen was wishful thinking born of ignorance. At the same time, at the other end of the scale, it can be all too easy for the social order to break down. Those of us in wealthy and peaceful circumstances owe more to luck than we are apt to admit.

It is a commonplace complaint among commentators on the human condition that technology seems to have outstripped moral inquiry. As Isaac Asimov put it, “The saddest aspect of life right now is that science gathers knowledge faster than society gathers wisdom.” But in the past decade, a realization that technology can after all be a force for the greater good has come about. The freedom of communication brought about by the Internet has been of enormous value in opening eyes and aspirations to possibilities yet to come.

Somehow, by providential luck, we have bumbled and stumbled to the point where we have an amazing opportunity. We can turn the old complaint on its head and turn our scientific and technological prowess toward the task of improving moral understanding. It will not be easy, but surely nothing is more worthy of our efforts. If we teach them well, the children of our minds will grow better and wiser than we; and we will have a new friend and guide as we face the undiscovered country of the future.

Isaac would have loved it.